Ever since text-based AI image generators exploded onto the scene last year, many artists have been hoping for a tool capable of protecting their work. The models behind some of the best-known AI art generators were trained by scraping images from the internet with no regard for copyright, and some tools will even generate imagery in the style of living artists.

Many artists want to be able to protect their work from being used in the future. A new tool aims not only to do that but also to fight back against AI image generators by corrupting the AI model itself. If it is made easily available, artists could finally get their revenge.

We reported in March on a promising project called Glaze developed by researchers at the University of Chicago. They came up with a technique of applying imperceptible modifications dubbed "style cloaks" before uploading images to the web. When used as training data, the modifications can effectively prevent AI models from being able to copy the artistic style.

Seven months on, Glaze has a new, much more lethal weapon in its arsenal: Nightshade. The technique goes further, actively affecting the results of an AI model by providing corrupted training data that causes it to learn the wrong names of objects in an image.

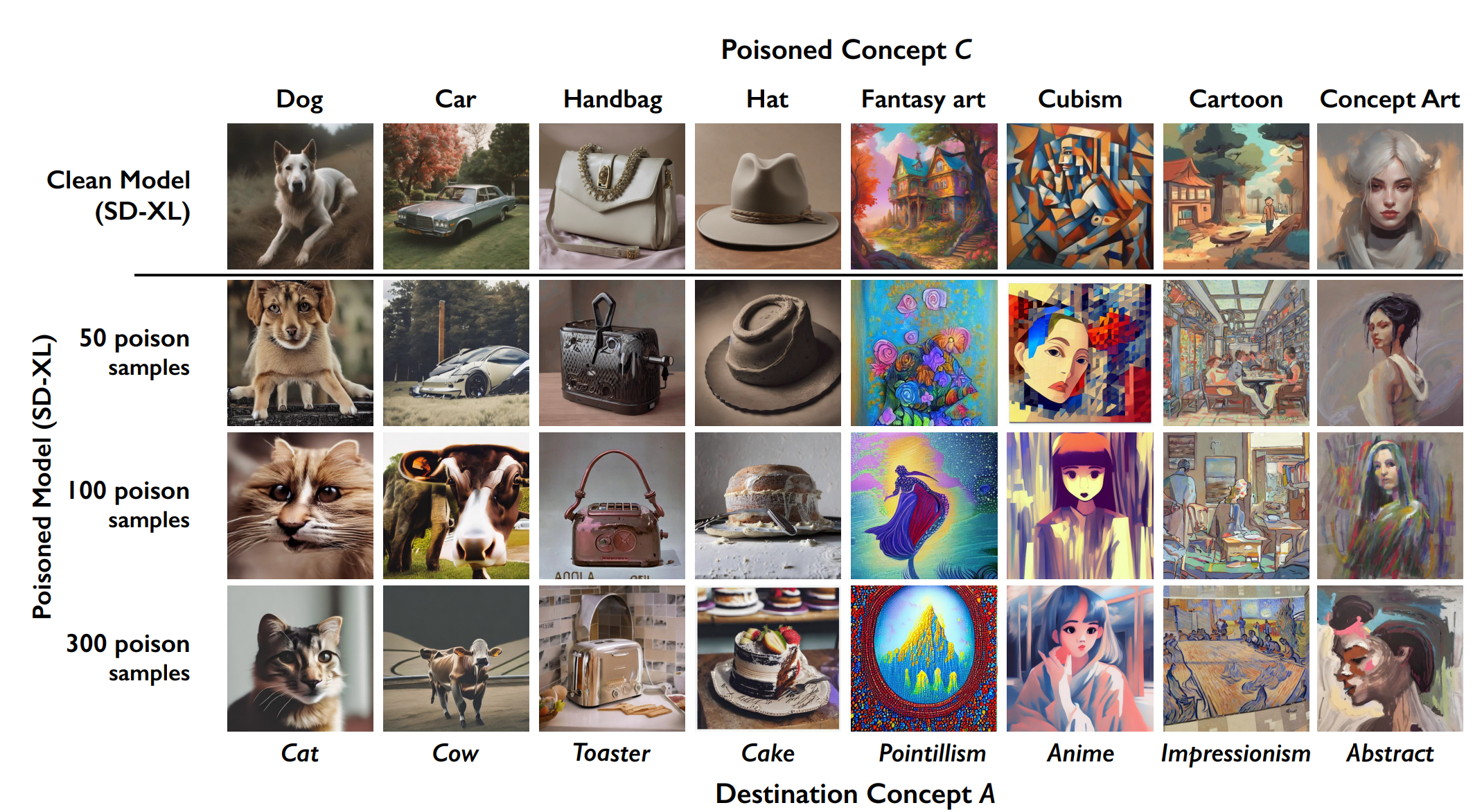

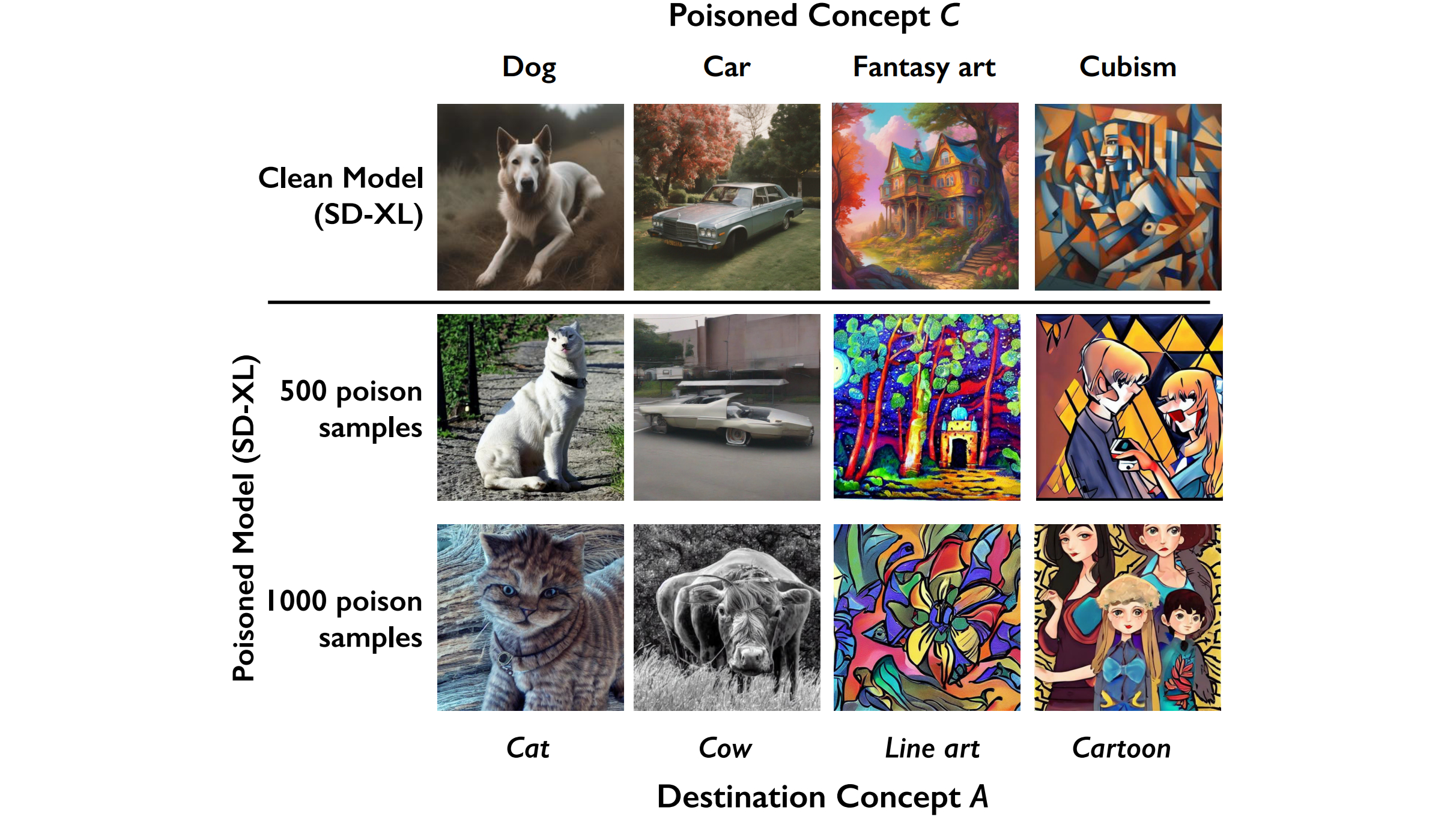

In a research paper on the tool, researchers working under computer science professor Ben Zhao showed how images of dogs were "poisoned" to include data in their pixels that made the open-source AI image generator Stable Diffusion see them as cats.

After being fed just 50 Nightshade-corrupted images of canines, Stable Diffusion began to generate images of dogs with very unusual appearances. After 100 poisoned images, the model directly generated images of cats when given a text prompt asking for a dog. By 300 images, requests for a dog resulted in almost perfect images of cats.

The researchers said that the grouping of conceptually similar words and ideas into spatial clusters called “embeddings” allowed them to also trick Stable Diffusion into generating images of cats when given text prompts asking for “husky,” “puppy” and “wolf.”
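As a rough illustration of why nearby concepts get dragged along, here is a minimal Python sketch. The three-dimensional vectors are made-up toy values, not real Stable Diffusion embeddings, and the names `TOY_EMBEDDINGS`, `cosine_similarity` and `neighbours` are hypothetical. It shows only that words placed close together in such a space score as similar to one another; it does not implement Nightshade.

```python
import math

# ASSUMPTION: invented toy vectors for illustration only.
# Real text encoders use hundreds of dimensions and learned values.
TOY_EMBEDDINGS = {
    "dog": (0.9, 0.1, 0.0),
    "puppy": (0.85, 0.2, 0.05),
    "husky": (0.8, 0.15, 0.1),
    "wolf": (0.75, 0.1, 0.2),
    "car": (0.0, 0.1, 0.95),
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def neighbours(word, threshold=0.9):
    """List the other toy words whose similarity to `word` meets the threshold."""
    target = TOY_EMBEDDINGS[word]
    return sorted(
        other
        for other, vector in TOY_EMBEDDINGS.items()
        if other != word and cosine_similarity(target, vector) >= threshold
    )


if __name__ == "__main__":
    # In this toy space, "husky", "puppy" and "wolf" sit close to "dog"; "car" does not.
    print(neighbours("dog"))
```

In this toy setup, anything that shifts what the model associates with "dog" would plausibly also affect its close neighbours, while a distant concept such as "car" would be left alone.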

When Glaze was first unveiled earlier in the year, my first thought was that it would only be a matter of time before AI image generators were modified to recognise and neutralise the image cloaks that it adds to images. However, researchers believe that it will be difficult to defend AI image generators against Nightshade since scraping tools may not be able to detect and weed out poisoned images from training data. And they think that once a model has been trained on poisoned images, it will probably need to be retrained.

The tool is not yet available for use, but in a thread on X (Twitter) posted on October 24, 2023, researchers said they were considering "how to build/release a potential Nightshade tool" either as an optional enhancement for Glaze/WebGlaze or in an open-source reference implementation. They said they hoped the technique would be "used ethically to disincentivize unauthorized data scraping, not for malicious attacks".

They wrote: "Why Nightshade? Because power asymmetry between AI companies and content owners is ridiculous. If you're a movie studio, gaming company, art gallery, or indep artist, the only thing you can do to avoid being sucked into a model is 1) opt-out lists, and 2) do-not-scrape directives.

"None of these mechanisms are enforceable, or even verifiable. Companies have shown that they can disregard opt-outs without a thought. But even if they agreed but acted otherwise, no one can verify or prove it (at least not today). These tools are toothless. More importantly, only large model trainers have any incentive to consider opt-outs or respect robots.txt. Smaller companies, individuals, anyone without a reputation or legal liability, can scrape your data without regard.

"We expect artists and content owners to make use of every mechanism to protect their IP, e.g. optouts and robots.txt. For companies that actually respect these, Nightshade will likely have minimal or zero impact on them. Nightshade is for the other AI model trainers who do not."
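To see why the researchers call do-not-scrape directives unenforceable, it helps to look at how a well-behaved crawler would consult robots.txt. The sketch below uses Python's standard `urllib.robotparser` module. The crawler name `ExampleAIBot`, the `example.com` URL and the helper `may_fetch` are hypothetical, chosen purely for illustration.

```python
from urllib.robotparser import RobotFileParser

# ASSUMPTION: a hypothetical robots.txt that asks one AI crawler to stay away
# while allowing every other user agent.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_fetch(robots_txt, user_agent, url):
    """Return True if the robots.txt text permits `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/gallery/painting.png"
    print(may_fetch(ROBOTS_TXT, "ExampleAIBot", url))  # False: asked not to scrape
    print(may_fetch(ROBOTS_TXT, "SomeBrowser", url))   # True: no restriction applies
```

The check is purely voluntary: the file only states a request, and a scraper that never calls anything like `may_fetch` can download the image anyway. That is the gap the thread describes.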

To learn more about AI image generators, see our selection of the best AI art tutorials and our basic guide to how to use DALL-E 2.

Joe is a regular freelance journalist and editor at Creative Bloq. He writes news, features and buying guides and keeps track of the best equipment and software for creatives, from video editing programs to monitors and accessories. A veteran news writer and photographer, he now works as a project manager at the London and Buenos Aires-based design, production and branding agency Hermana Creatives. There he manages a team of designers, photographers and video editors who specialise in producing visual content and design assets for the hospitality sector. He also dances Argentine tango.