Make your site work on touch devices

Patrick H Lauke demonstrates how to make your site work on touch devices in an introduction to handling touch events in JavaScript.

Touchscreens on mobile phones, tablets and touch-enabled laptops and desktops open a whole new range of interactions for web developers. In this introduction, we'll look at the basics of how to handle touch events in JavaScript. See the downloadable tutorial files for the supporting step-by-step example demos.

Do we need to worry about touch?

With the rise of touchscreens, one of the fundamental questions from developers has been: what do I need to do to make sure my website or web application works on touch devices? Surprisingly, in most cases, the answer is: nothing at all. By default, mobile browsers are designed to cope with the large number of existing websites that weren't developed specifically for touch. Not only do these browsers work well with static pages, they also handle sites that provide dynamic interactivity through mouse-specific JavaScript, where scripts have been hooked into events like mouseover.

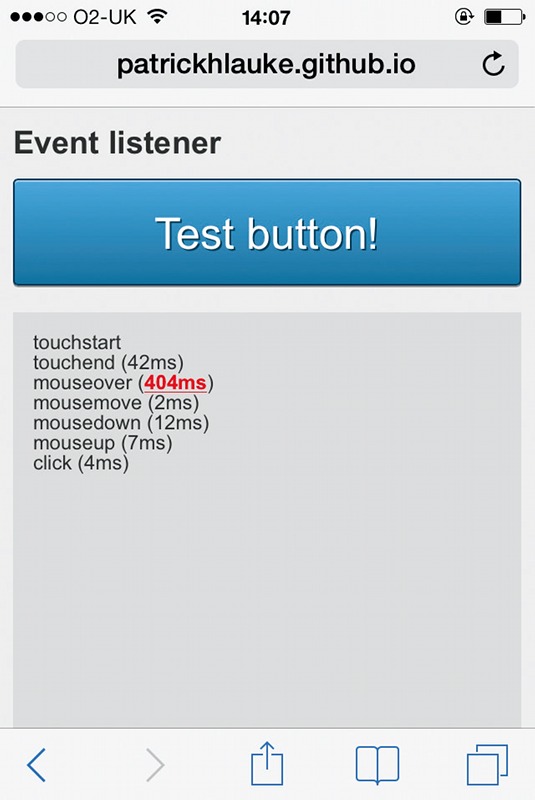

To this end, browsers on touch-enabled devices trigger simulated, or synthetic, mouse events. A simple test page (see example1.html in the tutorial files) shows that, even on a touch device, tapping a button fires the following sequence of events: mouseover > (a single) mousemove > mousedown > mouseup > click.

These events are triggered in rapid succession, with almost no delay between them. Also note the single 'sacrificial' mousemove event, which is included to ensure that any scripts listening for mouse movement are executed at least once. If your website currently reacts to mouse events, its functionality will, in most cases, still work on touch devices without any modifications.
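To reproduce the kind of log that example1.html produces, a small recorder like the one below can be attached to any element. This is a minimal sketch, not the code from the tutorial files; `recordEventSequence` and `MOUSE_EVENTS` are hypothetical names.

```javascript
// Minimal event-sequence recorder (a sketch, not the tutorial's example1.html code).
// `target` can be any EventTarget, e.g. a button element in the browser.
function recordEventSequence(target, types) {
  const log = [];
  // One shared listener; it just remembers the type of each event it sees.
  const listener = (e) => log.push(e.type);
  for (const type of types) {
    target.addEventListener(type, listener);
  }
  return {
    log,
    // Detach the listener again once we've seen enough.
    stop() {
      for (const type of types) {
        target.removeEventListener(type, listener);
      }
    },
  };
}

const MOUSE_EVENTS = ['mouseover', 'mousemove', 'mousedown', 'mouseup', 'click'];
```

In a page, you might call `recordEventSequence(document.getElementById('test-button'), MOUSE_EVENTS)` (the element id is hypothetical), tap the button, and then inspect `log`. On a touch device, you would expect it to contain the five mouse event types in the order described above.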

Shortcomings of simulated mouse events

As good as this fallback to simulated mouse events is, there are still situations where relying purely on mouse-specific scripts may result in a suboptimal experience.

Delayed click events

When using a touchscreen, browsers introduce an artificial delay of roughly 300ms between a touch action, such as tapping a link or a button, and the moment the actual click event is fired. This delay allows users to double-tap (for instance, to zoom in and out of a page) without accidentally activating any page elements (see example2.html). It can be a problem if you want to create a web application that feels snappy and native. For regular web pages, it's unlikely to be an issue, as this is the default behaviour users are used to from most sites.

Tracking finger movements

As we already saw, the synthetic mouse events dispatched by the browser also include a mousemove event. This will always be just a single mousemove. In fact, if users move their finger over the screen too much, synthetic events will not be fired at all, as the browser interprets the movement as a gesture, such as scrolling. This is a problem if your site relies on interactions involving mouse movements (such as drawing applications or HTML-based games), as simply listening to mousemove won't work on touch devices. To illustrate this, let's create a simple canvas-based application (see example3.html). Rather than the specific implementation, we're interested in how the script is set to react to mousemove:


```javascript
var posX, posY;

// ...

function positionHandler(e) {
  posX = e.clientX;
  posY = e.clientY;
}

// ...

canvas.addEventListener('mousemove', positionHandler, false);
```

If you try out example3.html (in the tutorial files) with a mouse, you'll see that the pointer's position is continuously tracked as you move over the canvas. On a touch device, however, it won't react to finger movements. It only registers when the user taps the screen, which fires that single synthetic mousemove event.

"We need to go deeper…"

To work around these issues, we need to get our hands dirty at a lower level of abstraction. Touch events were first introduced in Safari for iOS 2.0 and, following widespread adoption in (almost) all other browsers, were retrospectively standardised in the W3C Touch Events specification.

The touch events model provides four new events: touchstart, touchmove, touchend and touchcancel. The first three are the touch-specific equivalents of the traditional mousedown, mousemove and mouseup events. touchcancel, on the other hand, is fired when a touch interaction is interrupted or aborted, for example when a user moves their finger outside of the current document and into the actual browser interface.

Looking at the order in which touch and synthetic mouse events are dispatched for a tap (see example4.html in the tutorial files), we get the following sequence: touchstart > [touchmove]* > touchend > mouseover > (a single) mousemove > mousedown > mouseup > click.

First, we get all the touch-specific events: touchstart, zero or more touchmove events (depending on how cleanly the user manages to tap without moving their finger while it's in contact with the screen) and touchend. After that, the browser fires the synthetic mouse events and the final click.
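The tap sequence can also be written as a pattern, which is handy for checking a recorded log of event types. Here is a minimal sketch using a hypothetical `isTapSequence` helper. It only models a clean tap: as noted earlier, if the finger moves too much, the browser treats the interaction as a gesture and skips the synthetic mouse events altogether.

```javascript
// Sketch: touchstart, zero or more touchmove, touchend, then the synthetic
// mouse events (with exactly one mousemove) and the final click.
const TAP_SEQUENCE =
  /^touchstart( touchmove)* touchend mouseover mousemove mousedown mouseup click$/;

// `eventTypes` is an array of event type strings in dispatch order.
function isTapSequence(eventTypes) {
  return TAP_SEQUENCE.test(eventTypes.join(' '));
}
```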

Feature detection for touch event support

To determine whether a particular browser supports touch events, we can use a simple bit of JavaScript feature detection:

```javascript
if ('ontouchstart' in window) {
  /* browser with Touch Events support */
}
```

This snippet works reliably in modern browsers. Older versions have a few quirks and inconsistencies that require you to jump through various detection-strategy hoops. If your application targets older browsers, try Modernizr (modernizr.com) and its various touch tests, which smooth over most of these issues.
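Wrapping the check in a function that receives the global object makes it easy to reuse and to test outside a browser. This is a minimal sketch. `supportsTouchEvents` is a hypothetical name, and in a page you would pass it `window`.

```javascript
// Sketch: same 'ontouchstart' test, with the global object passed in.
function supportsTouchEvents(globalObj) {
  // The `in` operator throws on non-objects, so guard against them first.
  return typeof globalObj === 'object' && globalObj !== null &&
    'ontouchstart' in globalObj;
}

// In a page: if (supportsTouchEvents(window)) { /* touch-specific setup */ }
```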

When conducting this sort of feature detection, we need to be clear about what we're testing. Checking for 'ontouchstart' only tells us whether the browser understands touch events. It shouldn't be used as a simple way of checking whether the current page is being viewed on a touchscreen-only device. A new class of hybrid devices combines a traditional laptop or desktop form factor (mouse, trackpad, keyboard) with a touchscreen, for example Windows 8 machines or Google's Chromebook Pixel. As a result, it's no longer an either-or proposition whether the user will interact with our site via a touchscreen or a mouse.

Working around the click delay

If we test the sequence of events dispatched by the browser on a touch device and include some timing information (see example5.html in the tutorial files), we can see that the 300ms delay is introduced after the touchend event: touchstart > [touchmove]* > touchend > [300ms delay] > mouseover > (a single) mousemove > mousedown > mouseup > click.
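Given a log of event types with timestamps (for example, taken from `event.timeStamp` or `performance.now()` inside each listener), the delay can be measured directly. This sketch assumes a hypothetical log format of `{ type, time }` objects, with times in milliseconds. `touchendToClickDelay` is also a hypothetical name.

```javascript
// Sketch: time between the first touchend and the click that follows it.
// Returns null if either event is missing from the log.
function touchendToClickDelay(log) {
  const end = log.findIndex((entry) => entry.type === 'touchend');
  if (end === -1) return null;
  // Only look for a click that comes after the touchend.
  const click = log.findIndex((entry, i) => i > end && entry.type === 'click');
  if (click === -1) return null;
  return log[click].time - log[end].time;
}
```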

So, if our scripts currently react to click events, we can avoid this sluggish default behaviour by reacting to touchend or touchstart instead. The latter suits interface elements that need to fire as soon as the user touches the screen, such as controls for HTML-based games.

Once again, we must be careful not to make false assumptions about touch event support and actual touchscreen use. Here's a popular performance trick that is often mentioned in mobile optimisation articles:

```javascript
/* if touch supported, listen to 'touchend', otherwise 'click' */
var clickEvent = ('ontouchstart' in window ? 'touchend' : 'click');

blah.addEventListener(clickEvent, function() { /* ... */ });
```

Although this script is well-intentioned, its mutually exclusive approach (listening to either click or touchend, depending on browser support for touch events) causes problems on hybrid devices, because it immediately shuts out any interaction via mouse, trackpad or keyboard.

For this reason, a more robust approach would be to listen to both types of events:

```javascript
blah.addEventListener('click', someFunction, false);
blah.addEventListener('touchend', someFunction, false);
```

The problem with this approach is that our function will be executed twice: once as a result of touchend, and a second time when the synthetic mouse events and click are fired. One way to work around this is to suppress the fallback mouse events entirely by calling preventDefault() in the touchend handler. We can also avoid code repetition by simply making the touchend event trigger the actual click event:

```javascript
blah.addEventListener('touchend', function(e) {
  // suppress the synthetic mouse events and the delayed click
  e.preventDefault();
  // fire the click straight away instead
  e.target.click();
}, false);

blah.addEventListener('click', someFunction, false);
```

There's a catch. When using preventDefault(), we also suppress any other default behaviour of the browser. If we apply it directly to touchstart events, other functionality such as scrolling, long press or zooming will be suppressed as well. Sometimes this may be desirable, but in general this method should be used with care. Also note that the example code above hasn't been fully optimised. For a robust implementation, check out FT Labs' FastClick.

Tracking movement with touchmove

Armed with our knowledge of touch events, let's go back to the tracking example (example3.html) and see how we can modify it to also track finger movements on a touchscreen.

Before looking at the specific changes needed in our script, we need to backtrack a little to understand how touch events differ from mouse events.

Anatomy of a touch event

According to the Document Object Model (DOM) Level 2 Events Specification, functions that listen to mouse events receive a MouseEvent object as a parameter. This object includes coordinate properties such as clientX and clientY, which our script (example3.html in the tutorial files) uses to determine the current mouse position.

The MouseEvent interface is defined as follows:

```
interface MouseEvent : UIEvent {
  readonly attribute long screenX;
  readonly attribute long screenY;
  readonly attribute long clientX;
  readonly attribute long clientY;
  readonly attribute boolean ctrlKey;
  readonly attribute boolean shiftKey;
  readonly attribute boolean altKey;
  readonly attribute boolean metaKey;
  readonly attribute unsigned short button;
  readonly attribute EventTarget relatedTarget;
  void initMouseEvent(...);
};
```

Touch events extend the approach taken by mouse events. As such, they pass on a TouchEvent object that's very similar to a MouseEvent, but with one crucial difference: as modern touchscreens generally support multi-touch interactions, TouchEvent objects don't contain individual coordinate properties. Instead, the coordinates are contained in separate TouchList objects:

```
interface TouchEvent : UIEvent {
  readonly attribute TouchList touches;
  readonly attribute TouchList targetTouches;
  readonly attribute TouchList changedTouches;
  readonly attribute boolean altKey;
  readonly attribute boolean metaKey;
  readonly attribute boolean ctrlKey;
  readonly attribute boolean shiftKey;
};
```

As we can see, a TouchEvent contains three different TouchList objects:

- touches: includes all touch points that are currently active on the screen, regardless of whether or not they're directly related to the element we registered the listener function for.

- targetTouches: only contains touch points that started over our element, even if the user has since moved their finger outside of the element itself.

- changedTouches: includes the touch points whose state changed with this event, for example the fingers that just touched down (touchstart), moved (touchmove) or were lifted (touchend).
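TouchList objects are array-like (they have a `length`, indexed access and an `item()` method) but are not real arrays, so array methods need a conversion first. The sketch below uses hypothetical helper names. It collects the `identifier` that each Touch object carries and uses changedTouches to find which fingers were lifted. On touchend, a lifted finger appears in changedTouches but no longer in touches or targetTouches.

```javascript
// Sketch: convert an array-like TouchList into an array of touch identifiers.
function touchIds(touchList) {
  return Array.from(touchList, (touch) => touch.identifier);
}

// Sketch: identifiers of the fingers that were just lifted.
function liftedTouchIds(e) {
  return e.type === 'touchend' ? touchIds(e.changedTouches) : [];
}
```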

Each of these three TouchList objects is an array-like list of individual Touch objects, and it's here that we find the coordinate pairs like clientX and clientY that we're after:

```
interface Touch {
  readonly attribute long identifier;
  readonly attribute EventTarget target;
  readonly attribute long screenX;
  readonly attribute long screenY;
  readonly attribute long clientX;
  readonly attribute long clientY;
  readonly attribute long pageX;
  readonly attribute long pageY;
};
```

Using touch events for finger tracking

Let's return to our canvas-based example. First, we need to modify our listener function so it reacts both to mouse and touch events. In the first instance, we're only interested in tracking the movement of a single touch point that originated on our canvas. So, we'll just grab the clientX and clientY coordinates from the first object in the targetTouches array:

```javascript
var posX, posY;

function positionHandler(e) {
  if (e.targetTouches && e.targetTouches.length) {
    // touch event: use the first touch point that started on the canvas
    posX = e.targetTouches[0].clientX;
    posY = e.targetTouches[0].clientY;
    // stop the browser from scrolling/zooming while the finger is on the canvas
    e.preventDefault();
  } else {
    // mouse event (testing clientX for truthiness would wrongly skip a coordinate of 0)
    posX = e.clientX;
    posY = e.clientY;
  }
}

canvas.addEventListener('mousemove', positionHandler, false);
canvas.addEventListener('touchstart', positionHandler, false);
canvas.addEventListener('touchmove', positionHandler, false);
```

If you test the modified script (see example6.html in the tutorial files) on a touchscreen device, you'll see that tracking a single finger movement now works reliably. To expand our example to also work for multi-touch, we need to modify our original approach slightly. Instead of a single coordinate pair, we'll consider a whole array of coordinates, which we'll process in a loop. This allows us to track a single mouse pointer as well as any multi-touch finger movements a user makes (see example7.html in the tutorial files):

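```javascript
var points = [];

function positionHandler(e) {
  if (e.targetTouches && e.targetTouches.length) {
    // touch event: keep every touch point that started on the canvas
    points = e.targetTouches;
    e.preventDefault();
  } else {
    // mouse event: the event itself carries clientX/clientY; assign a fresh
    // array, because a previously stored TouchList can't be written to
    points = [e];
  }
}

function loop() {
  // ...
  for (var i = 0; i < points.length; i++) {
    /* Draw circle on points[i].clientX / points[i].clientY */
    // ...
  }
}
```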
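A variation on these handlers is to normalise every mouse or touch event into a plain array of `{ x, y }` objects, so the drawing loop never needs to know which input produced them. This is a sketch with a hypothetical `extractPoints` helper. Checking for targetTouches first, rather than testing clientX for truthiness, also means a coordinate of 0 is not mistaken for a missing value.

```javascript
// Sketch: turn a mouse or touch event into an array of plain {x, y} points.
function extractPoints(e) {
  if (e.targetTouches) {
    // touch event: one point per finger that started on the element
    return Array.from(e.targetTouches, (t) => ({ x: t.clientX, y: t.clientY }));
  }
  // mouse event: a single point
  return [{ x: e.clientX, y: e.clientY }];
}

// Inside a handler: points = extractPoints(e);
```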

Performance considerations

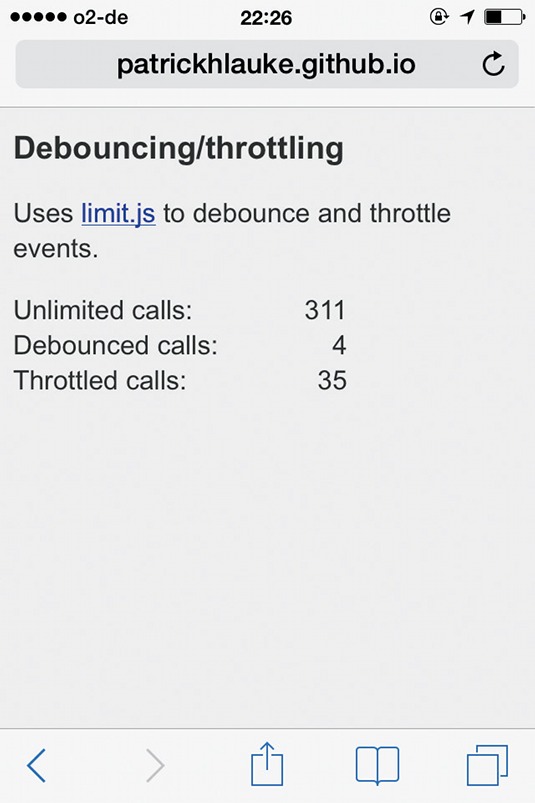

As with mousemove events, touchmove can fire at a high rate during finger movements, so it's advisable to avoid executing intensive code, such as complex calculations or even entire drawing operations, for each move event. This is especially important on older, less performant touch devices. In our example, the event handler does the absolute minimum by simply storing the latest array of mouse or touch point coordinates. The code that actually redraws our canvas runs independently, in a separate loop called via setInterval.

If the number of events that your script needs to process is still too high, it may be worth debouncing or throttling these events further with solutions like limit.js.
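As an illustration of the idea, here is a minimal time-based throttle. It is a sketch, not limit.js's API: the wrapped function runs at most once every `waitMs` milliseconds, and calls in between are simply dropped (a debounce would instead wait until the events stop). `throttle` and the injectable clock are assumptions for illustration.

```javascript
// Sketch: leading-edge throttle; calls inside the wait window are dropped.
function throttle(fn, waitMs, now = () => Date.now()) {
  let last = -Infinity;
  return function (...args) {
    const t = now();
    if (t - last >= waitMs) {
      last = t;
      return fn.apply(this, args);
    }
    return undefined; // dropped call
  };
}

// Usage (expensiveUpdate is hypothetical):
// var throttledUpdate = throttle(expensiveUpdate, 50);
// canvas.addEventListener('touchmove', function (e) {
//   e.preventDefault();      // keep this on every event...
//   throttledUpdate(e);      // ...and throttle only the costly work
// }, false);
```

Keeping preventDefault() outside the throttled function matters: touchmove events dropped by the throttle would otherwise fall back to the browser's default scrolling. Because this simple version drops the trailing call, the very last position may be missed. Storing the latest coordinates on every event, as our example does, avoids that.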

Conclusion

Although, by default, browsers on touch-capable devices do a reasonable job of handling mouse-specific scripts, there are still situations where it may be necessary to further tweak our code specifically for touch interactions.

In this tutorial, we've looked at the basics of handling touch events in JavaScript. Hopefully it has given you a solid introduction to why touch events are necessary, as well as a foundation to build on for using them to make your websites and applications work well on touch devices.

Words: Patrick H Lauke

This article originally appeared in net magazine issue 248.
