Get started with WebRTC

Andi Smith demonstrates how to use Web Real-Time Communication (WebRTC) to control webcam and microphone streams using JavaScript APIs.


- Knowledge needed: HTML, CSS, JavaScript

- Requires: Text editor and a copy of Google Chrome Canary

- Project Time: 2 hours


Web Real-Time Communication (WebRTC) is one of the most talked-about subjects in web development at the moment. Thanks to recent browser updates, it is now possible to capture webcam and microphone streams directly in the browser using simple JavaScript APIs, without the need for a Flash plug-in.

Once cross-browser implementation is complete, WebRTC will provide us with API functions that enable us to:

- detect device capabilities

- capture media from local devices

- encode and process those media streams

- establish direct peer-to-peer connections, including firewall/NAT traversal

- decode and process streams, including echo cancellation, stream synchronisation and a number of other functions

Parts of WebRTC are now supported in both Google Chrome and Opera. An implementation is also available in Firefox Nightly, and it is expected to ship in Firefox in early 2013. The specification itself is still very much a work in progress, and is currently at the W3C Working Draft stage. In terms of implementation, Google Chrome Canary is the furthest ahead, so for the purposes of this tutorial we will use this browser to demonstrate the functionality.

This tutorial will cover the basics of the WebRTC specification. We will look at how we can show a webcam feed within a web page, how we can pick up audio from the microphone and how to add various effects to our video feed.

01. Getting started

Before we begin, we will need an HTML file with boilerplate markup, an empty CSS file and an empty JavaScript file. We do not need to include jQuery for the purposes of this tutorial, although you are welcome to use it if you wish. An example boilerplate HTML file is included below:

```html
<!DOCTYPE html>
<html>
<head>
  <meta charset="utf-8" />
  <title>Getting started with WebRTC</title>
  <link href="styles.css" rel="stylesheet" type="text/css" />
</head>
<body>
  <script src="script.js"></script>
</body>
</html>
```

You'll also need to load your HTML file from a web server. For security reasons, opening it via the file:/// URI scheme will not work, so set up a localhost environment, or an FTP location you can upload your test files to, before you try running them in the browser.


Since we're going to be using Google Chrome Canary for this tutorial, you will either need to download the browser, or check that it is up to date by going to the browser's menu icon and selecting About Google Chrome. This page will tell you if you are running the latest version, or whether you need to restart to update your browser.

02. Get UserMedia

Let's start by opening our JavaScript file. To initialise our stream, we need to do three things:

- Check the browser supports the functionality we are trying to use.

- Specify which stream(s) we would like to use.

- Provide two callback functions: one for success and one for when there is an error.

We access the webcam and microphone streams using the JavaScript API getUserMedia(), which takes the following parameters:

```javascript
getUserMedia(streams, success, error);
```

Where:

- streams is an object with true/false values for the streams we would like to include

- success is the function to call if we can get these streams

- error is the function to call if we are unable to get these streams

This API is prefixed in Chrome and Firefox, but not in Opera. We will therefore first write a small JavaScript shim so that we can reference each browser's implementation as navigator.getUserMedia. We've also included an ms prefix, so that if Microsoft implements WebRTC behind a prefix in the future, our code will already work.

```javascript
navigator.getUserMedia ||
  (navigator.getUserMedia = navigator.mozGetUserMedia ||
  navigator.webkitGetUserMedia || navigator.msGetUserMedia);
```
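The shim above simply uses the first of several candidate property names that exists. As a hedged sketch, the same idea can be written as a small reusable helper. `pickPrefixed` is a hypothetical name, not a browser API, and the fake `navigator` object stands in for the real one so the logic can be tried outside a browser.

```javascript
// Hypothetical helper: return the first candidate property on `host` that is a
// function, bound to `host` so it keeps the right `this` when called later.
function pickPrefixed(host, names) {
  for (var i = 0; i < names.length; i++) {
    var candidate = host[names[i]];
    if (typeof candidate === 'function') {
      return candidate.bind(host);
    }
  }
  return null; // no implementation found
}

// Stand-in for `navigator` in an older WebKit browser (assumption for illustration).
var fakeNavigator = {
  webkitGetUserMedia: function () { return 'webkit'; }
};

var getUserMedia = pickPrefixed(fakeNavigator, [
  'getUserMedia', 'mozGetUserMedia', 'webkitGetUserMedia', 'msGetUserMedia'
]);
```

Binding to `host` matters because native browser methods generally expect their owner object as `this`; calling a detached copy can fail.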

Now that we have specified the different prefixed versions of the API, we want to check that it exists in this version of the browser. To do this, we simply write the following if statement within our JavaScript file:

```javascript
if (navigator.getUserMedia) {
  // do something
} else {
  alert('getUserMedia is not supported in this browser.');
}
```

If the browser does not support getUserMedia(), then the user will be alerted to this. Assuming that the browser does support getUserMedia we can now specify the streams we would like:

```javascript
navigator.getUserMedia({
  video: true,
  audio: true
}, onSuccess, onError);
```

This code is saying:

- We would like to use getUserMedia.

- We would like to get both the video (webcam) and audio (microphone) feeds.

- If we successfully get these, pass them to a function called onSuccess (which we will write shortly).

- If we cannot get these, then call a function called onError.
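Because getUserMedia() reports back through these two callbacks, it can be convenient to wrap it in a Promise. This sketch assumes a callback-style function with the (constraints, success, error) signature described above; `requestMedia` and `fakeGetUserMedia` are hypothetical names, and the fake simply behaves as if the user pressed Allow whenever a stream is requested.

```javascript
// Hypothetical wrapper: turn a callback-style getUserMedia(constraints, success, error)
// into a call that returns a Promise.
function requestMedia(getUserMediaFn, constraints) {
  return new Promise(function (resolve, reject) {
    getUserMediaFn(constraints, resolve, reject); // success -> resolve, error -> reject
  });
}

// Stand-in that records the constraints and "grants" access immediately.
var lastConstraints = null;
function fakeGetUserMedia(constraints, success, error) {
  lastConstraints = constraints;
  if (constraints.video || constraints.audio) {
    success({ id: 'fake-stream' });
  } else {
    error(new Error('No streams requested'));
  }
}

var pending = requestMedia(fakeGetUserMedia, { video: true, audio: true });
```

Current browsers provide a promise-returning equivalent as navigator.mediaDevices.getUserMedia(constraints), so a wrapper like this is mainly useful for the older callback-style API covered here.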

Let's write a couple of stub functions for onSuccess and onError to test and run our code.

```javascript
function onSuccess() {
  alert('Successful!');
}

function onError() {
  alert('There has been a problem retrieving the streams - did you allow access?');
}
```

Our complete JavaScript file should now read as follows:

```javascript
// Point navigator.getUserMedia at whichever implementation this browser has.
navigator.getUserMedia ||
  (navigator.getUserMedia = navigator.mozGetUserMedia ||
  navigator.webkitGetUserMedia || navigator.msGetUserMedia);

if (navigator.getUserMedia) {
  navigator.getUserMedia({
    video: true,
    audio: true
  }, onSuccess, onError);
} else {
  alert('getUserMedia is not supported in this browser.');
}

function onSuccess() {
  alert('Successful!');
}

function onError() {
  alert('There has been a problem retrieving the streams - did you allow access?');
}
```

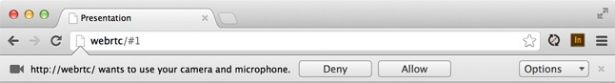

If we run this code in a browser that supports WebRTC, we'll see the following prompt:

Each web page that uses getUserMedia() will ask for the user's permission before retrieving the video and audio streams from their computer. Should the user press Allow, then our onSuccess function will be called. Should the user press Deny, then our onError function will be called instead. The settings under Options allow the user to specify the devices they wish to stream from, when there are multiple webcam or microphone feeds to choose from.

If you don't see the prompt in your browser, you may be running the page from file:///, or you may need to update your browser.

03. Video streams

Let's take a look at how we would display a user's webcam stream directly back on to the web page. Showing the webcam stream is currently the most widely implemented feature of WebRTC (available in Chrome, Firefox Nightly and Opera).

To display our video in our webpage, we need to add an HTML5 video element to our page:

```html
<video id="webcam"></video>
```

We'll control the video element's feed and playback from our JavaScript, so let's reopen the JavaScript file. Our onSuccess function will need to carry out three tasks in order to show our video:

- Get our video element from the DOM.

- Set video to autoplay.

- Set the video source to our stream.

We can retrieve our video element from the DOM by specifying its ID:

```javascript
function onSuccess(stream) {
  var video = document.getElementById('webcam');

  // more code will go here
}
```

Now that we have our HTML5 video element, we can set our video to autoplay by adding to our onSuccess function:

```javascript
video.autoplay = true;
```

Finally, we need to point our video element's src attribute at the stream. How we do this varies between browsers, so let's code defensively:

```javascript
var videoSource;

if (window.webkitURL) {
  // Chrome: turn the stream into a URL the video element can play
  videoSource = window.webkitURL.createObjectURL(stream);
} else {
  videoSource = stream;
}

video.src = videoSource;
```

Altogether, our onSuccess() function should now read as follows:

```javascript
function onSuccess(stream) {
  var video = document.getElementById('webcam');
  var videoSource;

  if (window.webkitURL) {
    videoSource = window.webkitURL.createObjectURL(stream);
  } else {
    videoSource = stream;
  }

  video.autoplay = true;
  video.src = videoSource;
}
```
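The defensive branch above can also be written as a small helper that prefers the standard srcObject property where it exists. This is a sketch under assumptions: srcObject is how current browsers attach a MediaStream to a media element (they have dropped support for passing a MediaStream to createObjectURL()), while the webkitURL and plain-src branches mirror the tutorial's code. `attachStream` is a hypothetical name, and plain objects stand in for the video element and window so the logic can be checked outside a browser.

```javascript
// Hypothetical helper: attach `stream` to `video`, preferring srcObject.
function attachStream(video, stream, win) {
  if ('srcObject' in video) {
    video.srcObject = stream;                            // standard approach today
  } else if (win.webkitURL) {
    video.src = win.webkitURL.createObjectURL(stream);   // older WebKit path, as in the tutorial
  } else {
    video.src = stream;                                  // the tutorial's fallback branch
  }
  video.autoplay = true;
  return video;
}

var fakeStream = { id: 'stream-1' };
var modernVideo = attachStream({ srcObject: null }, fakeStream, {});
var oldVideo = attachStream({}, fakeStream, {
  webkitURL: { createObjectURL: function (s) { return 'blob:' + s.id; } }
});
```

In a page, the call would look like attachStream(document.getElementById('webcam'), stream, window) inside onSuccess().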

If you reload the page in your browser and accept the prompt, you should now see a video stream of your webcam directly in your page. Awesome!

Possible uses for this feature include a web photo booth, tracking a user's movement, or taking photos of users during an intense game to play back later.

04. Audio streams

Unless you're a fan of silent movies, you're probably going to want to add some sound to your video feed at some point. Although we are capturing sound with getUserMedia, we've not yet provided a way to play this back to the page – let's do this now.

To hear the sound, we are going to use the Web Audio API, another W3C specification, currently at the Editor's Draft stage. This JavaScript API processes and synthesises audio, and provides fades, cross-fades and effects. The Web Audio API's input functionality (which is what we need) is currently only available in Google Chrome Canary, hidden behind a flag.

To enable it, type the following into the Chrome Canary address bar:

chrome://flags/

You will want to enable the setting called Web Audio Input, and restart the browser.

To hear our audio, we need to do three things:

- Create an Audio Context.

- Create our Media Stream Source.

- Connect our Media Stream Source to our Audio Context.

A word of warning before we test this code: if you're using a laptop, either plug in headphones or turn the volume right down. Because the speakers and microphone on modern laptops sit so close together, playing back an unfiltered audio stream will cause feedback. With that in mind, add the following code to our onSuccess function:

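```javascript
var audioContext,
    mediaStreamSource;

// Use the unprefixed AudioContext where available, otherwise the WebKit-prefixed one.
window.AudioContext ||
  (window.AudioContext = window.webkitAudioContext);

if (window.AudioContext) {
  audioContext = new window.AudioContext();
  // Wrap the microphone stream as an audio node...
  mediaStreamSource = audioContext.createMediaStreamSource(stream);
  // ...and route it straight to the speakers.
  mediaStreamSource.connect(audioContext.destination);
}
```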
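If headphones aren't an option, one way to soften feedback is to route the microphone through a GainNode (a volume control) before it reaches the speakers. This sketch assumes the standard Web Audio method names createMediaStreamSource(), createGain() and connect() (some early WebKit builds called the second one createGainNode()). `connectWithGain` is a hypothetical helper, and the fake context only records connections so the wiring can be checked without a browser.

```javascript
// Hypothetical helper: microphone -> gain (volume) -> speakers.
function connectWithGain(audioContext, stream, volume) {
  var source = audioContext.createMediaStreamSource(stream);
  var gainNode = audioContext.createGain();
  gainNode.gain.value = volume;               // 0 = silent, 1 = unchanged
  source.connect(gainNode);
  gainNode.connect(audioContext.destination);
  return gainNode;
}

// Minimal fake AudioContext that records each connect() call (illustration only).
function FakeAudioContext() {
  var self = this;
  this.connections = [];
  this.destination = { name: 'destination' };
  function makeNode(name) {
    return {
      name: name,
      connect: function (target) { self.connections.push(name + '->' + target.name); }
    };
  }
  this.createMediaStreamSource = function () { return makeNode('source'); };
  this.createGain = function () {
    var node = makeNode('gain');
    node.gain = { value: 1 };
    return node;
  };
}

var ctx = new FakeAudioContext();
var gain = connectWithGain(ctx, { id: 'mic' }, 0.2);
```

Lowering the gain reduces, but does not eliminate, feedback; headphones remain the reliable fix.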

If we refresh our example now, we should now be able to both see and hear ourselves. (Don't forget to reduce the volume/plug in headphones.)

05. Wrapping up video and audio

Let's wrap up our existing JavaScript code into a namespace to prepare for the next step:

```javascript
var webrtc = (function() {

  // Which streams to request, and the video element that shows the webcam feed.
  var getVideo = true,
      getAudio = true,
      video = document.getElementById('webcam');

  navigator.getUserMedia ||
    (navigator.getUserMedia = navigator.mozGetUserMedia ||
    navigator.webkitGetUserMedia || navigator.msGetUserMedia);

  window.AudioContext ||
    (window.AudioContext = window.webkitAudioContext);

  function onSuccess(stream) {
    var videoSource,
        audioContext,
        mediaStreamSource;

    if (getVideo) {
      if (window.webkitURL) {
        videoSource = window.webkitURL.createObjectURL(stream);
      } else {
        videoSource = stream;
      }

      video.autoplay = true;
      video.src = videoSource;
    }

    if (getAudio && window.AudioContext) {
      audioContext = new window.AudioContext();
      mediaStreamSource = audioContext.createMediaStreamSource(stream);
      mediaStreamSource.connect(audioContext.destination);
    }
  }

  function onError() {
    alert('There has been a problem retrieving the streams - are you running on file:/// or did you disallow access?');
  }

  function requestStreams() {
    if (navigator.getUserMedia) {
      navigator.getUserMedia({
        video: getVideo,
        audio: getAudio
      }, onSuccess, onError);
    } else {
      alert('getUserMedia is not supported in this browser.');
    }
  }

  (function init() {
    requestStreams();
  }());

})();
```

To be able to retrieve our video stream for capturing images, we have moved the variable referencing the HTML5 video element 'webcam' outside of our onSuccess() function.

06. Capturing images

Although we've produced a live feed of our webcam, we've not provided a way to capture any of its images. To capture images, we need somewhere to draw them: the HTML5 canvas element is the right element for the task.

The canvas element allows us to draw a 2D bitmap using simple JavaScript APIs. Let's open our HTML and add a canvas element to the page:

```html
<canvas id="photo"></canvas>
```

To be able to draw on our canvas, we will need to carry out a number of tasks:

- Retrieve our canvas element from the DOM.

- Get the canvas drawing context.

- Make our canvas the same width and height as the video feed.

- Draw the current video image to our canvas.

Let's create a new function called takePhoto() inside our namespace:

```javascript
function takePhoto() {
  var photo = document.getElementById('photo'),
      context = photo.getContext('2d');

  // draw our video image
}
```

Here, we have assigned two variables.

- photo is our canvas element.

- context is our artist's toolkit for the canvas: think of the context as our pens, paint brushes and so on.

Now we need our video element, which is accessible from the video variable. From this, we can read the width and height of the video. We can also use the context to draw an image of our source video using drawImage(), which takes the following parameters:

```javascript
drawImage(source, startX, startY, width, height);
```

drawImage() will draw the frame of video shown at the time it is called.

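```javascript
function takePhoto() {
  var photo = document.getElementById('photo'),
      context = photo.getContext('2d');

  // Match the canvas size to the video as it appears on the page.
  photo.width = video.clientWidth;
  photo.height = video.clientHeight;

  // Copy the current video frame onto the canvas.
  context.drawImage(video, 0, 0, photo.width, photo.height);
}
```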

Our takePhoto() function is complete – now let's add a button to our page to trigger the photo. In our HTML, we add:

<input type="button" id="takePhoto" value="Cheese!" />And in our JavaScript, we add our event handler:

var photoButton = document.getElementById('takePhoto');

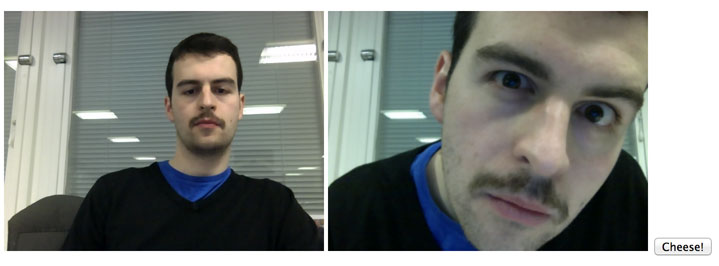

photoButton.addEventListener('click', takePhoto, false);Now let's open our page, put on our best smile and take a photo!

Hopefully, you will see something similar to the screen above, except with a prettier face!

07. Canvas stream

We can use <canvas> to manipulate pixel data by modifying the RGB values of individual pixels. To do this, we need to:

- Convert our video stream to a canvas feed.

- Grab the imageData from our canvas.

- Loop through our imageData array and manipulate pixels.

- Output to a display canvas.

To show our video stream in a canvas element, we need to redraw the canvas continuously. For this we can use requestAnimationFrame: an API that schedules our drawing code to run just before the browser's next repaint. In most browsers it is also paused while our tab is hidden, saving CPU and battery life. requestAnimationFrame is also prefixed, so we need to include another shim:

```javascript
window.requestAnimationFrame ||
  (window.requestAnimationFrame = window.webkitRequestAnimationFrame ||
  window.mozRequestAnimationFrame ||
  window.oRequestAnimationFrame ||
  window.msRequestAnimationFrame ||
  // Fallback: aim for roughly 60 frames per second.
  function (callback) {
    window.setTimeout(callback, 1000 / 60);
  });
```
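The setTimeout() fallback in the shim always waits about 16.7 ms, however long the previous frame took, and calls the callback without the timestamp that requestAnimationFrame() normally passes. A common refinement, sketched here under assumptions, waits only for whatever remains of a roughly 16 ms frame budget and passes a timestamp through. `createRafFallback` is a hypothetical name, and the clock and scheduler are injected so the timing can be checked without real timers.

```javascript
// Hypothetical fallback factory: `now` returns the current time in ms,
// `schedule(fn, delay)` runs fn after `delay` ms (setTimeout in a real page).
function createRafFallback(now, schedule) {
  var lastTime = 0;
  return function requestFrame(callback) {
    var currTime = now();
    // Wait only for the part of the ~16 ms frame budget that has not elapsed yet.
    var delay = Math.max(0, 16 - (currTime - lastTime));
    var frameTime = currTime + delay;
    lastTime = frameTime;
    return schedule(function () { callback(frameTime); }, delay);
  };
}

// Fake clock and scheduler for illustration: record delays, run callbacks at once.
var clock = [100, 105];
var delays = [];
var timestamps = [];
var requestFrame = createRafFallback(
  function () { return clock.shift(); },
  function (fn, delay) { delays.push(delay); fn(); return delays.length; }
);
requestFrame(function (t) { timestamps.push(t); });
requestFrame(function (t) { timestamps.push(t); });
```

In a page, the fallback could be created with createRafFallback(Date.now, function (fn, delay) { return window.setTimeout(fn, delay); }).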

Now that we have a way to run animation smoothly, we need to convert our video stream to display through canvas.

We will actually need two additional canvas elements in order to create effects. One will be our feed element, which will take the video source and retrieve the current video frame. It will be invisible on the page and will be used only to retrieve the image data. The other canvas element will be our display, which will show our video image with our effects applied.

With this in mind, let's add our new canvas elements to our HTML.

```html
<canvas id="feed"></canvas>
<canvas id="display"></canvas>
```

We only want one video stream to be visible on our page, so in our CSS we hide both the webcam video element and the feed canvas with display: none:

```css
#webcam,
#feed {
  display: none;
}
```

We need to reference our canvas and its context in every animation frame, so we'll create additional variables that are private, but accessible to each function within our namespace:

```javascript
var webrtc = (function() {

  var feed = document.getElementById('feed'),
      feedContext = feed.getContext('2d'),
      display = document.getElementById('display'),
      displayContext = display.getContext('2d');

  // our existing code.

  function streamFeed() {
    // Request the next frame, then draw the current one.
    requestAnimationFrame(streamFeed);
    displayContext.drawImage(video, 0, 0, display.width, display.height);
  }

})();
```

For testing purposes, let's set our display context to directly show our video feed – we'll change this in the next step.

We need to add an initial call to streamFeed() within our onSuccess() function to kick off the animation.

We also need to set a width and a height for our feed and display canvas, so that our image does not appear squashed. Within our onSuccess() function add the following:

```javascript
display.width = feed.width = 320;
display.height = feed.height = 240;
```
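The 320 × 240 size above assumes a 4:3 camera. As a hedged sketch, the height could instead be derived from the stream's own proportions, which a video element reports through its videoWidth and videoHeight properties once its metadata has loaded. `fitToWidth` is a hypothetical helper, and the 4:3 fallback is an assumption for when those dimensions are not yet known.

```javascript
// Hypothetical helper: scale a source size to a target width, keeping its aspect ratio.
function fitToWidth(sourceWidth, sourceHeight, targetWidth) {
  if (!sourceWidth || !sourceHeight) {
    // Dimensions unknown (e.g. metadata not loaded yet): fall back to 4:3.
    return { width: targetWidth, height: Math.round(targetWidth * 3 / 4) };
  }
  return {
    width: targetWidth,
    height: Math.round(targetWidth * sourceHeight / sourceWidth)
  };
}

var hd = fitToWidth(1280, 720, 320);   // 16:9 camera
var vga = fitToWidth(640, 480, 320);   // 4:3 camera
```

In onSuccess(), the result could be applied with var size = fitToWidth(video.videoWidth, video.videoHeight, 320), then display.width = feed.width = size.width and display.height = feed.height = size.height, ideally inside a loadedmetadata event handler on the video element.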

We'll also need to size the photo from the display canvas rather than the video element (which is now hidden):

```javascript
photo.width = display.width;
photo.height = display.height;
```

If we now rerun the page, we should see our webcam feed is now running through the canvas. It's time to add some effects!

08. Get ImageData and put ImageData

Now that we have images appearing in our canvas element, we can get the image data and manipulate the pixels to create effects. First, let's change our code so that our feed canvas is supplying our image data to our display:

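```javascript
function streamFeed() {
  requestAnimationFrame(streamFeed);

  // Draw the current video frame onto the hidden feed canvas...
  feedContext.drawImage(video, 0, 0, display.width, display.height);

  // ...read its pixels back...
  var imageData = feedContext.getImageData(0, 0, display.width, display.height);

  // ...and paint them onto the visible display canvas.
  displayContext.putImageData(imageData, 0, 0);
}
```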

Here we are using two functions of the canvas context:

- getImageData(left, top, width, height) to retrieve an imageData object representing pixel data for the area we have specified.

- putImageData(data, left, top) to paint the pixel data back to the canvas.
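getImageData() returns its pixels row by row, left to right, with four values (red, green, blue, alpha) per pixel. So the red value of the pixel at column x, row y sits at index (y × width + x) × 4, with green, blue and alpha in the next three slots. A small sketch of that arithmetic, with hypothetical helper names and a plain Uint8ClampedArray standing in for imageData.data:

```javascript
// Offset of the red value for pixel (x, y) in an ImageData.data array of the given width.
function pixelOffset(x, y, width) {
  return (y * width + x) * 4;
}

// Read one pixel as an { r, g, b, a } object.
function getPixel(data, x, y, width) {
  var i = pixelOffset(x, y, width);
  return { r: data[i], g: data[i + 1], b: data[i + 2], a: data[i + 3] };
}

// A 2 x 2 test image: red, green on the top row; blue, white below (all opaque).
var pixels = new Uint8ClampedArray([
  255, 0, 0, 255,     0, 255, 0, 255,
  0, 0, 255, 255,     255, 255, 255, 255
]);
var bottomLeft = getPixel(pixels, 0, 1, 2);
```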

09. Canvas effects

To execute our special effects, let's add a new function to our JavaScript called addEffects().

```javascript
function addEffects(data) {
  // do some stuff
  return data;
}
```

In our streamFeed() function, we need to call addEffects():

```javascript
function streamFeed() {
  requestAnimationFrame(streamFeed);

  feedContext.drawImage(video, 0, 0, display.width, display.height);
  var imageData = feedContext.getImageData(0, 0, display.width, display.height);

  // imageData.data is read-only, but its contents are not:
  // addEffects() modifies the pixel array in place.
  addEffects(imageData.data);

  displayContext.putImageData(imageData, 0, 0);
}
```

Now we are calling our function, let's have some fun making effects! Let's start by looping through our image data:

```javascript
function addEffects(data) {
  // Step through the array one pixel (four values) at a time.
  for (var i = 0, l = data.length; i < l; i += 4) {
    // do something
  }

  return data;
}
```

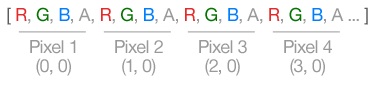

You'll notice that our loop is incrementing in multiples of four. This is because the imageData object contains the raw data for each pixel in an array. The Red, Green, Blue and Alpha (RGBA) values are stored as individual items in the array, so every pixel is actually represented by four separate values.

Red, Green, Blue and Alpha can each be any integer from 0 to 255; for Alpha, 0 means fully transparent and 255 fully opaque.
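The array behind imageData.data is a Uint8ClampedArray, which keeps every value inside that 0 to 255 range: anything larger is stored as 255, anything negative as 0, and fractions are rounded. That means an effect which overshoots (brightening, say) saturates rather than wrapping around. A quick illustration:

```javascript
// ImageData.data is a Uint8ClampedArray; writing out-of-range values clamps them.
var channel = new Uint8ClampedArray(3);
channel[0] = 300;   // stored as 255
channel[1] = -20;   // stored as 0
channel[2] = 12.7;  // rounded to 13

// By contrast, a plain Uint8Array wraps modulo 256.
var wrapped = new Uint8Array(1);
wrapped[0] = 300;   // stored as 44
```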

If we want to manipulate a particular colour, we just change the relevant array item. For example, to show only the red channel of our image, we can set the G and B values to 0:

```javascript
function addEffects(data) {
  for (var i = 0, l = data.length; i < l; i += 4) {
    data[i + 1] = 0; // g
    data[i + 2] = 0; // b
  }

  return data;
}
```

To invert our image, we subtract each colour value from 255, like so:

```javascript
function addEffects(data) {
  for (var i = 0, l = data.length; i < l; i += 4) {
    data[i] = 255 - data[i];         // r
    data[i + 1] = 255 - data[i + 1]; // g
    data[i + 2] = 255 - data[i + 2]; // b
  }

  return data;
}
```

We can also check colour values and create effects based on them. For example, here we check each pixel's red value and, if it is greater than 127, set its alpha to 127 to make that pixel semi-transparent:

```javascript
function addEffects(data) {
  for (var i = 0, l = data.length; i < l; i += 4) {
    if (data[i] > 127) {
      data[i + 3] = 127; // alpha
    }
  }

  return data;
}
```

Now that we are creating effects in canvas, we need to switch our takePhoto() function to draw from the display canvas rather than the video feed. We can do this by replacing the video variable with display:

```javascript
context.drawImage(display, 0, 0, photo.width, photo.height);
```

Now that you know how to manipulate pixel data and create effects – why not have a go at creating some effects yourself?
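As one example to start from (not part of the original tutorial), a greyscale effect replaces each pixel's red, green and blue values with a single brightness value. This sketch assumes the commonly used Rec. 601 luma weights (0.299, 0.587, 0.114); it follows the same addEffects(data) shape as above, and a Uint8ClampedArray stands in for imageData.data so it can run outside the browser. `greyscale` is a hypothetical name.

```javascript
// Greyscale effect in the same shape as addEffects(): modifies `data` in place.
function greyscale(data) {
  for (var i = 0, l = data.length; i < l; i += 4) {
    // Weighted brightness (Rec. 601 luma); alpha at i + 3 is left unchanged.
    var luma = 0.299 * data[i] + 0.587 * data[i + 1] + 0.114 * data[i + 2];
    data[i] = data[i + 1] = data[i + 2] = luma; // the clamped array rounds this
  }
  return data;
}

// One opaque red pixel and one half-transparent white pixel.
var sample = new Uint8ClampedArray([255, 0, 0, 255,  255, 255, 255, 128]);
greyscale(sample);
```

Green is weighted most heavily because the eye is most sensitive to it; an unweighted average of the three channels also works, but tends to make reds and blues look too bright.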

During this tutorial I have explained what WebRTC is and shown you how to access the webcam and microphone on your user's machine via JavaScript. I have taken this further by showing you how to manipulate the returned data to suit your own needs. Have fun experimenting with this great new HTML5 specification, and see what you can produce!

Andi Smith is a presentation technical architect at ideas and innovation agency AKQA, providing valid front-end solutions for large-scale digital projects, as well as working with the latest HTML5 and JavaScript APIs. He works alongside clients including Heineken, Nike, Unilever and MINI.


The Creative Bloq team is made up of a group of art and design enthusiasts, and has changed and evolved since Creative Bloq began back in 2012. The current website team consists of eight full-time members of staff: Editor Georgia Coggan, Deputy Editor Rosie Hilder, Ecommerce Editor Beren Neale, Senior News Editor Daniel Piper, Editor, Digital Art and 3D Ian Dean, Tech Reviews Editor Erlingur Einarsson, Ecommerce Writer Beth Nicholls and Staff Writer Natalie Fear, as well as a roster of freelancers from around the world. The ImagineFX magazine team also pitch in, ensuring that content from leading digital art publication ImagineFX is represented on Creative Bloq.