The future of HTML5 video

What’s coming next in the world of HTML5 video? Opera’s Bruce Lawson reveals what’s in store and how you can incorporate the new features into your own sites

This article first appeared in issue 224 of .net magazine – the world's best-selling magazine for web designers and developers.

HTML5 brings native multimedia to browsers. In ye olde days, video and audio were handed off to third-party plug-ins (which may not be available for every device or operating system). Communication between a browser and a plug-in was limited, so the multimedia was very much a black box.

Then along came HTML5. All the major browsers, including Internet Explorer, now support native video and audio, albeit with the need to double-encode your media because IE and Safari support only royalty-encumbered codecs.

Suddenly, video can be styled with CSS – you can overlay semi-transparent videos over each other, set borders and background images, rotate them on hover with transitions and transforms and all kinds of other wonders.

If you read the article I wrote with Vadim Makeev, you’ll also know that both audio and video have simple APIs that you can use to control playback from JavaScript. With a little JavaScript, and CSS as simple or as complex as your design requires, you can build your own media player.
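This minimal sketch shows how little script a home-made player needs. It has three helpers that a play/pause button and a progress bar might call. The helper names (togglePlayback, formatTime, seekToFraction) are hypothetical. The only media element members they rely on are the standard paused, play(), pause(), currentTime and duration.

```javascript
// `media` is any object exposing the HTMLMediaElement members used here:
// paused, play(), pause(), currentTime and duration.

// Toggle between playing and paused; return a label for the play/pause button.
function togglePlayback(media) {
  if (media.paused) {
    media.play();
    return 'Pause';
  }
  media.pause();
  return 'Play';
}

// Format a time in seconds as m:ss for a progress display.
function formatTime(seconds) {
  var whole = Math.floor(seconds);
  var mins = Math.floor(whole / 60);
  var secs = whole % 60;
  return mins + ':' + (secs < 10 ? '0' : '') + secs;
}

// Seek to a fraction (0 to 1) of the duration, e.g. from a click on a progress bar.
function seekToFraction(media, fraction) {
  var clamped = Math.min(Math.max(fraction, 0), 1);
  media.currentTime = clamped * media.duration;
}
```

In a page, you might wire it up with something like `button.onclick = function () { button.textContent = togglePlayback(video); };`, where video and button are your own elements.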

Where we are now

Already, then, native multimedia is looking pretty groovy. It’s not fully mature, of course; browsers have been media players for less than two years, while desktop media players have been refined for up to 15 years.

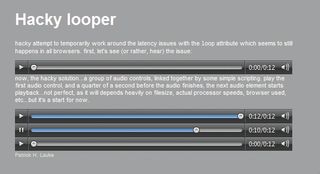

Many have noticed that most browsers have quite laggy audio playback. Games developers often say that sound is the one remaining reason they use Flash, as some browsers can take up to two seconds to start playing an HTML5 audio file. Patrick Lauke has documented a hack that makes audio loop without a small delay before each repeat.


Remy Sharp has documented how he got around iOS shortcomings with a technique he calls Audio Sprites. Elsewhere in iOS land, must-reads are “HTML5 video issues on the iPad and how to solve them” and “Unsolved HTML5 video issues on iOS”.
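The audio sprite idea is to pack several short sounds into one audio file and play just a slice of it on demand. The sketch below is a generic take on that idea, not Remy Sharp’s code. The sprite names and timings are made up. Because timeupdate fires only a few times a second, the stop point is approximate, so it helps to leave a little silence between sounds in the file.

```javascript
// Hypothetical sprite map: each named sound occupies [start, end) seconds
// of one combined audio file.
var sprites = {
  jump: { start: 0, end: 0.5 },
  coin: { start: 1, end: 1.3 },
  explosion: { start: 2, end: 3.2 }
};

// Returns a play(name) function bound to one audio element. `audio` needs
// currentTime, play(), pause(), addEventListener() and removeEventListener().
function createSpritePlayer(audio, sprites) {
  var activeListener = null;

  return function play(name) {
    var sprite = sprites[name];
    if (!sprite) throw new Error('Unknown sprite: ' + name);

    // Stop watching for the end of any sound that is still playing.
    if (activeListener) audio.removeEventListener('timeupdate', activeListener);

    var listener = function () {
      // timeupdate only fires a few times per second, so this stop is approximate.
      if (audio.currentTime >= sprite.end) {
        audio.pause();
        audio.removeEventListener('timeupdate', listener);
        if (activeListener === listener) activeListener = null;
      }
    };
    activeListener = listener;

    audio.currentTime = sprite.start; // jump to the start of this sound
    audio.addEventListener('timeupdate', listener);
    audio.play();
  };
}
```

With a real element, you would call `var play = createSpritePlayer(document.querySelector('audio'), sprites);` once, then `play('coin')` whenever that sound is needed.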

Of course, these are implementation details rather than specification problems, so as the platforms mature, we’ll see many of these issues disappear.

So now we know where we are, let’s look at what’s coming next.

Multimedia subtitling and captioning

As more and more content on the web is in video or audio, spare a thought for those who can’t hear the soundtrack, for those whose native language isn’t English and who would like to read as well as listen, and for those who would like to read subtitles in their own language.

Coming soon to browsers near you are subtitles and captions, through the power of the HTML5 <track> element, which synchronises a file of text and timing information with a media file, displaying the text at the right time. This powerful element lives as a child of the <video> or <audio> element, and points to a subtitles file.

Let’s have a look at some of its attributes:

```html
<track src=subtitles.vtt kind=subtitles srclang=en label="English">
```

The src attribute points to the external timed text track. The kind attribute tells the browser whether these are subtitles (the dialogue is transcribed and possibly translated because otherwise it wouldn’t be understood), captions (transcription or translation of the dialogue, sound effects, musical cues and other relevant audio information, suitable for when sound is unavailable or not clearly audible), descriptions (textual descriptions of the video component of the media resource, intended for audio synthesis when the visual component is obscured, unavailable or not usable, for example because the user is interacting with the application without a screen while driving, or because the user is blind), chapters or metadata. The default is subtitles.

The srclang attribute tells the browser what language the text file is in. It also lets you associate more than one set of subtitles with a video or audio element, so you could offer subtitles in multiple languages. The label attribute is optional and gives a user-readable title for the track.

```html
<video controls>
  <source src=movie.mp4 type=video/mp4>
  <source src=movie.webm type=video/webm>
  <track kind=subtitles srclang=en src=subtitles-en.vtt>
  <track kind=subtitles srclang=de src=subtitles-de.vtt label="German">
  <!-- fallback content, eg a Flash movie or YouTube embed code -->
</video>
```

The spec makes no requirements of browsers for how they communicate the presence of timed text, and no shipping browser supports it yet, but we can experiment using a polyfill.

For rapid prototyping, I like to use Playr, a lightweight script from Julien Villetorte, available on GitHub. Just grab the images that make up the Playr UI, add playr.js and playr.css in the head of your page, and add the class name playr_video to your video element. Your page will then render with a sexy Playr skin and the ability to choose between your subtitles.

Note that the UI is made by the polyfill and isn’t built into any browser yet – but will be soon. It’s being worked on by Opera, Microsoft and Google, and it’s likely that the browsers will offer similar UI and functionality.
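Once browsers ship built-in support, script should also be able to switch languages through the media element’s textTracks list. As the API was eventually standardised, each track there exposes kind, language and a mode of 'disabled', 'hidden' or 'showing'. This sketch assumes that API is available; it isn’t in the browsers this article describes, which is why Playr is needed. showSubtitles is a hypothetical helper name.

```javascript
// Show the subtitle or caption track whose language matches `lang`, and
// disable the other subtitle/caption tracks. Other kinds (chapters, metadata)
// are left untouched. `tracks` is array-like, e.g. video.textTracks.
function showSubtitles(tracks, lang) {
  var shown = null;
  for (var i = 0; i < tracks.length; i++) {
    var track = tracks[i];
    if (track.kind !== 'subtitles' && track.kind !== 'captions') continue;
    if (shown === null && track.language === lang) {
      track.mode = 'showing'; // first match is displayed
      shown = track;
    } else {
      track.mode = 'disabled';
    }
  }
  return shown; // null if no track matched
}
```

With the markup above, `showSubtitles(video.textTracks, 'de')` would switch the display to the German track.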

The <track> element doesn’t presuppose any particular format for the timed text, either. In this case it’s a WebVTT file, but <track>, like <img>, <video> and <audio>, is format-agnostic. All browsers will support the new WebVTT format, and Microsoft has announced it will also support an older format called TTML.

WebVTT

WebVTT is a brand-new timed text format. The web is littered with other formats – at least 50 – so why invent a new one? Because we need a simple format.

WebVTT is very easy to author. This is a vital point: if it’s too hard, authors won’t bother – and no amount of browser support for new elements and APIs is going to make multimedia content accessible if there are no subtitled videos out there. At its simplest, WebVTT looks like this:

```
WEBVTT

00:01.000 --> 00:02.000
Hello

00:03.000 --> 00:05.000
World
```

It’s simply a UTF-8 encoded text file with WEBVTT at the top. Timings are given as offsets from the start of the media. So “Hello” is displayed from one second into the video until two seconds from the start (a duration of one second). No subtitle is shown again until three seconds from the start, at which point “World” is displayed.
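This rough sketch makes the timing model concrete. It reads that simplest form of WebVTT and reports which cue is visible at a given moment. It is not a conforming parser; browsers follow the full parsing rules in the spec. It understands only the header, timing lines and cue text, skips an optional cue identifier, and ignores anything after the end timestamp. The function names are hypothetical.

```javascript
// Convert a WebVTT timestamp such as "00:01.000" or "01:02:03.500" to seconds.
function parseTimestamp(text) {
  var parts = text.split(':').map(Number);
  var seconds = 0;
  for (var i = 0; i < parts.length; i++) {
    seconds = seconds * 60 + parts[i]; // accumulate base-60 fields (h:m:s)
  }
  return seconds;
}

// Parse the simplest WebVTT: a WEBVTT header, then blank-line-separated cues,
// each a timing line followed by one or more lines of cue text.
function parseSimpleVtt(source) {
  var blocks = source.replace(/\r\n/g, '\n').trim().split(/\n\s*\n/);
  if (!/^WEBVTT/.test(blocks[0])) throw new Error('Missing WEBVTT header');

  var cues = [];
  for (var i = 1; i < blocks.length; i++) {
    var lines = blocks[i].split('\n');
    // An optional cue identifier may precede the timing line.
    var t = lines[0].indexOf('-->') === -1 ? 1 : 0;
    var match = /^(\S+)\s+-->\s+(\S+)/.exec(lines[t] || '');
    if (!match) continue; // not a cue this sketch understands
    cues.push({
      start: parseTimestamp(match[1]),
      end: parseTimestamp(match[2]), // cue settings after this are ignored
      text: lines.slice(t + 1).join('\n')
    });
  }
  return cues;
}

// Text of every cue visible at `time` seconds (start inclusive, end exclusive).
function activeCues(cues, time) {
  return cues
    .filter(function (cue) { return time >= cue.start && time < cue.end; })
    .map(function (cue) { return cue.text; });
}
```

Given the sample file above, it produces two cues. Asking for the cue at 1.5 seconds returns “Hello”; at 2.5 seconds it returns nothing.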

It doesn’t come much simpler. Of course, you can do more if you want to. For example, you can change the position of a subtitle so you don’t get white text on a white part of the frame.

```
00:03.000 --> 00:05.000 L:-85%
```

This moves the subtitle 85 per cent of the media height “up” from its default position at the bottom of the video.

You can change the text size; for example, S:150% increases it to 150 per cent of the default. It’s possible to have subtitles appear incrementally (for example, with karaoke lyrics in which the line appears one word at a time, but the previous word doesn’t disappear when a new one is displayed). You can style different speakers’ words with different colours, and there is basic support for styling individual words with different colours. For more information visit delphiki.com/webvtt/#cue-settings.
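The settings come at the end of the timing line as key:value pairs, so pulling them out is straightforward. This sketch splits them into a map and converts a percentage offset into pixels. The conversion follows the description above, in which L:-85% moves the cue 85 per cent of the media height up. The function names are hypothetical, and the 360-pixel video height in the test is an assumption. The parser doesn’t care what the keys are called.

```javascript
// Collect the settings after the end timestamp into a key -> value map,
// e.g. "00:03.000 --> 00:05.000 L:-85% S:150%" -> { L: '-85%', S: '150%' }.
function parseCueSettings(timingLine) {
  var afterArrow = timingLine.split('-->')[1] || '';
  var tokens = afterArrow.trim().split(/\s+/).slice(1); // drop the end timestamp
  var settings = {};
  tokens.forEach(function (token) {
    var colon = token.indexOf(':');
    if (colon > 0) settings[token.slice(0, colon)] = token.slice(colon + 1);
  });
  return settings;
}

// Convert a percentage of the media height into pixels; negative means "up"
// from the default position at the bottom of the video.
function percentOffsetToPixels(value, mediaHeight) {
  return parseFloat(value) * mediaHeight / 100;
}
```

For a video 360 pixels high, L:-85% works out to 306 pixels up from the bottom.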

More important than those stylistic options are the internationalisation options. The WebVTT spec builds in right-to-left support for languages such as Arabic and Hebrew, vertical text for languages such as Chinese, and the ability to add ruby annotations as pronunciation hints for Chinese, Japanese and Korean.

If you want to experiment with WebVTT, grab Playr and get writing. Opera’s Anne van Kesteren has written a live WebVTT validator to test your work.

Full-screen video

Everybody likes full-screen video. Everybody except the HTML5 specifiers, that is, who didn’t allow it for a long time. WebKit dreamed up its own JavaScript method called webkitEnterFullscreen(), and implemented it so that it could only be triggered if the user initiated the action, just as pop-up windows can’t be created unless the user performs an action such as a click.

In May 2011, WebKit announced it would implement Mozilla’s own flavour of a full-screen API. This API allows any element to go full-screen, not only <video>: you might want full-screen <canvas> games, or video widgets embedded in a page via an <iframe>. Scripts can also opt in to having alphanumeric keyboard input enabled during full-screen view. That means you could create your super spiffing platform game using the <canvas> API and it could run full-screen with full keyboard support.

As Opera likes this approach, too, we should see something approaching interoperability. Until then, we can continue to fake full-screen by going full-window, setting the video’s dimensions to equal the window size.
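Until the APIs converge, a script can try whichever full-screen method the element offers and fall back to full-window otherwise. This is a sketch under assumptions. The method names listed are the standard requestFullscreen plus the WebKit and Mozilla prefixed forms, and goFullscreen is a hypothetical helper. Like the real methods, it should be called from a user-initiated handler such as a click.

```javascript
// Full-screen methods to try, in order: the standard name, then WebKit and
// Mozilla prefixed forms, then WebKit's older video-only method.
var FULLSCREEN_METHODS = [
  'requestFullscreen',
  'webkitRequestFullscreen',
  'webkitRequestFullScreen',
  'mozRequestFullScreen',
  'webkitEnterFullscreen'
];

// Use the first method the element supports; otherwise fake it by filling
// the window. Returns which approach was used.
function goFullscreen(element, win) {
  for (var i = 0; i < FULLSCREEN_METHODS.length; i++) {
    var name = FULLSCREEN_METHODS[i];
    if (typeof element[name] === 'function') {
      element[name]();
      return name;
    }
  }
  // Full-window fallback: pin the element and size it to the window.
  element.style.position = 'fixed';
  element.style.top = '0';
  element.style.left = '0';
  element.style.width = win.innerWidth + 'px';
  element.style.height = win.innerHeight + 'px';
  return 'full-window';
}
```

For example: `button.onclick = function () { goFullscreen(video, window); };`.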

Synchronising media elements

The ability to synchronise media elements is still being specified, so it’s further away from implementation than synchronised captions and subtitles. It will allow several related media elements (video, audio, or a mixture of both) to be linked.

There are two main use cases for this. Imagine a site that shows videos of sporting events: there might be multiple video elements, each from a different camera angle – for example, one on each goal, one in the air and one tracking the ball. A site showing a concert might have one camera on the bass guitar, one on the guitar, one on the Peruvian noseflute. Moving the seek bar, or changing the playback rate to slow motion, on one video should affect each of the other videos.

Another important use case is accessibility. The <track> element allows us to synchronise text to a video. Synchronising media elements goes further: we can synchronise another video (for example, a movie of someone signing the words spoken in the main video) or an audio track that describes the action in a video during dialogue breaks.

There is a whole Controller API specified, but the easiest way to synchronise media elements will be declaratively, using the mediagroup attribute on <video> or <audio>. All those with the same value for mediagroup will be synchronised:

```html
<video mediagroup="jedward" src="bass-guitar.webm">..</video>
<video mediagroup="jedward" src="lead-guitar.webm">..</video>
<video mediagroup="jedward" src="idiot-1.webm">..</video>
<video mediagroup="jedward" src="idiot-2.webm">..</video>
```

This chunk of markup synchronises four cameras on different musicians at a Jedward concert. The following synchronises audio description with the popular film Mankini Magic:

```html
<video mediagroup="described-film" src="mankini-magic.webm">..</video>
<audio mediagroup="described-film" src="describe.ogg">..</audio>
```

This is still being specified, so there’s no browser support and (as far as I know) no polyfills.
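With no browser support or polyfill yet, one rough stopgap is to keep a group in step from script. The sketch below is not the Controller API. It reads the mediagroup attribute itself and copies currentTime and playbackRate from one leader element to the rest. The function names are hypothetical, and simple copying like this will drift during playback rather than staying frame-accurate.

```javascript
// Group elements by their mediagroup attribute value. `elements` is an array
// of objects with getAttribute(), e.g.
// Array.prototype.slice.call(document.querySelectorAll('[mediagroup]')).
function groupByMediaGroup(elements) {
  var groups = {};
  elements.forEach(function (el) {
    var name = el.getAttribute('mediagroup');
    if (!name) return;
    (groups[name] = groups[name] || []).push(el);
  });
  return groups;
}

// Copy the leader's position and playback rate to every other member, roughly
// what seeking or switching to slow motion on one video should do to the rest.
function syncGroup(group, leader) {
  group.forEach(function (el) {
    if (el === leader) return;
    el.currentTime = leader.currentTime;
    el.playbackRate = leader.playbackRate;
  });
}
```

In a page, you might call syncGroup from the leader’s seeked and ratechange events.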

Accessing camera and microphone

There are a few remaining uses for plug-ins that HTML5 so far hasn’t been able to usurp. Copy-protecting content with DRM is one (see our Q&As with John Foliot and Silvia Pfeiffer for more on that). Another is adaptive streaming (changing the bit rate and other parameters according to network conditions), although that’s being worked on.

Connecting to a device’s camera and microphone has traditionally been the territory of the Flash plug-in, but HTML5 now adds that facility too. Previously known as HTML5 <device>, this functionality is now wrapped in an API called getUserMedia. To tell the device what type of media we wish to get, we pass audio or video as arguments. Many devices have both a forward-facing camera, which captures the user’s image, and a rear camera, so we can also pass in the tokens user or environment.

First, we feature detect:

```javascript
if (navigator.getUserMedia) {
  // Request audio plus the user-facing camera, using the string of options described above
  navigator.getUserMedia('audio, video user', successCallback, errorCallback);
}
```

When we have the streams, we can use them as we need. Here, we’ll simply replicate the stream on the page by hooking it up to the source of a video element:

```javascript
var video = document.getElementsByTagName('video')[0];
...
function successCallback(stream) {
  // Hook the camera stream up to the video element
  // (later browsers expect video.srcObject = stream instead)
  video.src = stream;
}
```

Once we have this, it’s simple to copy the video into a canvas element. We use drawImage to grab the current frame of the video and redraw it every 67 milliseconds (approximately 15 frames per second). Once it’s in the canvas, you can access the pixels via getImageData.
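Here is a sketch of that copy loop. It assumes a video element that is already playing the camera stream and a 2D context obtained with canvas.getContext('2d'). The helper names are hypothetical. setInterval is used simply to match the 67-millisecond figure. Whatever you do with the pixels (face detection, in the example described next) happens in your onFrame callback.

```javascript
// Draw the current video frame into the context and return its pixels.
function captureFrame(video, ctx, width, height) {
  ctx.drawImage(video, 0, 0, width, height);
  return ctx.getImageData(0, 0, width, height);
}

// Redraw every 67 ms by default (about 15 fps) and pass each frame's pixels
// to onFrame. Returns a function that stops the loop.
function startCapture(video, ctx, width, height, onFrame, intervalMs) {
  var delay = intervalMs || 67;
  var timer = setInterval(function () {
    onFrame(captureFrame(video, ctx, width, height));
  }, delay);
  return function stop() {
    clearInterval(timer);
  };
}
```

In a page: `var stop = startCapture(video, canvas.getContext('2d'), canvas.width, canvas.height, function (pixels) { /* inspect pixels.data */ });`, then call stop() when you’re done.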

In an example by Richard Tibbett of Opera, the canvas is then accessed by JavaScript to perform face recognition – in real time! – and, once a face is found, to draw a magic HTML5 moustache in the right place.

getUserMedia is supported in Opera 12, Opera Mobile 12 and Canary builds of Chrome. Plenty more examples of getUserMedia can be found here.

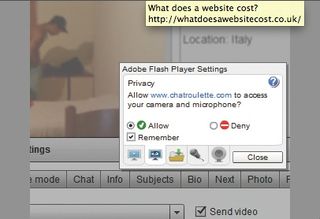

Obviously, giving websites access to your webcam could create significant privacy problems, so users will have to opt-in, much as they have to do with geolocation. But that’s a UI concern rather than a technical problem.

Of course, it’s just possible that the designers of the getUserMedia API had other uses in mind, besides drawing moustaches. It could be used for browser-based QR/bar code readers. Or, more excitingly, augmented reality. The HTML5 Working Group is currently specifying a peer-to-peer API which will allow you to hook up your camera and microphone to the <video> and <audio> elements of someone else’s browser, making it possible to do video conferencing.

WebRTC

In May 2011, Google announced WebRTC, an open technology for voice and video on the web, based on the HTML5 specifications. RTC stands for “real-time communication”, and is video-conferencing in the browser. It hooks up your camera and microphone to a <video> element on a web page in your friend’s browser (and vice-versa) over the HTML5 PeerConnection API.

WebRTC uses VP8 (the video codec in WebM) and two audio codecs optimised for speech, with noise and echo cancellation: iLBC, a narrowband voice codec, and iSAC, a bandwidth-adaptive wideband codec. As the project website says, “We expect to see WebRTC support in Firefox, Opera, and Chrome soon!”

As you can see, HTML5 multimedia support is about to get a whole lot richer. As usual with HTML5, the implementations need to catch up with the spec – and the specs need to be finished too – but the future looks exciting indeed.

For more on the future of video, see our Q&As with John Foliot and Silvia Pfeiffer.


The Creative Bloq team is made up of a group of design fans, and has changed and evolved since Creative Bloq began back in 2012. The current website team consists of eight full-time members of staff: Editor Georgia Coggan, Deputy Editor Rosie Hilder, Ecommerce Editor Beren Neale, Senior News Editor Daniel Piper, Editor, Digital Art and 3D Ian Dean, Tech Reviews Editor Erlingur Einarsson and Ecommerce Writer Beth Nicholls and Staff Writer Natalie Fear, as well as a roster of freelancers from around the world. The 3D World and ImagineFX magazine teams also pitch in, ensuring that content from 3D World and ImagineFX is represented on Creative Bloq.