Unity 2023: discovering the next-gen CG tech every artist needs

Exploring the latest updates to Unity, one of the leading real-time creation tools.

Unity is one of the world's leading CG platforms and can be used for everything from film VFX to video game creation. In recent years we've seen a huge upsurge in content creators utilising game engines like Unity (and Unreal Engine 5) to deliver their work to the public.

These CG engines have put unbelievably powerful tools into the hands of everyday artists and led to an explosion of highly rated games, films and visualisations. The Unity versus Unreal Engine debate continues, and you can read my Unreal Engine 5 review for a closer look at the latter. Here, though, I'll be looking at the recent features that make Unity one of the best platforms for content creation. (You may want one of the best laptops for game development if you're serious about using Unity or Unreal Engine.)

This democratisation, from developers to content creators, shows no signs of stopping. Evidence includes the AI creator Unity teased at this year's GDC and its acquisition of two major players, which brought Weta Digital's tools (the studio now operates as Weta FX) and Ziva under its ever-expanding wing. For artists, the result of these moves is access to world-class, industry-proven tools and workflows that would otherwise be unattainable.

Unity is bringing pro VFX tools to everyone

Weta's toolset, for example, is world-renowned, and many artists could only have dreamed of using those exact same tools in their own projects. The time for dreaming is over. A new era is dawning, and it seems to be centred firmly on global real-time engines like Unity and Unreal Engine.

The acquisition of Ziva is another example of this democratisation. By making the computer take the lion’s share of the hard work, Ziva makes it possible for all artists, experienced or not, to create game-changing animations.

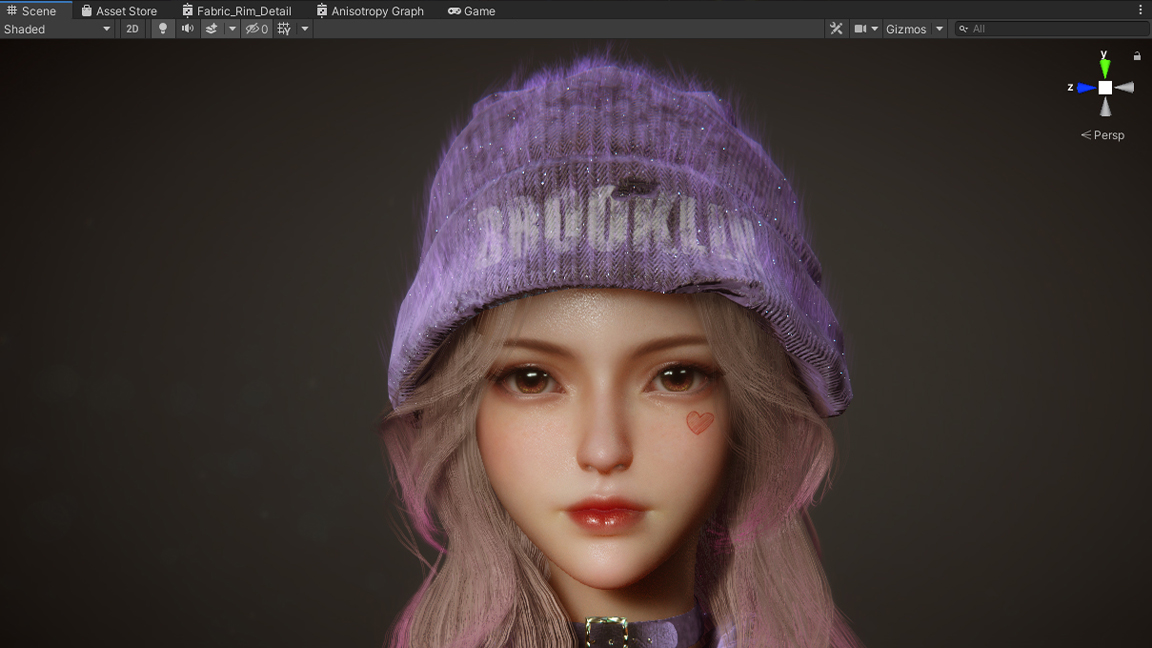

Its capabilities were showcased in Unity's latest demo, Enemies. Enemies builds on Unity's previous demo, The Heretic, released in 2020, which began the work of developing new tools, pipelines and tech to enable artists to create a new generation of digital humans. The quality of these demos might seem beyond many of us, but in reality they drive forward the development of powerful, user-accessible tools that enable smaller, non-AAA teams to deliver breathtakingly realistic work.

Unity's vision is to make it possible for anyone to create what they want, when they want. The company talks about giving content creators superpowers by making a plethora of deep tools available and accessible to everyone. That vision has yet to be fully realised, but Unity is clearly committed to it. Let's take a look at some of the next-gen technology we've already started to see, and that we'll be encountering in the not-too-distant future.

Unity adopts Weta Digital's VFX tools

It was in November 2021 that the news became public: Unity had acquired Weta Digital. It was difficult for many to grasp what this acquisition really meant. Most people knew this New Zealand-based VFX company through game-changing cinematic masterpieces such as Avatar, Wonder Woman, The Lord of the Rings and King Kong. What on earth could the maker of one of the biggest real-time game engines want with a VFX company?

It seems that Unity wanted to fast-track its tool development. Why develop all the tools you want from scratch when they already exist? The acquisition brought together a world leader in real-time development and an innovator in digital entertainment.

The coming together of these two heavyweights should bring a plethora of tried-and-tested VFX tools to artists, drastically speeding up workflows and enabling artists all around the world to create incredible content. Unity is now a serious competitor to specialist VFX software like Houdini and Cinema 4D.

Weta’s toolset has been industry-honed over the last 15 years and Unity now owns it all. Each tool, on its own, is capable of delivering amazing results, but their full power lies in their ability to be utilised together. The potential of what can be created through these tools is going to be scary once Unity has managed to make them all accessible. Let’s take a whistle-stop tour of some of these tools.

First up, there are the two core renderers, Manuka and Gazebo. Manuka is a path-tracing renderer and Gazebo is an interactive renderer. It will be interesting to see whether these are integrated directly into Unity, considering it already has its own rendering tools, but I'd expect Gazebo to really stretch what is possible in Unity, especially for pre-visualisation and virtual production.

Next up are some of the tools that drive the creation of worlds and everything in them. Tools such as Scenic Design and Citybuilder will drastically speed up the creation of procedural worlds. Alongside Lumberjack and Totara for vegetation, artists will be able to design and create worlds that would otherwise be beyond reach.

Finally, Weta brings other tools, including Loki for physics-based simulations, Barbershop for hair and fur, and Koru for advanced puppet rigging. These tools cover the whole digital workflow, put Unity in an extremely strong position relative to its competitors and make it easily comparable to the best 3D modelling software.

It will be interesting to see how these tools are made available, especially because some of them seem to be in direct competition with tools already available in Unity or Ziva. The intention seems to be to make them available in the cloud, so artists can use them directly inside their preferred digital content creation (DCC) tools, such as Maya and Houdini.

This approach will put the power in the hands of the artists as they’ll be able to pick and choose the tools that work for them. Alongside these tools, artists will also gain access to a huge asset library, including environments, humans, objects, materials and more. The promise of this library increasing as Weta FX creates more content is particularly exciting.

Digital humans are now easier to create in Unity

Another area of content creation that has seen sustained, concerted investment is digital humans. Weta's tools will obviously enhance this area, but Unity has bolstered, and is looking to further bolster, its offering in other ways too.

The most notable of these is its acquisition of Ziva, announced only two months after Weta came on board. Ziva had already been involved in a number of creations, including Hellblade: Senua's Sacrifice and Godzilla vs. Kong, so it was hardly surprising that Unity took an interest in this physics-based character simulation platform.

Since its inception, Ziva has been instrumental in enabling animators of various skill levels to create their own lifelike characters. Its vision of placing these industry-defining tools into the hands of the masses dovetails perfectly with Unity's plans.

All of that incredible tech is gradually working its way into the hands of Unity users, and the Enemies demo gave a glimpse of what will be possible when it arrives. In terms of next-gen tech, ZivaRT and Ziva Face Trainer are hugely exciting. ZivaRT uses machine learning to deliver cinematic-quality characters to real-time platforms. With this technology, artists can take advantage of the latest progress in facial animation and body deformation.

This will further close the gap between what is possible in films compared to video games. Ziva Face Trainer is another technology driven by machine learning that transforms an artist’s 3D face models into animation-ready faces. This is a prime example of Unity’s vision to make world-class tools easily accessible to all.

Artists only have to map their head model to a generic face mesh before uploading that mesh to the Ziva cloud portal. While they sit back, Ziva's system applies over 72,000 expressions to the face using 4D data sets and synthetic data, then makes the result available for download. The tech driving it is next-level, yet it is relatively straightforward to use.

To see the current state of Unity's digital human work, simply download its Digital Human package and take a look. The package includes the tech developed for The Heretic demo, including a range of facial animation systems, a skin attachment system, shaders and rendering to boot.

Create beautiful scenes easier in Unity

As you’d expect, Unity has also improved its render pipelines, including its High Definition Render Pipeline (HDRP). The Universal Render Pipeline (URP) enables optimisation of graphics across all platforms, but the HDRP pushes the boundaries in terms of the capabilities of next-generation rendering.

Through HDRP, artists can create dynamic environments using Physically Based Sky, Cloud Layers, Volumetric Fog, Adaptive Probe Volumes, and the Volume System. The HDRP also boasts a powerful new Water System that works out of the box, enabling artists to create oceans, seas, rivers, lakes, and pools. Considering that it has only been in development for around a year or two, the results achievable are truly impressive.

The HDRP, like the URP, is built on Unity's Scriptable Render Pipeline (SRP), which enables users to write C# scripts that control how Unity renders each frame. This further extends the capabilities of rendering within Unity and makes it increasingly powerful.

Unity has always been about development, but it seems in recent years it has supercharged its commitment to this. Having the cash to acquire both Weta and Ziva has helped to democratise world-class tech and get it into the hands of artists of varying skill levels.

Unity AI was teased at this year's Game Developers Conference (GDC) in San Francisco. In the same week that Adobe announced its Firefly AI and Nvidia revealed it is working with key partners to deliver its Picasso AI, Unity shared its vision for its own AI: simply type in detailed instructions and Unity will create complex scenes, rigged and set for games, VFX, previz and more. That's the pitch, at least; Unity has yet to show the full AI in action, but it could really open up game development to everyone.

The destination is still a long way off and there's a lot of hard work to be done. Making all of these tools available in a meaningful way will take time, energy and commitment, but that is something Unity isn't lacking. If Unity manages to break the back of it, in a few years' time it'll be a force to be reckoned with.

This article was originally published in issue 297 of 3D World Magazine. You can subscribe to 3D World at the Magazines Direct website and get 3 issues for £3. The magazine ships internationally.

Paul is a digital expert. In the 20 years since he graduated with a first-class honours degree in Computer Science, Paul has been actively involved in a variety of tech and creative industries that make him the go-to guy for reviews, opinion pieces and featured articles. With a particular love of all things visual, including photography, videography and 3D visualisation, Paul is never far from a camera or other piece of tech that gets his creative juices going. You'll also find his writing in other places, including Creative Bloq, Digital Camera World and 3D World Magazine.

- Ian Dean, Editor, Digital Arts & 3D