How videogame graphics and movie VFX are converging

The technology and techniques driving game graphics forwards.

Convergence has been a key buzzword across the 3D industry over the last few years. The worlds of game graphics and movie VFX are advancing, and with this change, a natural crossover is happening. We're now seeing that the production methods used in the creation of digital art for movies and games are very similar.

This has been partly triggered by the rise of new technologies such as VR, and by both fields using the same tools, such as Maya and ZBrush. Yet it wasn't that long ago that a single plant in the film Avatar had more polygons than an entire game environment.

Motion capture in games

Motion capture is a big area where we're seeing more and more convergence. I still get excited teaching PBR texturing to my students, knowing I am using implementations of Disney's principled shading model, with its GGX specular term, in real-time engines.

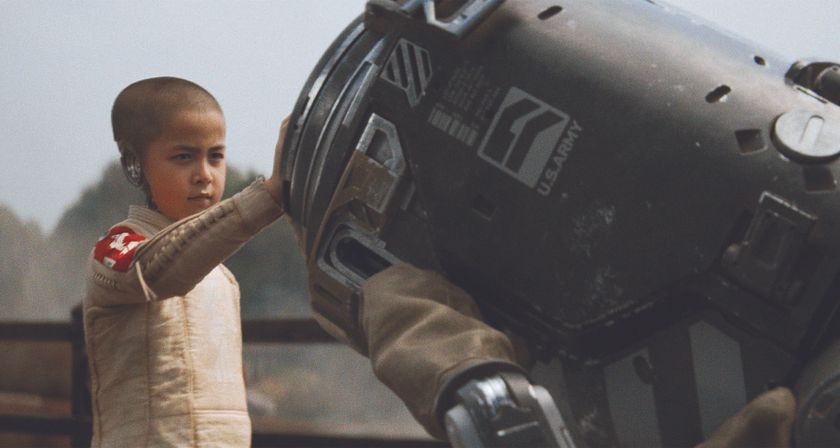

Motion capture in games has allowed a more complex world of storytelling to evolve, with technology allowing game makers to create characters who are relatable and human in their facial expressions and physical mannerisms.

Just look at the work of Imaginarium on Squadron 42, Naughty Dog's The Last of Us and Guerrilla Games' Horizon Zero Dawn. Developing game characters through motion capture allows these games to explore wider human themes.

Real-time rendering technology

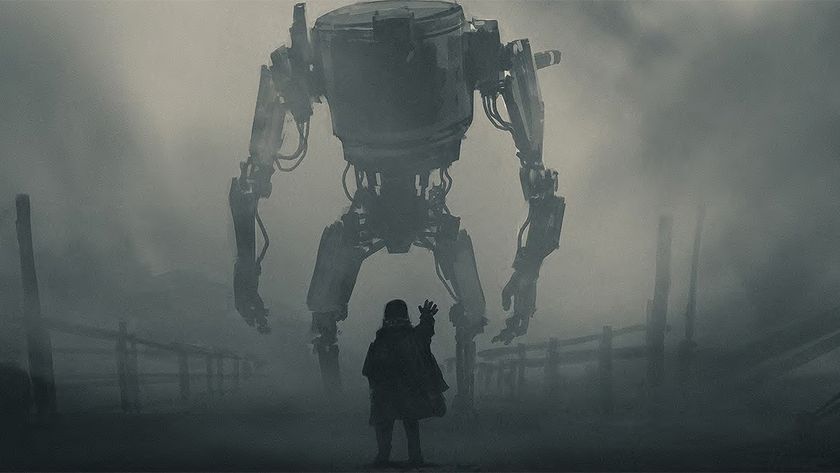

One of the other big developments in this space, which we've seen most recently at events such as the Game Developers Conference (GDC), is the power of real-time rendering.

Epic's Unreal Engine has really stolen a march on this, and Epic recently teamed up with The Mill and Chevrolet to demonstrate the engine's potential with the short film The Human Race. Merging live-action storytelling with real-time visual effects, the film showcases how far real-time rendering in a game engine can now be pushed.

The fact that ILM used a similar approach for some of the scenes in Rogue One: A Star Wars Story, with a tweaked version of Unreal Engine 4, just adds to the engine's credentials. The team used this technology to bring the droid K-2SO to life in real time.

While at the moment this technique is predominantly being used for hard surfaces, it's surely only a matter of time before it supports more diverse objects.

We're also seeing companies such as performance capture studio Imaginarium expanding to adapt to this change, with Andy Serkis recently unveiling Imaginati Studios, a game development studio with a focus on real-time solutions using Unreal Engine 4.

Photogrammetry in games

Another area where there is a real convergence and crossover of talent is photogrammetry, which involves photographing an object from many angles and converting that data into a stunningly realistic, fully textured digital model.

Creating game assets from photographs may not be new, but the process has now reached the kind of standards we are used to seeing in film production.

From DICE's incredibly realistic recreation of the Star Wars universe in Star Wars Battlefront to Crytek's Ryse: Son of Rome, the bar for video game graphics keeps getting higher. It might be hyperbolic to suggest the visuals of Ryse are on a par with the classic film Gladiator, but it is nevertheless a stunning realisation of ancient Rome.

The Vanishing of Ethan Carter is another game that uses photogrammetry to great effect. Epic Games' Paragon, where photoshoots were used to capture HDR lighting on hair and skin, is another fantastic example.

Some of the most compelling-looking graphics in games were created with photographic processes, and photogrammetry has played a huge part in driving game graphics forward.

Epic Games used Agisoft PhotoScan to capture its images, but there are many issues native to the photogrammetry process that need to be solved. Dealing with reflections in photos of objects and poor lighting can be a real challenge, and can reduce the realism of the final output.

But this is where technology and artistry come together. In the fantastic blog post Imperfection for Perfection, technical artist Min Oh outlines Epic Games' process, detailing the use of colour checkers and the capture of lighting conditions using VFX-standard grey and chrome balls.
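To give a flavour of why a grey ball is useful on a shoot, here is a minimal Python sketch of one common idea: because the ball is known to be neutral, any colour cast in its pixels can be measured and divided out of the whole photo. This is an illustration only, not Epic Games' actual pipeline. The function names and sample values are hypothetical, and it assumes linear RGB values between 0 and 1.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # linear RGB, each channel in 0..1 (assumption)


def mean_rgb(pixels: List[Pixel]) -> Pixel:
    """Average colour of a list of pixels, e.g. those covering the grey ball."""
    if not pixels:
        raise ValueError("need at least one pixel")
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def grey_gains(grey_sample: Pixel) -> Pixel:
    """Per-channel gains that make the grey sample neutral.

    The target level is the sample's own mean, so overall brightness
    is kept roughly the same and only the colour cast is removed.
    """
    if min(grey_sample) <= 0:
        raise ValueError("grey sample channels must be positive")
    target = sum(grey_sample) / 3.0
    return tuple(target / channel for channel in grey_sample)


def apply_gains(pixels: List[Pixel], gains: Pixel) -> List[Pixel]:
    """Scale every pixel by the same per-channel gains."""
    return [tuple(ch * g for ch, g in zip(p, gains)) for p in pixels]


# Hypothetical pixels sampled from the grey ball under warm light.
ball_pixels = [(0.24, 0.18, 0.12), (0.26, 0.18, 0.10)]
gains = grey_gains(mean_rgb(ball_pixels))

# Applying the gains to the ball's average colour should give a neutral grey.
balanced_ball = apply_gains([mean_rgb(ball_pixels)], gains)[0]
```

The same gains would then be applied to every pixel of the photos taken under that lighting, so that textures extracted from them start from a neutral baseline.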

Other inspiration comes from the team at DICE, who overcame lighting issues when capturing Darth Vader's helmet by removing light information from source images.

Famed VFX supervisor Kim Libreri, who is now CTO at Epic Games, predicted in 2012 that graphics would be indistinguishable from reality within a decade. And a few years on, it seems like we're well on our way.

Simon Fenton is head of games at Escape Studios, which runs courses including game art and VFX.

This article originally appeared in 3D World issue 223.
