How the intelligent web will change our interactions

Explore the technological breakthroughs that mark the beginnings of a truly emotionally intelligent web.

From emotionally cognisant AI friends to experiences that respond to your body language, we're about to go on a tour of some of the most advanced and exciting forms of future human-computer interaction, powered by the emotionally intelligent web.

To understand this future, we first have to turn to our distant past. Roughly two million years before the first human, in Africa, we find an early precursor to man: the hominid. At this time, many human-like species were facing extinction. Hominids were under intense evolutionary pressures to survive, competing fiercely with other groups for scarce resources.

When we examine the next 100,000 generations of the hominid, something amazing happens: their brains triple in size. Hominids were adapting to survive. The amazing thing is how they were learning to do it. Most of this new neural volume was dedicated to novel ways of working together: cooperative planning, language, parent-child attachment, social cognition and empathy. In the evolution of the brain, the moment we learnt to use tools was not the most physiologically significant: it was the need to work together to survive that drove the programming of our brains.

Let's fast forward

Some 60 years ago, researchers interested in the characteristics that might predetermine professional success ran a study with 80 PhD students. They asked them to complete personality tests, IQ tests and interviews. Forty years later they contacted the study subjects again and evaluated their professional successes. Contrary to what we might expect, they found the correlation between success and intelligence was unremarkable. The skill of emotional intelligence was four times more important in determining professional success than IQ.

Emotional intelligence is the ability to detect, understand and act on the emotions of ourselves and others. From the day we're born to the day we die, the deep wiring of the hominid brain preconditions us to understand and be understood in emotional terms.

No wonder we often feel frustrated with the web of today: in stark contrast to our natural programming, the modern web deals in binary terms. Our frustrations are deeply rooted in the feeling that technology doesn't truly know what we're trying to ask of it.

For a long time, an emotionally intelligent web has felt like science fiction. But break it down into its individual components – the ability to detect, recognise, interpret and act on emotional input – and we start to understand how emotional intelligence might be programmed. Let's see how people are doing this today.

Natural language

For the past 100 years we've had to augment the way we naturally communicate in order for machines to understand what we mean – from binary switches to MS-DOS, to point-and-click interfaces. However, every few years we see a quantum leap forward that allows us to communicate with machines in more natural ways.

Let's start with some of the simpler executions. Meet Amy, a bot you can copy in on any email who will help you schedule meetings. Her sophistication is twofold: firstly, she understands the complexities in how you speak ('I can't do this week but what about next week at the same time?') and secondly she is able to reply in ways that demonstrate emotional cognition.

Far more interesting than the overly-hyped Facebook chatbots (a technology that has been available for decades) are conversational bots driven by deeper learning. We're all aware of 'Tay', Microsoft's experiment-turned-millennial-neo-Nazi chatbot. But we may be less familiar with experiences like DeepDrumpf: a Twitter bot using a neural network that has been trained to analyse the content of Donald Trump's tweets and create original tweets to emulate him.

Consider Xiaoice – so amazing, she deserves a whole paragraph of her own. Xiaoice is an advanced natural language chatbot developed by Microsoft and launched in China, where she runs on several Chinese services like Weibo. But she is no longer just an experiment: she reached 1 per cent of the entire population within one week of launch and is used by 40 million people today. Unlike task-driven Siri, users talk with Xiaoice as if she were a friend or therapist. Amazingly, using sentiment analysis, she can adapt her phrasing and respond based on positive or negative cues from her human counterparts. She listens with emotional cognition and is learning how to reply accordingly. This is huge.
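To make the idea of replying to positive or negative cues concrete, here is a deliberately tiny Python sketch. It is not how Xiaoice works: the word lists, the function names `sentiment_score` and `choose_reply`, and the canned replies are all assumptions invented for illustration. A production chatbot would rely on a trained model rather than a hand-written lexicon.

```python
import re

# Hypothetical toy lexicons (assumptions for illustration only).
POSITIVE = {"great", "happy", "love", "good", "excited"}
NEGATIVE = {"sad", "tired", "hate", "bad", "lonely"}


def sentiment_score(message: str) -> int:
    """Return the number of positive cue words minus negative cue words."""
    words = re.findall(r"[a-z']+", message.lower())
    positives = sum(word in POSITIVE for word in words)
    negatives = sum(word in NEGATIVE for word in words)
    return positives - negatives


def choose_reply(message: str) -> str:
    """Pick a reply whose tone matches the detected sentiment."""
    score = sentiment_score(message)
    if score > 0:
        return "That sounds wonderful. Tell me more!"
    if score < 0:
        return "I'm sorry to hear that. Do you want to talk about it?"
    return "I see. How are you feeling about it?"


if __name__ == "__main__":
    print(choose_reply("I feel sad and lonely today"))
```

Even at this scale the loop is visible: detect the cue, interpret it as a score, then act on it by changing the phrasing of the response.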

World-famous author and educator Peter Drucker once said: "The most important thing in communication is hearing what isn't said." In the futuristic movie Her, the protagonist's artificially intelligent OS understands him from the smallest of his sighs. Today we have Beyond Verbal, a live sentiment analysis tool driven by voice, which can detect complex emotions such as alertness, positivity, excitement and boredom, and adapt experiences purely through the intonation of your voice.

Facial language

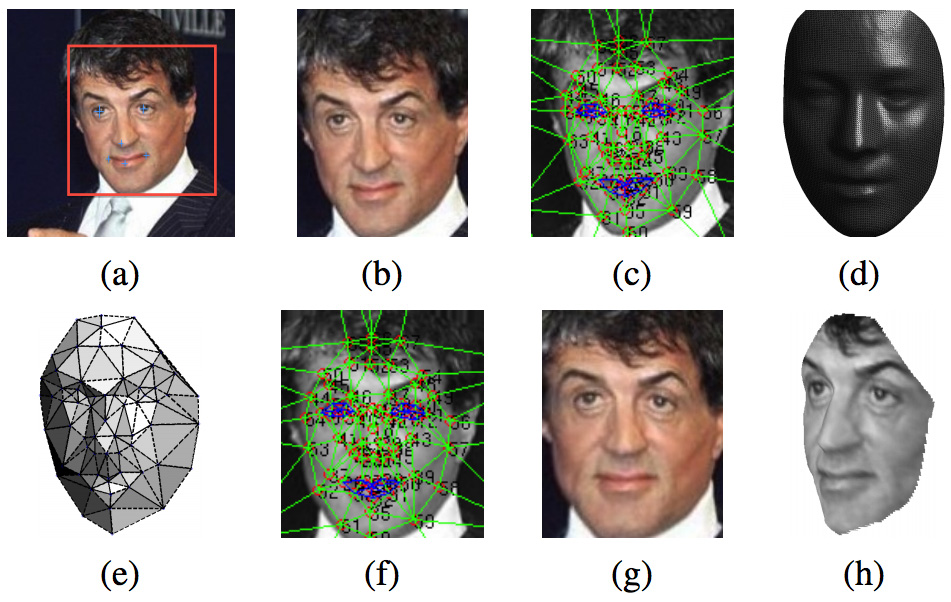

Facial expressions are some of the most powerful evolutionary tools we have at our disposal. We use them to naturally decode and transmit social information at incredible speed. Facial recognition technology is already used by social networks to do things like automatically detect your friends in photographs (Facebook's DeepFace) and create fun facial effects (Snapchat).

Based on these building blocks, we have experiences like Kairos: a facial recognition tool that watches the micro-movements in your face to understand your emotions with incredible accuracy. Microsoft, EmoVu and many others have APIs for emotional recognition that you can use today. Imagine an emotionally intelligent BuzzFeed that can adapt based on the articles it sees you enjoying by watching your face. These APIs make experiences that can adapt based on a user's emotional state increasingly possible. And as Google works with Movidius, and Apple acquires Emotient in order to bring emotion-sensing to the forward-facing camera, they're becoming increasingly likely.

Body language

The notion that the web might one day be able to better tailor experiences based on our body language might seem like science fiction. However, the technologies to do so are here today. Plug Granify into your website and you can attempt to detect a user's emotion based on the micro-movements of their cursor. With its Pre-Touch technology, Microsoft recently demonstrated how its future devices can respond contextually, before your finger has even touched the screen, allowing interfaces to truly adapt to body language.

What's more, wearables constantly measure the minute kinetic and physiological changes in the body to create uniquely identifying information that allows for infinitely customisable personalisation. Spire and Feel are two wearables that purport to be able to track your mood and create unique experiences based on how you're feeling. Nymi can detect the speed and rhythm of your heart. All three of these devices empathise with the natural, evolutionary language of the body to create a new receptive user experience.

The next 9,000 days

The web is roughly only 9,000 days old. In that time we have witnessed some of mankind's greatest achievements leveraging the power of the internet. As we move into the next 9,000 days, how will we as creators continue to make new empathic experiences, designed to better understand and empower the next generation of the human?

This article originally appeared in net magazine.
