How the BBC builds websites that scale

Here are seven techniques the BBC uses to create sites and apps that can handle millions of users simultaneously.

This article was originally published in net magazine in 2016.

In 1948, the Olympic Games were broadcast live on television for the first time. Somehow, in post-war austerity with scarce resources and little money, the BBC broke technological boundaries. Live coverage came from two venues in North London, in what was at the time the largest outside broadcast ever made.

It was a milestone in sports coverage: the beginning, you could say, of a revolution that led to the limitless sport available on TV and online today.

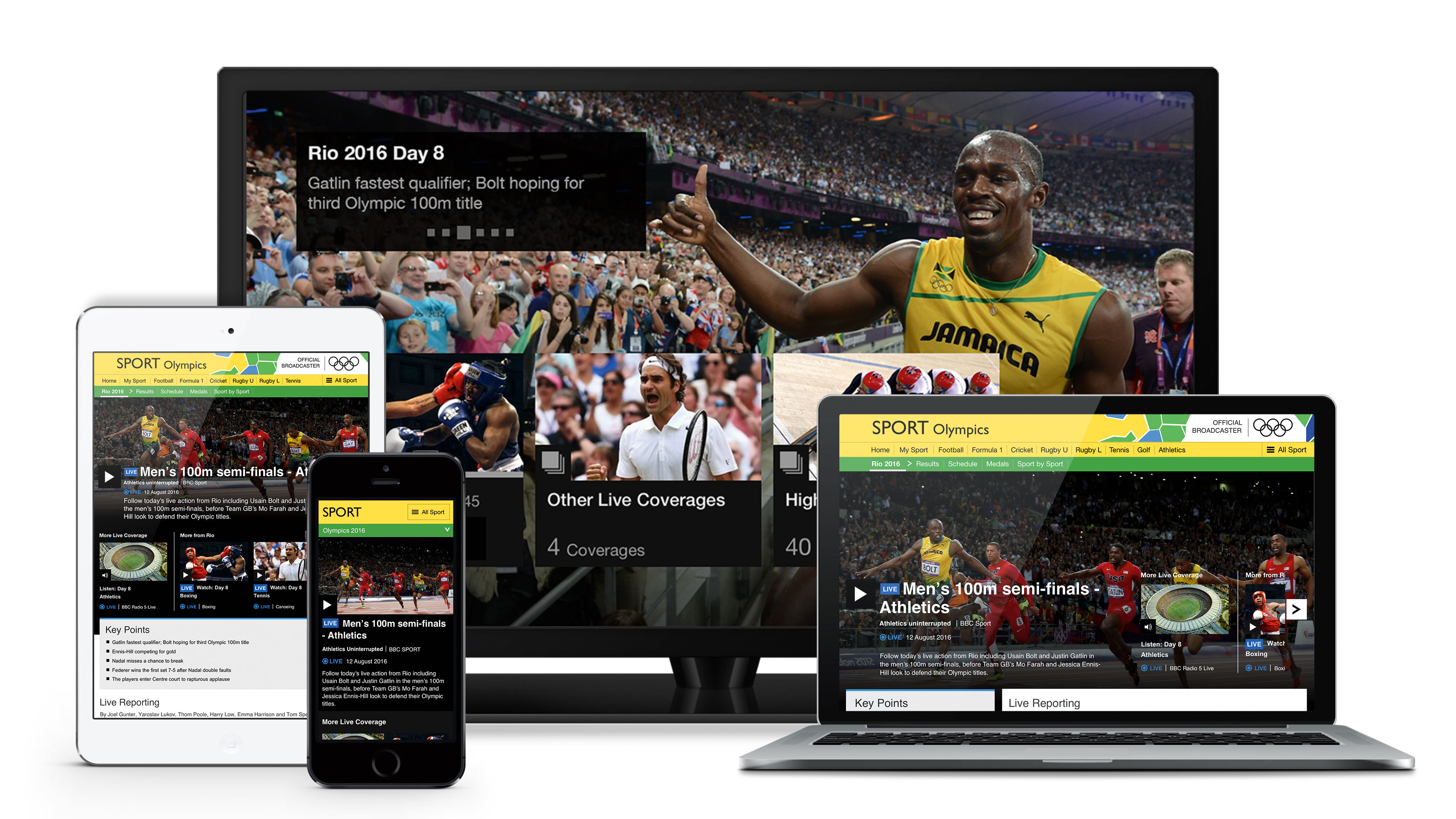

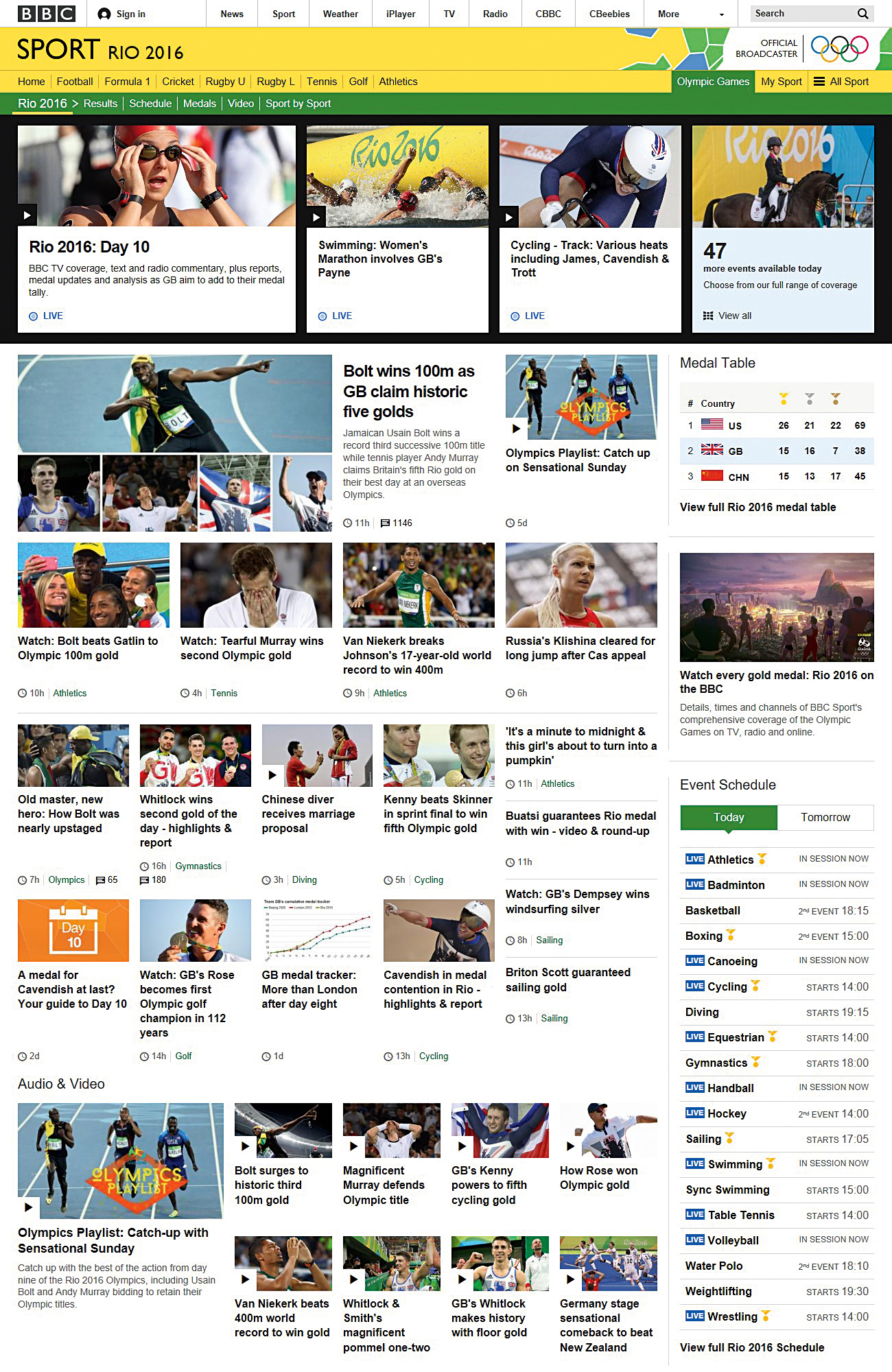

Almost 70 years on, we at the BBC continue to push the boundaries of how we bring the Olympics to the UK and the world. And today, of course, it’s more than TV. A wide range of Olympic content is offered over the BBC’s websites and apps, and it’s phenomenally popular. Over 100 million devices accessed our Rio 2016 site.

Offering a great online service to over 100 million devices is not easy. High levels of web traffic put a huge load on the underlying systems. Fortunately, we were prepared. We knew the summer of 2016 was going to be our biggest ever, and made sure we had a website that could scale to handle it. This article explains how we did it.

01. Caches are your best friend

Let’s start with the basics. A cache is the single most important technology in keeping sites scalable and fast. By storing copies of data and providing them when they're next needed, caches reduce the number of requests that make it to the server. And that allows the server to handle more users.

Everyone knows the cache inside a web browser. It’s perfect for any common content between web pages, such as CSS. As a user moves between pages on your site, the browser’s cache will ensure they don’t repeatedly download the same file. This means there are fewer requests to your web servers, freeing up capacity to handle more users.

Unfortunately, browser caches aren’t shared between users, so they don’t solve the scaling problem on their own. In other words, if a million users are accessing your site, there are a million browser caches to fill. This means the load on web servers can still be significant. That’s why most websites will also have their own cache, which may or may not be part of the web server.

Caches are extremely efficient and can respond to user requests more quickly, which allows more users to be served. For example, a good web server will usually be able to serve 1,000 requests a second for content that is cached, but may not manage that for content that has to be generated each time.
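To make the idea concrete, here is a minimal sketch of a server-side cache that sits in front of page generation. It is an illustration only, not the BBC's code: the `PageCache` class, the `render_page` function and the time-to-live value are all hypothetical names and numbers chosen for the example.

```python
import time
from typing import Callable, Dict, Tuple


class PageCache:
    """Tiny in-memory cache: each entry expires after ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, path: str, render: Callable[[str], str]) -> str:
        now = self.clock()
        entry = self._store.get(path)
        if entry is not None and entry[0] > now:
            # Fresh copy available: no page generation needed.
            self.hits += 1
            return entry[1]
        # Missing or expired: generate the page once and keep a copy.
        self.misses += 1
        body = render(path)
        self._store[path] = (now + self.ttl_seconds, body)
        return body


def render_page(path: str) -> str:
    # Stand-in for expensive work (templates, database and API calls).
    return f"<html><body>Content for {path}</body></html>"


if __name__ == "__main__":
    cache = PageCache(ttl_seconds=30)
    for _ in range(1000):
        cache.get("/sport/olympics", render_page)
    print(cache.hits, cache.misses)
```

With a 30-second time-to-live, a thousand requests for the same page in quick succession trigger page generation only once; the rest are answered from the stored copy.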

02. Use a CDN

Web server caches are great, but they have their limits. There will always be a point at which the server cannot cope with the load. To efficiently scale to almost any level, use a content delivery network (CDN). CDNs are more widely available than ever, as they are now part of all major cloud services on a pay-as-you-go model.

A CDN is essentially a huge cache: a store of your content that can be served to users without the need to access your servers. CDNs are particularly great for content that does not change often, such as images, video or JavaScript files. In fact, multiple CDNs now offer to serve common files such as jQuery for free.

CDNs have the extra advantage that they distribute the content worldwide. This allows your site to be just as fast internationally as it is locally. It’s another reason why – if you can afford it – you may want to use a CDN for your entire site.
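A CDN generally decides how long to keep a copy of a response from the HTTP `Cache-Control` header your server sends. The sketch below shows one way a server might choose that header. The `cache_control_for` function, the extension list and the specific lifetimes are assumptions made for illustration; suitable values depend on your content and your CDN.

```python
import posixpath

# Hypothetical list of file types treated as long-lived static assets.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".svg", ".woff2", ".mp4"}


def cache_control_for(path: str, personalised: bool = False) -> str:
    """Pick a Cache-Control header value for a response."""
    if personalised:
        # Per-user content must never be stored in a shared cache.
        return "private, no-store"
    extension = posixpath.splitext(path)[1].lower()
    if extension in STATIC_EXTENSIONS:
        # Rarely-changing assets: let browsers and the CDN keep them for a year.
        return "public, max-age=31536000, immutable"
    # Pages that change often: short lifetime in browsers (max-age),
    # slightly longer in shared caches such as a CDN (s-maxage).
    return "public, max-age=30, s-maxage=60"


if __name__ == "__main__":
    for example in ("/static/site.css", "/sport/olympics", "/account"):
        print(example, "->", cache_control_for(example, example == "/account"))
```

Even a lifetime of a minute on a busy page means the CDN, not your servers, answers almost every request for it.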

03. Add more servers

Caches (including CDNs) are great for content that normally stays the same. But if content changes frequently, or from person to person, a cache is limited in what it can safely do. If a web page includes a user’s sign-in details, for example, a cache cannot share it, or else one user may see another user’s details.

All this means that, even with the best caches and CDNs, web servers still have to pick up much of the work. So you’ll likely need several of them. Having multiple servers allows the work to be shared, and also prevents a server failure from breaking your site. A load balancer then ensures each one takes its share of the work.
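The simplest load-balancing strategy is round robin: hand each new request to the next server in the list, skipping any that are known to be down. The following is a sketch of that idea under stated assumptions; the `RoundRobinBalancer` class and the server names are hypothetical, and in practice you would normally use the load balancer your hosting provider offers rather than write your own.

```python
from typing import List, Set


class RoundRobinBalancer:
    """Cycle through servers in order, skipping ones marked unhealthy."""

    def __init__(self, servers: List[str]):
        if not servers:
            raise ValueError("at least one server is required")
        self.servers = list(servers)
        self.unhealthy: Set[str] = set()
        self._next = 0

    def mark_down(self, server: str) -> None:
        self.unhealthy.add(server)

    def mark_up(self, server: str) -> None:
        self.unhealthy.discard(server)

    def pick(self) -> str:
        # Try each server at most once per call, starting where we left off.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server not in self.unhealthy:
                return server
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
    balancer.mark_down("web-2")  # simulate a failed server
    print([balancer.pick() for _ in range(4)])
```

Because the failed server is skipped, the remaining ones absorb its share and the site stays up.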

If your servers are running on the cloud, take advantage of the fact that new virtual machines can be requested on demand, and paid for by the minute or hour. This elastic nature of most cloud providers allows you to have more web servers when traffic is high, and fewer when it’s quiet.
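The arithmetic behind this kind of elastic scaling can be very simple. As a rough sketch, the function below estimates how many servers to run for a given traffic level. The function name, the 25 per cent headroom and the minimum of two servers are illustrative assumptions, not figures from the BBC.

```python
import math


def servers_needed(requests_per_second: float,
                   capacity_per_server: float,
                   min_servers: int = 2,
                   headroom: float = 0.25) -> int:
    """Estimate the server count for the current traffic level.

    headroom adds spare capacity for sudden spikes; min_servers keeps
    the site running if a single server fails during quiet periods.
    """
    if capacity_per_server <= 0:
        raise ValueError("capacity_per_server must be positive")
    required = requests_per_second * (1 + headroom) / capacity_per_server
    return max(min_servers, math.ceil(required))


if __name__ == "__main__":
    # Quiet night versus a big sporting final (made-up traffic figures).
    print(servers_needed(50, capacity_per_server=100))
    print(servers_needed(1000, capacity_per_server=100))
```

Running a calculation like this on a regular schedule, against measured traffic, is the essence of auto-scaling: the count rises for the busy moments and falls back afterwards.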

As an example, we at the BBC have hundreds of cloud-based web servers, the exact number continually changing based on traffic levels to different parts of the site. We’ve learned that other systems must be able to scale too. A database or API that the site uses, for example, will also need to handle moments of high traffic.

04. Optimise page generation

For all but the simplest of sites, there is some dynamic generation: code that runs to create the contents of a page. Optimising this process can allow a web page to be created quicker, which enables a server to handle more requests. Consider:

- Can the code be changed to be more efficient? Is it handling more data than necessary, or creating more content than is actually shown?

- Are there any database or API calls that can be made faster or removed?

- Could any content be prepared ahead of time and stored in a database, file or cache?
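As one example of removing repeated calls, the sketch below uses Python's standard `functools.lru_cache` to remember the result of a slow lookup, so a page that mentions the same story several times fetches it only once. The `load_headline` and `render_index` functions are hypothetical stand-ins for a real database or API call and a real template.

```python
import functools
from typing import Iterable


@functools.lru_cache(maxsize=128)
def load_headline(story_id: int) -> str:
    # Stand-in for a slow database or API call.
    return f"Headline for story {story_id}"


def render_index(story_ids: Iterable[int]) -> str:
    # Repeated IDs are answered from the in-memory cache, not the backend.
    return "\n".join(f"<li>{load_headline(s)}</li>" for s in story_ids)


if __name__ == "__main__":
    render_index([1, 2, 1, 2, 3])
    print(load_headline.cache_info())  # shows how many calls were avoided
```

Note that `lru_cache` entries never expire on their own, so this approach suits data that rarely changes. For content that updates often, a cache with a time limit is the safer choice.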

05. Split the work

The strong JavaScript support in today’s browsers is a resource we can make use of. By getting the client – the in-page JavaScript – to do some work, we can reduce how much is happening on the server. This can have a number of benefits.

You can make fewer page requests by, for example, filtering rows of a table in JavaScript rather than on the server. Also, rather than having large pages that take time to create, consider making smaller ones that only include the initial content, then use JavaScript and APIs to load additional content separately. Facebook does this extensively.

Finally, it enables you to offer the same thing to everyone. If a page has small parts that are unique to a user (e.g. a shopping cart or recommendations), arrange for those parts to be added client-side. This way the base page can be the same for everyone, so it can be cached and the web server won’t have to recreate it for every user.
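On the server, this split might look something like the sketch below: one response that is identical for every user and safe to cache, and a small separate API response that carries the per-user part. The function names, the `/api/cart` URL and the header values are assumptions made for illustration; the in-page JavaScript that would fetch the cart data and fill in the placeholder is not shown.

```python
import json
from typing import Dict, List, Tuple

Response = Tuple[Dict[str, str], str]  # (headers, body)


def base_page() -> Response:
    """Same output for every user, so caches and CDNs can store it."""
    headers = {
        "Content-Type": "text/html; charset=utf-8",
        "Cache-Control": "public, max-age=60",
    }
    body = (
        "<html><body><h1>Shop</h1>"
        # Empty placeholder; client-side JavaScript fills it from data-src.
        '<div id="cart" data-src="/api/cart"></div>'
        "</body></html>"
    )
    return headers, body


def cart_api(user_id: str, carts: Dict[str, List[str]]) -> Response:
    """Small per-user response that must not be shared between users."""
    items = carts.get(user_id, [])
    headers = {
        "Content-Type": "application/json",
        "Cache-Control": "private, no-store",
    }
    return headers, json.dumps({"count": len(items), "items": items})


if __name__ == "__main__":
    print(base_page()[1])
    print(cart_api("alice", {"alice": ["tickets", "scarf"]})[1])
```

The expensive page is now generated once and shared, while the server only does per-user work for a few bytes of JSON.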

06. Find your limit

So you’ve made a heap of changes to enable your site to handle high load. But how do you know it has worked? There is always a breaking point; you need to know what it is. Load testing – simulating high traffic so you can understand how your site performs – is the only way to be sure. The BBC does a lot of load testing because, even though an educated guess can be made as to how our site will scale, there are always the unknown unknowns.
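The core of a load test is small: make many requests at once, time each one, and watch how the timings change as concurrency rises. The sketch below shows that loop using only Python's standard library. It is a simplified illustration: `run_load_test` is a hypothetical helper, and `fake_request` stands in for a real HTTP request to a test environment. A real load test would use a dedicated tool and much larger numbers.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def timed_call(handler: Callable[[], object]) -> float:
    """Run one request and return how long it took, in seconds."""
    start = time.perf_counter()
    handler()
    return time.perf_counter() - start


def run_load_test(handler: Callable[[], object],
                  total_requests: int,
                  concurrency: int) -> Dict[str, float]:
    """Send total_requests calls, at most `concurrency` at a time."""
    if total_requests < 1 or concurrency < 1:
        raise ValueError("total_requests and concurrency must be at least 1")
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(
            pool.map(lambda _: timed_call(handler), range(total_requests))
        )
    # 95th percentile: the time within which 95% of requests finished.
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)
    return {
        "requests": len(latencies),
        "median": statistics.median(latencies),
        "p95": latencies[p95_index],
        "max": latencies[-1],
    }


def fake_request() -> None:
    time.sleep(0.01)  # stand-in for an HTTP request to a test server


if __name__ == "__main__":
    # Step the concurrency up and look for the point where latency climbs.
    for concurrency in (1, 10, 50):
        print(concurrency, run_load_test(fake_request, 200, concurrency))
```

The breaking point shows up as the concurrency level at which the 95th-percentile time starts to climb sharply, or requests begin to fail.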

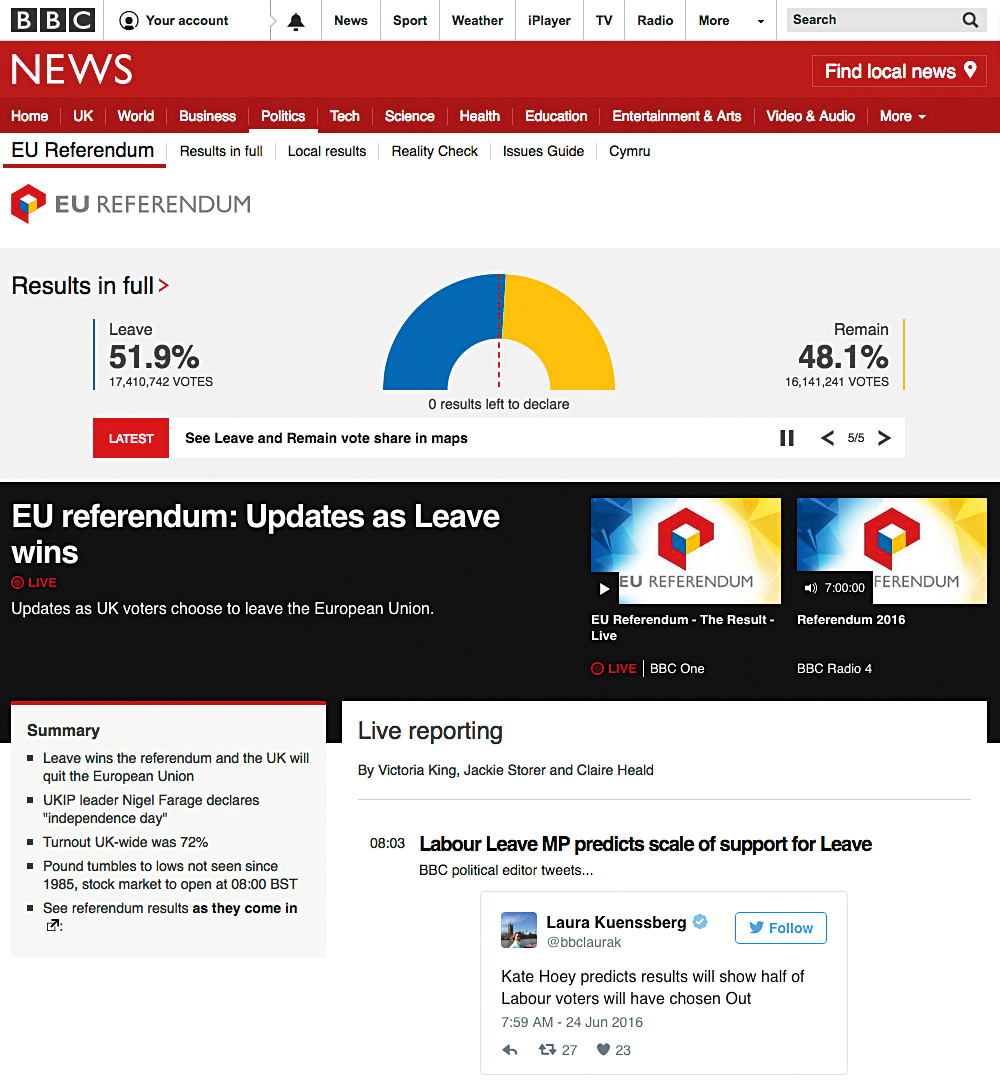

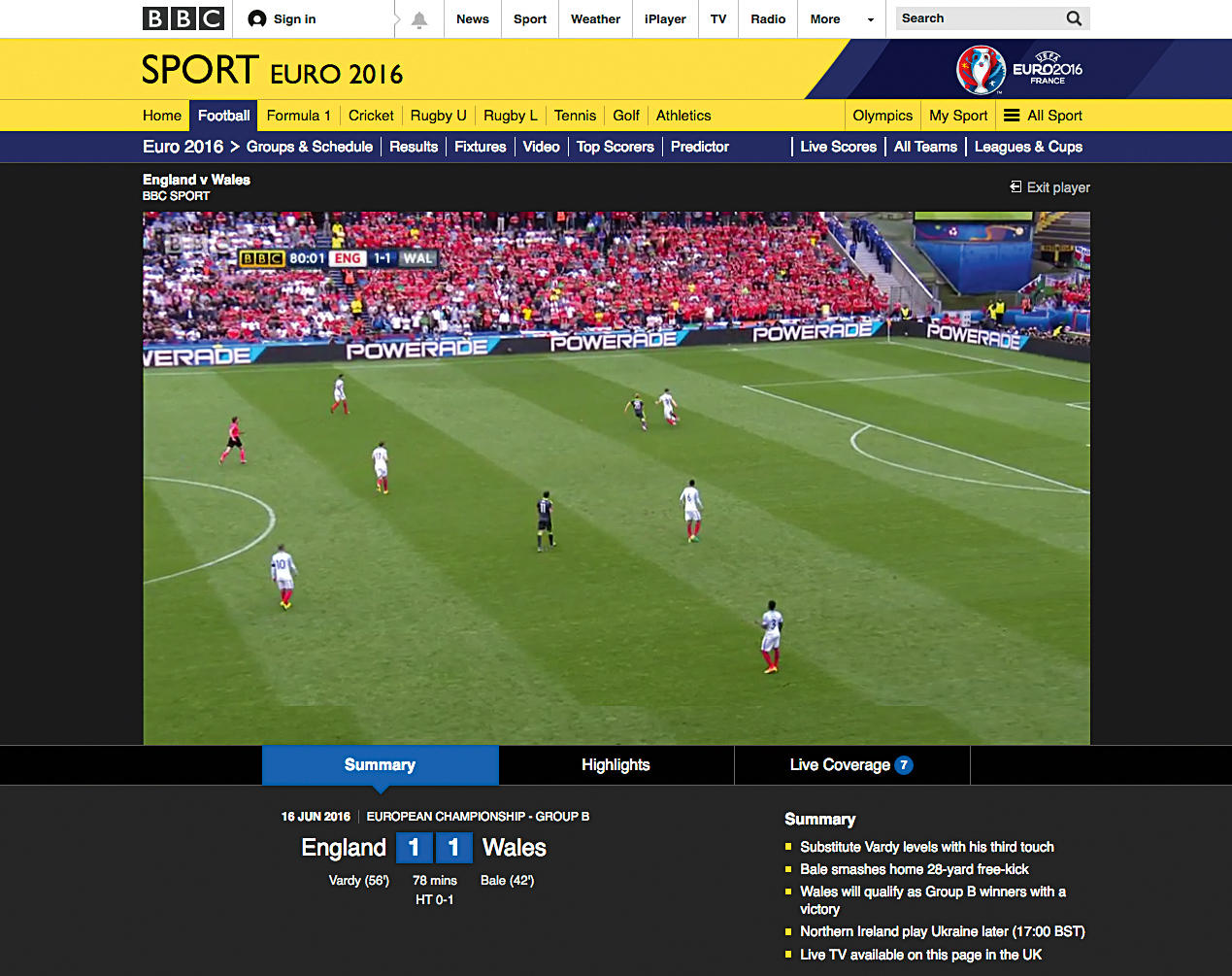

It’s often the case that the busiest moments are also the most important. The BBC website’s busiest times are during major sporting events or breaking news – moments when failure is not an option. Load testing can give you confidence that you’re ready for when the big day comes.

07. Compromise, but not on speed

In many cases, a site under high load will slow down. Web servers and databases will handle high traffic by doing multiple things at once, causing performance to drop. But this is precisely the time you don’t want poor performance, as you’re probably welcoming new users that you want to impress.

At the BBC we’ve noticed that, for every additional second a page takes to load, 10 per cent of users leave. This is why, if the BBC site is slowing down due to load, certain features will automatically switch off to bring the speed up again. These will be low-importance things – such as a promo box at the bottom of a page – that are expensive for the server to produce and that few users will miss.
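A switch-off mechanism like this can be sketched in a few lines. The example below is not the BBC's implementation: the `Feature` class, the feature names and the half-second threshold are all hypothetical. It simply drops non-essential features whenever recent response times exceed a target.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Feature:
    name: str
    essential: bool  # essential features are never switched off


def features_to_render(features: List[Feature],
                       avg_response_seconds: float,
                       threshold_seconds: float = 0.5) -> List[str]:
    """Return the names of features to include in the page right now."""
    overloaded = avg_response_seconds > threshold_seconds
    # Under load, keep only what matters; otherwise render everything.
    return [f.name for f in features if f.essential or not overloaded]


if __name__ == "__main__":
    page = [
        Feature("article", essential=True),
        Feature("live-scores", essential=True),
        Feature("promo-box", essential=False),
    ]
    print(features_to_render(page, avg_response_seconds=0.2))
    print(features_to_render(page, avg_response_seconds=1.4))
```

Once response times recover, the same check lets the optional features come back without anyone having to intervene.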

In short, if compromise is necessary during high traffic moments, pick the right thing to compromise on. Which probably isn’t speed.

These seven techniques have helped the BBC create a site that handles tens of millions of users every day. They’ve been proven to work with the biggest moments the BBC website has ever seen, such as this year’s Olympics, when thousands of pages were requested every second.

Back in 1948, when the Olympics were first televised live, there were only 80,000 TVs to receive it. Today, 80,000 smartphones are sold every half an hour. The internet’s growth continues to be astonishing. With these techniques, your site can be ready for astonishing growth of its own.
