There has been an explosion of AI art in the past two years, but the history of AI art begins long before the arrival of diffusion models like Midjourney, DALL-E 2 and Stable Diffusion in 2022. In fact, it goes all the way back to the 1960s, and humans' interest in the possibility of machines that can make art goes back even further.

Today, many artists are among the fiercest critics of AI art generators, but over the years, artists have also been at the forefront of developing new technology that can create images, sometimes in their own style. Below we look at where AI art came from, how it evolved and where it stands today.

The history of AI art: conceptual beginnings

AI art is a relatively recent phenomenon, but people have been fascinated with the idea of machines that can make art for centuries. Even the ancient Greeks imagined such a thing: Daedalus and Hero of Alexandria are described as having made machines that could write text and play music. This vision of art-making machines has inspired philosophers and inventors throughout history.

Before the advent of computer science, the Swiss mechanician Henri Maillardet created an automaton known as the Draughtsman-Writer. Now at the Franklin Institute in Philadelphia, it can produce four drawings and three poems. This was mechanical reproduction rather than the creation of new work, but as early as the 1840s, Ada Lovelace, often considered the mother of computer science, envisioned a machine that could go beyond calculation and create art of its own.

The beginning of AI art in modern times

One step in that direction came from the reactive machines of inventors like the cybernetician Gordon Pask, whose Musicolour machine in the 1950s used sound input from a human performer to control lights. Jean Tinguely's 'painting machines' were kinetic sculptures that allowed a user to choose the color and position of a pen and how long the machine would work. The machine would then create an original abstract artwork, but the user had little control over the result.

Things advanced after the academic discipline of artificial intelligence was founded in a research workshop at Dartmouth College in 1956. Early explorations in the creation of art using artificial intelligence were sometimes referred to as algorithmic art or computer art. And one British artist would dedicate his life to exploring this...

AI art in the 1960s and 70s: Harold Cohen's AARON

Harold Cohen began developing AARON at the University of California, San Diego in the late 1960s. Using a symbolic, rule-based approach, Cohen aimed to encode the act of drawing and create a machine that could produce original works in his own style, building his own plotters and painting machines to interpret the computer's commands.

When it was first exhibited at the Los Angeles County Museum of Art in 1972, AARON could only create simple black-and-white drawings, but Cohen continued to develop it until his death in 2016, eventually giving it the ability to paint using brushes and dyes chosen by the program itself. Its name is believed to allude to the biblical Aaron, questioning the glorification of artistic creation as communication with the divine. AARON was limited to the single style Cohen coded into it, based on his own painting style, but it could produce an infinite number of images.

2000s: AI art evolves

By the 2000s, more accessible software and a growing number of artists learning to code had led to a rise in interest in generative art. Earlier pioneers had already paved the way: in 1991 and 1992, Karl Sims won the Golden Nica award at Prix Ars Electronica for Panspermia and Liquid Selves, 3D computer-animated videos that used artificial evolution techniques to select from among random mutations of shapes. In 1999, the self-described software artist Scott Draves began Electric Sheep, an evolving, infinite animation of fractal flames that learns from its audience.

Meanwhile, the creation of huge, publicly available annotated image repositories like ImageNet would go on to provide the datasets used to train algorithms to catalogue images and identify objects, laying the groundwork for the machine learning models of the following decade.

2010s: GAN models allow AI to learn specific styles

In 2014, Ian Goodfellow and colleagues at Université de Montréal created a type of deep neural network that could learn to mimic the statistical distribution of input data, including images. Named a generative adversarial network (GAN), it comprised a 'generator' that creates new images and a 'discriminator' that tries to tell those images apart from real examples in the training data. As the two networks compete, the generator's output becomes increasingly convincing. This represented a major evolution from algorithmic art because the network could learn to create any given style by analysing a big enough dataset.

Being able to create images in a specific style meant the ability to reproduce the style of any specific artist without their collaboration. The advertising agency J. Walter Thompson Amsterdam provided a headline-grabbing example of this in 2016 with The Next Rembrandt. The project involved training an AI model on a dataset built from the Dutch master's 346 existing works. The result was a new painting imagining what Rembrandt might have produced had he lived longer.

Another major contributor to the growing interest in neural-network imagery, though not itself a GAN, was Google's DeepDream. Emerging in 2015, it used a convolutional neural network to identify and enhance patterns in images through a kind of algorithmic pareidolia, resulting in images with a dream-like look.

A notable work created by a GAN was Edmond de Belamy, a portrait generated in 2018 by the Parisian arts collective Obvious, which trained an open-source algorithm created by the artist Robbie Barrat on 15,000 portraits from the online art encyclopedia WikiArt, spanning the 14th to the 19th centuries. A print of the work sold for $432,500 at Christie's in 2018. That same year, Artbreeder launched, becoming the first well-known, easily accessible online AI image generator. Built on StyleGAN and BigGAN, it allowed users to generate and modify images.

AI art wins awards

AI art soon began to scoop awards. In 2019, Stephanie Dinkins won the Creative Capital Award for Not the Only One (NTOO), described as a multigenerational memoir of a Black American family told from the mind of an artificial intelligence of evolving intellect. In the same year, Sougwen Chung won the Lumen Prize for her performances with a robotic arm that uses AI to attempt to draw in her style.

The explosion of diffusion-based AI art generators

The last big leap in AI art has been the arrival of the diffusion-based text-to-image models responsible for the explosion of AI visuals produced today. OpenAI's DALL-E 2 appeared in April 2022, Midjourney entered open beta in July 2022 and the open-source Stable Diffusion joined the party just a month later.

These tools are quick and easy to use, and they produce far more realistic-looking output than earlier generators. That has brought AI art into mainstream popular culture, allowing anyone to create an image of almost anything they can imagine.

The explosion was accompanied by some murky ethics, with many AI models trained on datasets scraped from the web without regard for copyright. That was enough to largely keep businesses away from using AI art. However, the launch of the 'commercially safe' Adobe Firefly in March 2023, and the rollout of AI image generation directly in Microsoft's Bing, Google Images or via integration with chatbots like Copilot and ChatGPT, mean the creation of AI-generated images has quickly become commonplace.

The explosion of AI art has led to other problems too. We've seen AI-generated images being passed off as digital paintings or photographs, and even winning art competitions. There are also concerns that AI images could spread fake news or be used for scams. Instagram has finally added a 'Made with AI' tag, but there has also been a backlash against AI art: the anti-AI social network Cara has seen a surge in popularity.

AI art today

AI art continues to raise many questions, including whether it should be called art at all. The ease of access to AI image generators and the sheer amount of content being created at the click of a button also raise questions about whether AI-generated art has any value, and it's still unclear how normalised it will become and how accepting the public will be.

Ultimately, the market will decide whether AI art becomes something that is bought, sold and used by brands or remains merely entertainment on social media. Some uses we've seen so far have led to backlashes or seemed jarring, like when The Glenlivet proudly boasted of putting AI art on €40,000-per-bottle whisky, apparently unaware of the contradiction with its artisanal tradition.

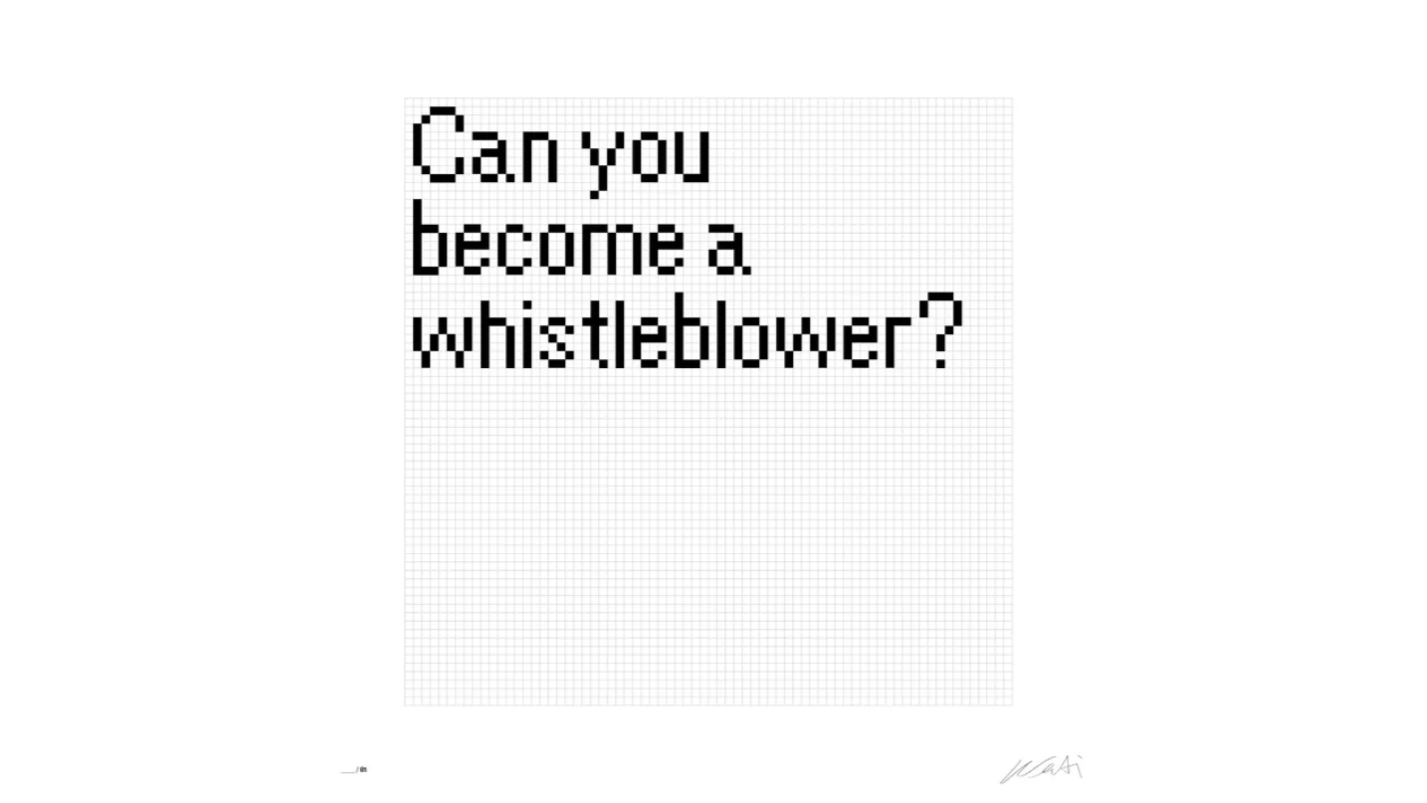

But this isn't art; it's design and decoration. With AI set to become a major part of many aspects of modern life, artists are likely to seek new ways to interact with it, comment on it and critique it. Consider pieces like Ai Weiwei's 81 Questions, which saw the Chinese artist hold a question-and-answer conversation with ChatGPT, with the responses broadcast on public screens. Ai has suggested that the types of art AI can easily reproduce have already become meaningless. Meaning may be what ensures that original human-created art retains its value, its authenticity and a premium.

Creative Bloq's AI Week is held in association with DistinctAI, creators of the new plugin Vision FX 2.0, which creates stunning AI art based on your own imagery – perfect for ideation and conceptualising during the creative process. Find out more on the DistinctAI website.

Joe is a regular freelance journalist and editor at Creative Bloq. He writes news, features and buying guides and keeps track of the best equipment and software for creatives, from video editing programs to monitors and accessories. A veteran news writer and photographer, he now works as a project manager at the London and Buenos Aires-based design, production and branding agency Hermana Creatives. There he manages a team of designers, photographers and video editors who specialise in producing visual content and design assets for the hospitality sector. He also dances Argentine tango.