10 tips for better testing

How to get the most from your testing strategy and ensure your sites are the best they can be.

Web development is more complicated now than a few years ago. Web design tools, browser features, frontend frameworks and best practices change almost monthly and there is always something to update. And with this comes risk. How can we be sure these changes won't have unintended side effects?

Testing is all about mitigating risk. If a user has trouble using a site, they are less likely to come back and more likely to jump over to a competitor. Checking every decision that gets made when developing a site reduces the chance that users will have a sub-par experience.

Clearly there is more to user testing than just making sure a codebase is free of errors. There are steps to test right the way through the design process that can make sure everything that gets created is guided by a real user need.

01. Write lots of unit tests

Unstructured code is a precursor to bugs and issues further down the road. Not only is it hard to understand and all but impossible to upgrade, it is also difficult to test. With so many pieces all directly relying on each other, tests must run on all of the code at once. This makes it difficult to see exactly what doesn't work when the time comes.

Each part of the application should be broken up into its own concern. For example, a login form can comprise database queries, authentication and routing in addition to styled inputs and buttons. Each one of these is a great candidate for having its own class, function or component.

The foundation of a solid codebase is a good set of unit tests. These should cover all code and be quick to run. Most unit tests and their frameworks share the same structure:

<pre>
// "describe" names the piece of code under test
describe("DateLocale", function() {
  test("provides the day in the correct language", function() {
    // Set up the scenario
    const date = new DateLocale("en");
    date.setDate(new Date(1525132800000)); // 1 May 2018, 00:00 UTC
    // Compare the actual result with the expected one
    expect(date.getDay()).toBe("Tuesday");
  });
});
</pre>

A "describe" block denotes what piece of code is under test. Inside that are a number of tests that set up a scenario and compare our expected result with the actual result. If they don't match, the test fails and we can investigate further.

By creating and running unit tests as we change files, we can be sure that nothing has accidentally broken the expected functionality of each piece of code. These pieces can also be dropped into other projects where needed. As tests are already written for it, we can be sure that this particular unit is free of any issues right from the start.
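The test above exercises a DateLocale class that is never shown. As a rough sketch only, here is one way such a unit could look. The method names come from the test. Everything else is an assumption, including the use of the built-in Intl.DateTimeFormat API and the choice to format in UTC so the result does not depend on the machine's time zone.

```javascript
// Hypothetical implementation of the DateLocale class used in the test above.
// Assumption: weekday names come from the built-in Intl.DateTimeFormat API.
class DateLocale {
  constructor(locale) {
    this.locale = locale;
    this.date = new Date();
  }

  setDate(date) {
    this.date = date;
  }

  // Returns the full weekday name in the configured locale.
  getDay() {
    const formatter = new Intl.DateTimeFormat(this.locale, {
      weekday: "long",
      timeZone: "UTC", // assumption: keeps the result stable across time zones
    });
    return formatter.format(this.date);
  }
}

const date = new DateLocale("en");
date.setDate(new Date(1525132800000)); // 1 May 2018, 00:00 UTC
console.log(date.getDay()); // "Tuesday"
```

Because the class has no dependencies beyond the language itself, it can be tested in isolation and reused in another project along with its tests.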

There are plenty of tools to help write unit tests, such as Jest, Jasmine and AVA. The best fit will depend on the needs of each project, any frameworks involved and ultimately developer preference.

02. Use test doubles when required

While it may seem counter-intuitive, it is easy to test more than originally intended. If a function depends on an external library, for example, any bugs in that library will cause our tests to fail even if the code we have written is sound.

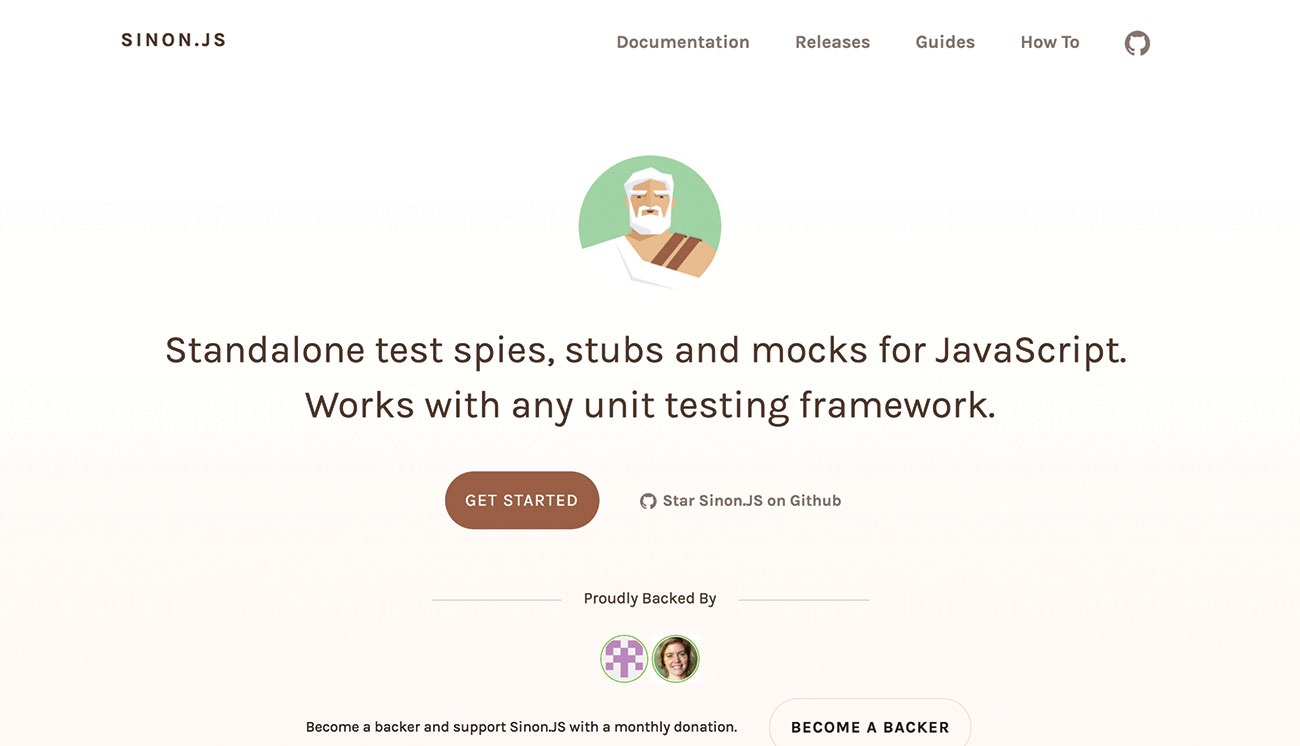

The solution to this is to add placeholders – or 'test doubles' – for this functionality that behave in the same way but will always give us an expected result. The three main test doubles are 'mocks', 'stubs' and 'spies'.

A mock is a class or object that simply holds the place of a real one. It has the same interface but does not provide any practical functionality.

A stub is similar to a mock but will respond with pre-programmed behaviour. These will be used as needed to simulate specific parts of an application while it's being tested.

A spy is more focused on how the methods in that interface were called. Spies are often used to check whether a function ran, how many times it ran and what arguments were supplied when it did. This is so we know the right things are being called at the right time.

Libraries such as Sinon, Testdouble and Nock provide great, ready-made test doubles. Some suites such as Jasmine also provide their own doubles built-in.
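To make the difference between a stub and a spy concrete, here is a minimal hand-rolled sketch that uses no library at all. The function under test (greet), the clock object and the createSpy helper are all hypothetical names made up for this illustration. The libraries above offer far more complete versions of the same idea.

```javascript
// Code under test: depends on a clock object and a logger function.
// Both are passed in so that a test can replace them with doubles.
function greet(name, clock, log) {
  const hour = clock.getHour();
  const greeting = hour < 12 ? "Good morning" : "Good afternoon";
  const message = `${greeting}, ${name}`;
  log(message);
  return message;
}

// Stub: same interface as a real clock, but always returns a fixed hour.
const stubClock = { getHour: () => 9 };

// Spy: records the arguments of every call so they can be inspected later.
function createSpy() {
  const spy = (...args) => {
    spy.calls.push(args);
  };
  spy.calls = [];
  return spy;
}

const logSpy = createSpy();
const result = greet("Ada", stubClock, logSpy);

console.log(result); // "Good morning, Ada"
console.log(logSpy.calls.length); // 1
console.log(logSpy.calls[0][0]); // "Good morning, Ada"
```

The stub removes the time of day from the equation, so the test gives the same answer whenever it runs. The spy lets us confirm that the logger was called exactly once with the right message.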

03. Check how the components work together

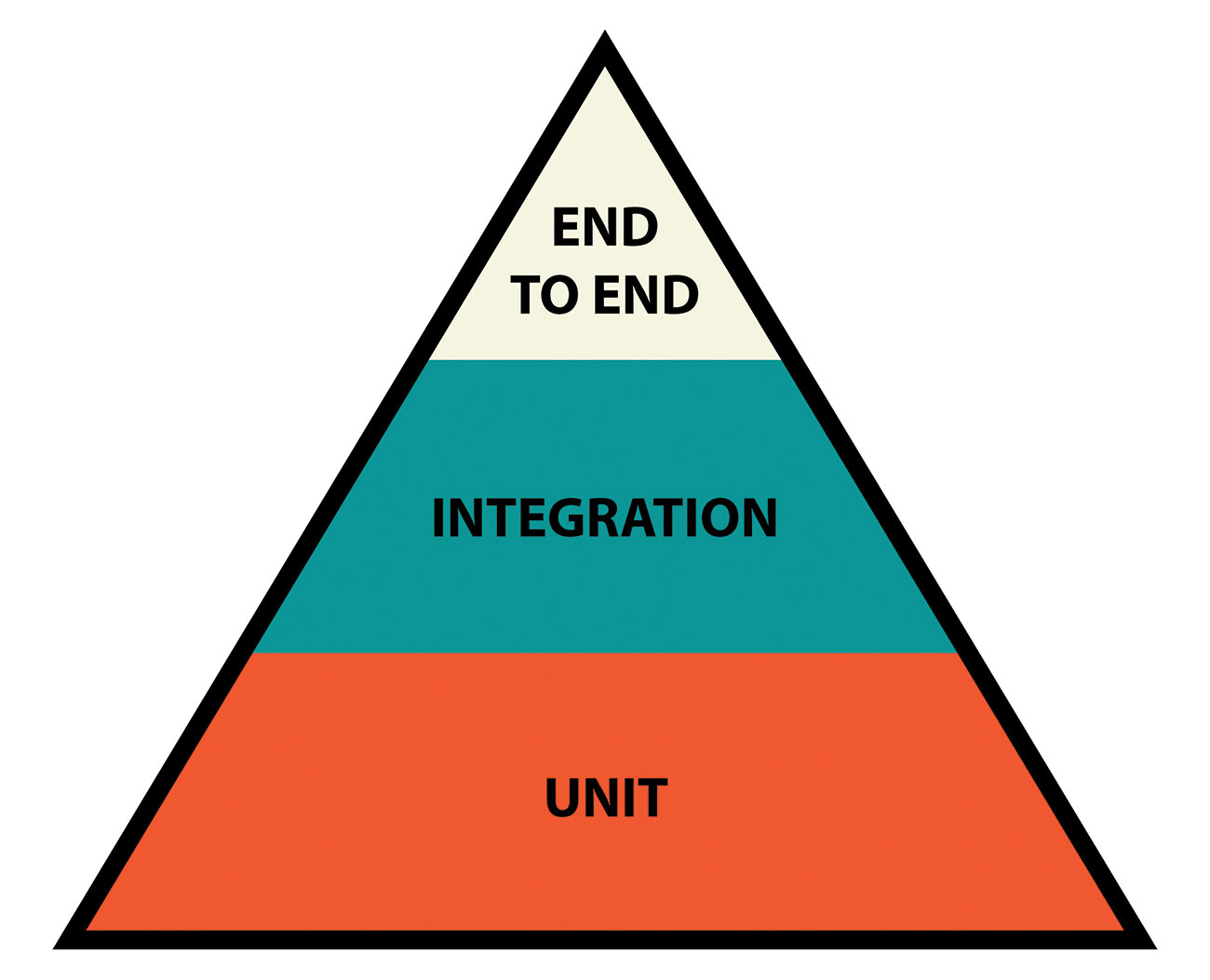

Once code is broken out into separate components, we then need to test that they can work together. If the authentication layer doesn't understand what gets returned from the database, for example, nobody would be able to log in. Tests that check how one part of the application works with another are known as 'integration tests'. While unit tests are deliberately isolated from one another, integration tests exercise the communication between the parts involved.

As with unit tests, the goal of an integration test is to check the end result was the intended one. In our login example, that may be a check to see if the "last logged in" timestamp was updated in the database.

Since more is being dealt with at one time, integration tests are typically slower than unit tests. As such there should be fewer of them and they should run less often. Ideally, these would run only after a feature has been completed, to be sure nothing has changed unexpectedly.

The same suites used for unit tests can be used to write integration tests, but they should be able to execute separately to keep things running quickly.
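As an illustration of the login example, the sketch below wires a store and an authentication function together and checks the end result in the store. Everything here is hypothetical: the in-memory Map stands in for a real database, and the plain-text password comparison is for brevity only.

```javascript
// Hypothetical data layer: an in-memory stand-in for a real database.
function createUserStore() {
  const users = new Map([
    ["ada", { username: "ada", password: "s3cret", lastLoggedIn: null }],
  ]);
  return {
    find: (username) => users.get(username) || null,
    update: (username, changes) => {
      users.set(username, { ...users.get(username), ...changes });
    },
  };
}

// Hypothetical authentication layer that relies on the store's interface.
// Real code would compare password hashes, never plain text.
function login(store, username, password, now) {
  const user = store.find(username);
  if (!user || user.password !== password) return false;
  store.update(username, { lastLoggedIn: now });
  return true;
}

// Integration check: run both layers together and inspect the stored result.
const store = createUserStore();
const loginTime = new Date(1525132800000);

console.log(login(store, "ada", "s3cret", loginTime)); // true
console.log(store.find("ada").lastLoggedIn === loginTime); // true
console.log(login(store, "ada", "wrong", loginTime)); // false
```

Unlike a unit test, nothing is replaced with a double here. If the store changed the shape of what it returns, the login check would fail and expose the mismatch.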

04. Follow the path of each user

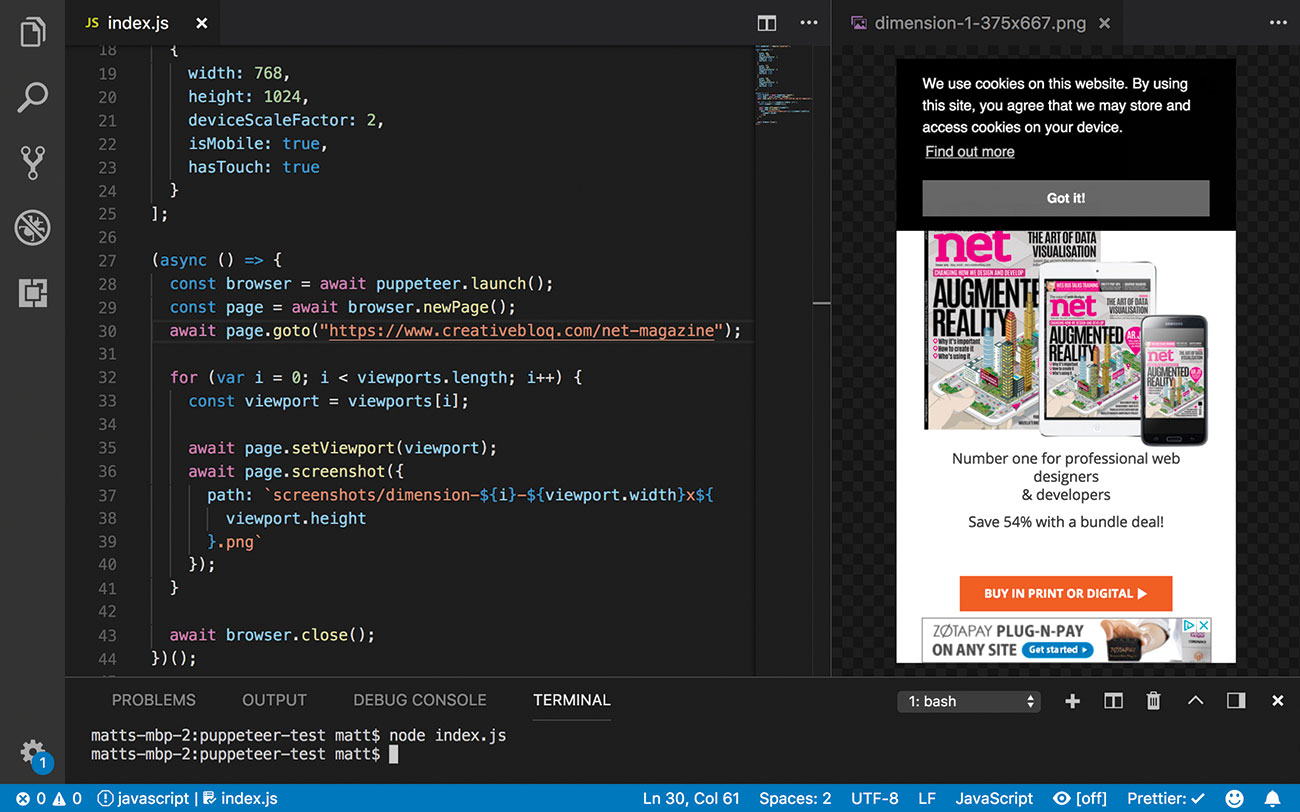

The top level of automated technical testing is known as 'end-to-end' or 'functional' testing. As the names suggest, this level covers all actions a user can take from start to finish. They simulate real scenarios and how a user is likely to interact with the finished product.

The structure of these tests often mirrors user stories created as part of the development process. To extend an example from earlier, there may be a test to make sure a user can enter their username and password on a login form.

As they rely on the UI to run, they need to be updated as the interface changes. Long load times can also cause issues. If an action cannot be completed quickly enough, the test will time out and fail even though the feature itself works, which results in false positives.

These tests will also run slowly. The bottleneck tends to come from running the browser, which is not as fast as the command line but is necessary to emulate the right environment. As such these will run less frequently than integration tests – usually before pushing a set of changes into production.

Tools such as Selenium and Puppeteer can help with writing end-to-end tests. They enable browsers to be controlled through code to automate what would otherwise be a repetitive manual process.

05. Set performance budgets

Modern front-end development often involves creating bundles for each project with lots of heavy assets. Without care, these can have a damaging impact on performance.

Webpack comes with a way to keep track of performance issues such as bundle and asset size. By tweaking the 'performance' object in webpack.config.js, we can make it emit warnings when files grow too large, along with hints on how best to tackle them. These warnings can even be raised to errors that stop a build from succeeding, so end users aren't negatively affected.
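A budget of this kind might look something like the fragment below. The option names are Webpack's own, but the byte limits and the file filter are illustrative assumptions rather than recommendations, and the rest of the configuration is left out.

```javascript
// webpack.config.js (fragment): only the performance settings are shown.
// The byte limits below are illustrative assumptions, not recommendations.
module.exports = {
  // ...entry, output, loaders and plugins would go here
  performance: {
    // "error" fails the build; "warning" reports the problem and carries on
    hints: "error",
    // Largest size in bytes allowed for any single emitted file
    maxAssetSize: 200000,
    // Largest combined size in bytes allowed for the assets of one entry point
    maxEntrypointSize: 300000,
    // Only apply the budget to JavaScript and CSS files
    assetFilter: (assetFilename) => /\.(js|css)$/.test(assetFilename),
  },
};
```

Starting with "warning" and switching to "error" once the bundle is under budget is one way to introduce the check without blocking existing work.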

It is also important to test on a range of devices similar to those used by the visitors to the site. A mobile-first approach to design and development makes sure users on low-end devices aren't left waiting for a page to render.

WebPagetest provides a comprehensive overview of the performance of a website alongside hints as to how it can be improved. Live services such as Pingdom can track the performance of a site with live users for real-world data.

06. Develop for accessibility

Every website should be readily accessible to everyone. While accessibility testing commonly refers to those with disabilities, changes made as a whole will benefit everybody by creating a more approachable, easy-to-navigate site.

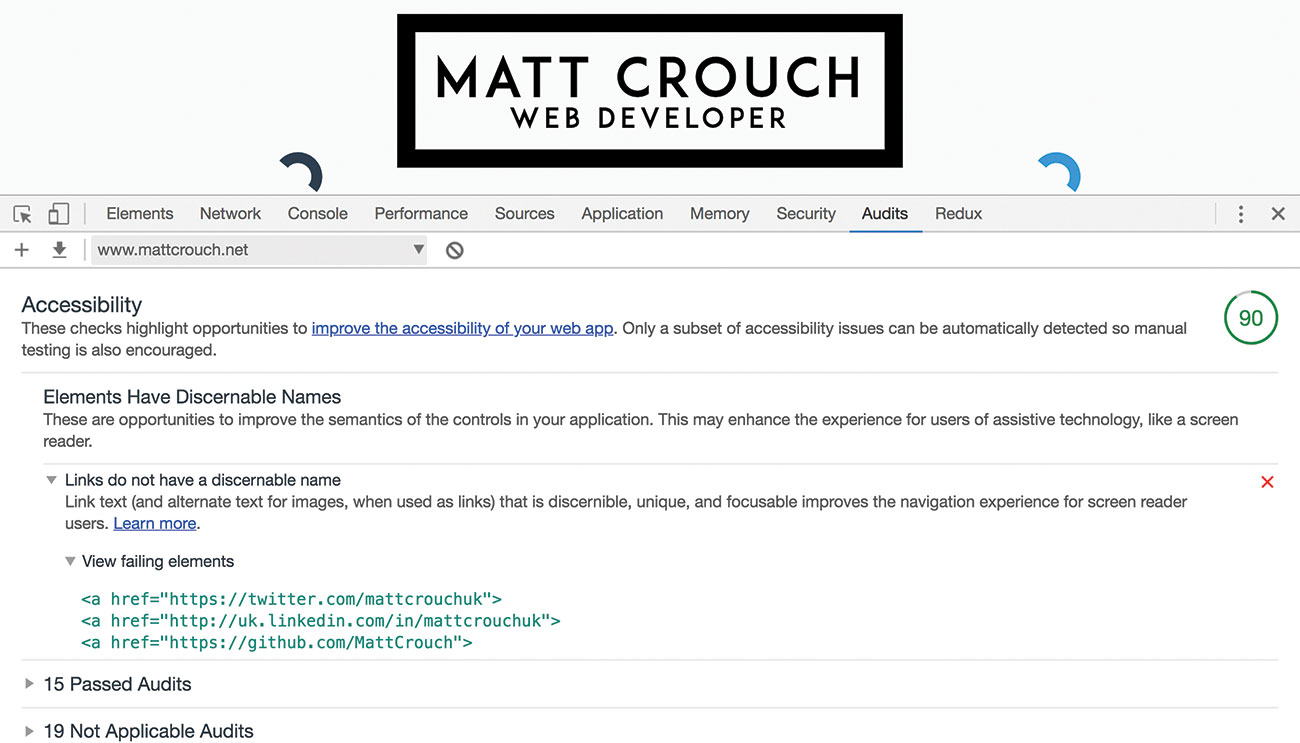

There are tools that can automatically detect the most common issues, such as poor semantic markup or missing alt text on images. Lighthouse, for example, runs inside the Chrome developer tools and gives instant feedback on the accessibility of the page it analysed.

Automated tooling cannot detect everything. For example, a machine cannot know whether the alternative text for an image is appropriate. There is no substitute for manual testing alongside users with various disabilities. Each user's devices will be set up for their own needs, and we need to make sure those setups are catered for.

07. Work to the extremes

Edge cases are a common cause of issues – particularly the length and content of strings. By default, long words will stretch the container and cause flow issues on a page. But what happens if someone decides to use characters from a different alphabet or makes use of emoji?

Issues become more permanent when storing these strings in a database. Long strings may be truncated and encoding issues can end up distorting the message. All test data should include these checks.
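The sketch below shows what such test data could look like, along with one way truncation can go wrong. The truncate helper and the sample strings are hypothetical. It only guards against cutting an emoji in half, and does not handle combined characters such as flags or accented letters built from several code points.

```javascript
// Sample edge-case strings worth including in test data (illustrative only).
const edgeCases = [
  "",                                   // empty input
  "a".repeat(10000),                    // very long string
  "Donaudampfschifffahrtsgesellschaft", // long word with no break points
  "Привет, мир",                        // Cyrillic
  "こんにちは",                          // Japanese
  "👍🎉",                               // emoji
];

// Hypothetical helper: shortens text to at most maxLength characters.
// Array.from splits by code point, so an emoji is never cut in half.
function truncate(text, maxLength) {
  const codePoints = Array.from(text);
  if (codePoints.length <= maxLength) return text;
  return codePoints.slice(0, maxLength).join("");
}

// A naive slice counts UTF-16 units and leaves half of the first emoji behind.
console.log("👍🎉".slice(0, 1) === "👍"); // false
console.log(truncate("👍🎉", 1) === "👍"); // true

// Every edge case should come back no longer than the limit.
for (const text of edgeCases) {
  console.log(Array.from(truncate(text, 20)).length <= 20); // true
}
```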

Fuzz testing is an automated technique that bombards an interface with random input as a form of stress test. The aim is to make sure no issues arise from an unlikely but possible set of user actions.
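A very small fuzz test can be sketched in a few lines. In this example everything is an assumption made for illustration: the slugify function under test, the rule that its output may only contain a-z, 0-9 and single hyphens, and the simple seeded random generator that makes any failure reproducible.

```javascript
// Small seeded pseudo-random generator so that failures can be reproduced.
function createRandom(seed) {
  let state = seed >>> 0;
  return () => {
    state = (Math.imul(state, 1664525) + 1013904223) >>> 0;
    return state / 4294967296;
  };
}

// Builds a string of random UTF-16 code units, including unusual ones.
function randomString(random, maxLength) {
  const length = Math.floor(random() * (maxLength + 1));
  let text = "";
  for (let i = 0; i < length; i++) {
    text += String.fromCharCode(Math.floor(random() * 0x10000));
  }
  return text;
}

// Hypothetical function under test: turns a title into a URL slug.
function slugify(text) {
  return text
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
}

// Feeds random input to fn and collects every input that throws or
// produces output that breaks the expected format.
function fuzz(fn, runs, seed) {
  const random = createRandom(seed);
  const failures = [];
  for (let i = 0; i < runs; i++) {
    const input = randomString(random, 40);
    try {
      const output = fn(input);
      if (!/^[a-z0-9]*(-[a-z0-9]+)*$/.test(output)) failures.push(input);
    } catch (error) {
      failures.push(input);
    }
  }
  return failures;
}

console.log(fuzz(slugify, 1000, 42).length); // 0
```

Any input that ends up in the failures list can be copied into a regular unit test, which turns a random discovery into a permanent check.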

These extremes aren't just limited to content. Those on slow connections, low-end devices and smaller screens shouldn't be made to wait. Always aim for faster performance metrics, such as time to first paint, to cater for these users.

In short, almost all aspects of development are more varied than anticipated. Use real-world data as early in the process as possible to be sure the site can cope with every eventuality.

08. Keep an eye on regressions

As features are added or changed, tests will need to be re-run. It is important to prioritise those that are likely to be affected by that change. The test runner Jest is able to determine which files have changed based on Git commits, for example through its --onlyChanged option. It can then determine which tests to run first to give the fastest feedback.

Visual regression tools like PhantomCSS can detect when styles have changed. A similar concept exists in Jest for objects or UI components, called snapshot tests. These capture the output of each test the first time it runs. When that output changes, the test will fail until the change has been confirmed.
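The idea behind a snapshot test can be sketched without any framework. This is not how Jest implements it. The in-memory store, the createSnapshotChecker helper and the renderButton component are all hypothetical, and real tools save snapshots to files so they can be reviewed and committed.

```javascript
// Minimal snapshot store kept in memory; real tools write snapshots to disk.
function createSnapshotChecker() {
  const snapshots = new Map();
  return function matchesSnapshot(name, value) {
    const serialised = JSON.stringify(value, null, 2);
    if (!snapshots.has(name)) {
      snapshots.set(name, serialised); // first run: record the output
      return true;
    }
    return snapshots.get(name) === serialised; // later runs: compare
  };
}

// Hypothetical component that renders to a plain object.
function renderButton(label) {
  return { type: "button", props: { className: "btn" }, children: [label] };
}

const matchesSnapshot = createSnapshotChecker();
console.log(matchesSnapshot("button", renderButton("Save"))); // true (recorded)
console.log(matchesSnapshot("button", renderButton("Save"))); // true (unchanged)
console.log(matchesSnapshot("button", renderButton("Submit"))); // false (changed)
```

When the final check fails, a developer decides whether the change was intended. If it was, the stored snapshot is replaced with the new output.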

09. Test early, test often

When tight schedules determine releases, it is easy to let the developers create a product and have testers test the execution. In reality, this can lead to a lot of wasted development time.

Getting in the habit of testing each new feature early means an idea can be checked to make sure it's heading in the right direction. By using paper prototypes and mockups, it is easy to test an idea with no code at all.

By regularly testing a feature as it gets developed, we can be sure it's hitting the needs of the user. If any small tweaks are required, they are easier to implement in smaller stages.

It's also important to get ideas tested on real users. Rounds of alpha and beta testing can highlight issues early enough to correct them with little overhead. Later rounds should involve targeted demographics that are related to the eventual end user.

Finally, keep these rounds as small as possible. A study by Nielsen found that five users is enough to get an idea of what works and what doesn't. If the element under test is kept small, the range of feedback gained will be enough to fuel the next round of testing.

10. Encourage a test culture

Tests can only be of benefit when used regularly. Everybody involved in a project has to be on board for them to be most effective.

Continuous integration (CI) tools automate as many checks as possible before any update lands in a codebase. These can run unit tests, check coverage and flag common issues automatically. Code that fails any of these checks cannot be added to the project.

People separate from the development of a feature can perform quality assurance (QA) tests, which can act as a final check to make sure all the required functionality is present and working.

If bugs do make it through the various checks, make sure there is a process in place to report them both internally and externally. These reports can form the basis of new tests in the future to make sure the same issue never resurfaces.

This article was originally published in issue 307 of net, the world's best-selling magazine for web designers and developers.

Matt Crouch is a front end developer who uses his knowledge of React, GraphQL and Styled Components to build online platforms for award-winning startups across the UK. He has written a range of articles and tutorials for net and Web Designer magazines covering the latest and greatest web development tools and technologies.