Get UX testing right with these 10 essential steps

The UX testing techniques you need to know to nail user experience design.

UX testing is essential when designing user experiences to ensure you're really serving the target user. And successful UX testing requires a deep understanding of the core goals that motivated a project in the first place. Purpose provides context to data. Without that context, the data can become clouded by your own personal bias and assumptions.

That's why so many impressive unauthorised UX redesigns of websites like Facebook would likely get nowhere. Making something better cannot be based on opinion. It doesn't matter whether you like using drop-downs in navigation; what matters is whether your user does. User experience strategy isn't about you or me or even about the client who's asking you to improve something. It's about the users who spend their hard-earned money on a company's products or services. The way to find out what works for them is UX testing, and below we'll outline 10 essential steps to get UX testing right.


10 essential steps for successful user testing

01. Determine the purpose of your UX testing

One of the most difficult parts of UX testing, or of testing anything in fact, is understanding the purpose behind it. Why are you currently being asked to test anything at all? In many cases, the organisation only recently began to find value in user experience, perhaps driven by a past failure, but it doesn't know where to begin.

Failure is a powerful instigator and within the framework of UX testing, it's inherently positive because it defines what needs to be fixed. It adds context and guidance and forms the bridge toward the success we're seeking. Failure comes in various forms: lost revenue, cancelled membership accounts, high bounce rates on landing pages, abandoned shopping carts, expensive marketing campaigns that don't convert or maybe a redesign that looks incredible but just doesn't generate as many leads.

A key tip? It's not your job to determine the purpose of a test. It doesn't matter what you want to test, nor does it matter how much better you think you can make what's being tested. Being proactive is great but you need to rely on your team lead, manager or stakeholder on a project to set the purpose of a test. If none of those people can provide adequate guidance, you need to ask them some questions to get the information you need to start.

- What is the motivation behind wanting to conduct this test?

- Who is the customer you are trying to reach (age, gender, likes and dislikes)?

- What would you define as a successful result for this UX testing (are there any specific KPIs)?

- Is there a set date for when the success needs to be realised?

02. Explore your UX testing tools

Chances are you will be using Google Analytics to capture and review data as it's currently installed on over half of all websites. In larger organisations, you may come across New Relic, Quantcast, Clicky, Mixpanel or Adobe Experience Cloud, among others. The platform doesn't matter as much as the strategy behind what you're doing.

If the organisation already has one of these solutions installed, you can jump in and look at data immediately. If not, it's worth installing one and collecting data for 30 days. If the website or application has hundreds or thousands of visitors each day, it's possible to start analysing data earlier than 30 days. Use your best judgement.

03. Establish your benchmark

Although the data adds contextual clues as to what is happening, remember that you only have one side of the story. You have the result: visitor came from site X, went to page Y, viewed product Z and then left after 36 seconds. But wait. What does that mean? Why did that visitor behave that way? You'll never know with this solution and that's okay. That's not the goal. We want to capture and report quantifiable data.

Let's walk through an example. Imagine you have a landing page with the sole purpose of lead generation (your KPI). The landing page requires a visitor to fill out a form and click submit. It could be to receive a free resource, sign up for a webinar or join an email marketing list.

When the form submits, send them to a confirmation page (or trigger an event for the more technically inclined). Track the number of visitors that go to the landing page and how many go to the confirmation page. Divide the second number by the first and you have your conversion rate. The first time you track that information it becomes your benchmark. This will be used to gauge the success of all future changes to UX strategies on this page.

If you change copy on the landing page to better address the needs of your customer and the conversion goes up, then you know that your change was a positive one. Easy, right? Everything you need to get started with benchmarking, regardless of the platform, should stem from that core model. It can be filtered by domain, geography or ad campaign. It's incredibly powerful and very easy to showcase change.
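As a rough illustration of that core model, the sketch below computes a conversion rate from two visitor counts and compares a later measurement against the benchmark. The function names and all figures are hypothetical and only there to show the arithmetic; your analytics platform will report these numbers in its own way.

```python
def conversion_rate(landing_visits: int, confirmations: int) -> float:
    """Share of landing-page visitors who reached the confirmation page."""
    if landing_visits <= 0:
        raise ValueError("landing_visits must be positive")
    return confirmations / landing_visits


def relative_change(benchmark: float, current: float) -> float:
    """Change against the benchmark, expressed as a fraction of the benchmark."""
    if benchmark <= 0:
        raise ValueError("benchmark must be positive")
    return (current - benchmark) / benchmark


# Hypothetical figures, for illustration only.
benchmark = conversion_rate(landing_visits=2000, confirmations=80)
after_copy_change = conversion_rate(landing_visits=2100, confirmations=105)
uplift = relative_change(benchmark, after_copy_change)

print(f"benchmark {benchmark:.1%}, now {after_copy_change:.1%}, change {uplift:+.1%}")
```

The same two functions can be run per domain, geography or ad campaign by feeding them the filtered counts for that segment.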

The most important aspect of showing success in UX strategies is the change in benchmarked data after you implement change. Data is the currency in which UX is funded. That goes for freelancers, agencies or departments within an organisation. Prove the value of what you're doing.

04. Start UX testing with a clear use case

One of the most difficult parts of setting up successful UX testing is to not fall victim to the villain of personal bias. It can creep in when you write a testing plan or when you review data. So how do you craft an effective UX testing plan? It's all about writing simple tasks with a clear focus.

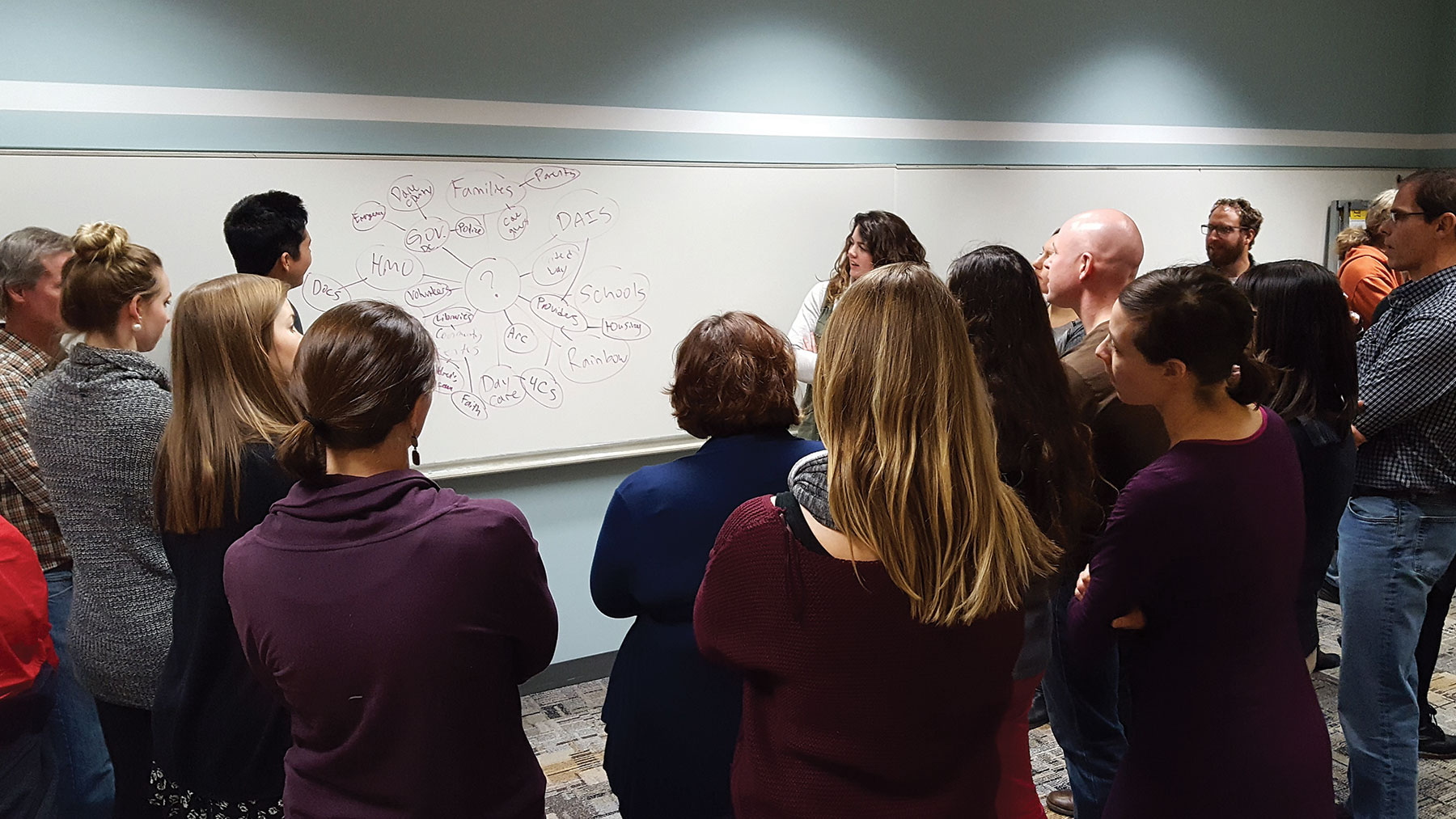

Test participants probably aren't familiar with your organisation, product or goals. Switch to storytelling mode for a moment and set the expectation and motivation as to why they are on the website. Don't start with questions or tasks right away. Remember that the testing participants are roleplaying, in some ways. They may meet the demographics of your customer but they may not actually be a customer. Asking them to find a product on your site becomes a 'click it until I find it' experience with no real comprehension or investment, which makes your data questionable at best.

Instead, explain that they're an avid mountain biker who has trouble riding in the rain because they don't have disc brakes, and that their goal is to find a set of disc brakes that can be installed on their 26" bike. That adds purpose and context for more realistic UX testing.

05. Aim for 10 test participants

Jakob Nielsen of Nielsen Norman Group, a well-respected evidence-based user-experience consultancy, suggests that elaborate usability tests are a waste of resources and that the best results come from testing no more than five users.

The problem with this logic is that five participants only show trending data when you can compare them to a larger group. In nearly all tests, it is possible to uncover valuable feedback after the initial five participants have been recorded. But even if nothing new arises, having additional participants to provide supporting data makes the final reports a lot stronger.

If you are given an option, try 10 participants for each test and platform, such as mobile, tablet and desktop. It may be valuable to separate them by parameters like gender or age. Any important customer segment should be treated as a new test to keep the data focused.

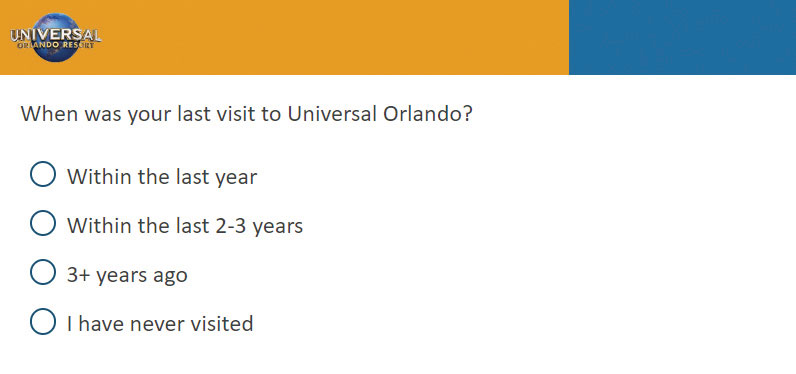

06. Include screener options

Screener options are also important to keep in mind in order to qualify your participants. As participants are paid, some may answer dishonestly so that they can be accepted into the testing. Keep them honest or you will get useless data that may send you in the wrong direction and jeopardise your position. One way to do this is to include fake answers or improbable options on some questions. That way, if a participant selects one, they can be removed from testing.
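Here is a minimal sketch of how a decoy answer might be applied when reviewing screener responses. The brand labels, the participant IDs and the `passes_screener` helper are all invented for illustration; most testing platforms have their own way of configuring this.

```python
# Hypothetical screener question: "Which of these brands have you bought from?"
# "Brand Z" is a decoy that does not exist, so nobody can honestly select it.
DECOY_OPTIONS = {"Brand Z"}


def passes_screener(selected: set) -> bool:
    """Reject any applicant who picked at least one decoy option."""
    return not (selected & DECOY_OPTIONS)


# Hypothetical applicant answers, keyed by participant ID.
applicants = {
    "p01": {"Brand A"},
    "p02": {"Brand B", "Brand Z"},
    "p03": {"Brand C", "Brand A"},
}

accepted = sorted(pid for pid, answers in applicants.items() if passes_screener(answers))
print(accepted)
```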

07. Focus on quantitative data

Although it's easy to capture emotional (qualitative) feedback by asking what people feel about something or what they liked/disliked, that will have you working with the biased perspective of a very small group. Emotional response is dictated by personal experience and will not typically represent your wider customer base. You run the risk of receiving a lot of 'I don't like these images' or 'it's too dark of a design' that contrast against 'I really like these images' and 'this dark design is great'. While entertaining at times, it doesn't provide much value.

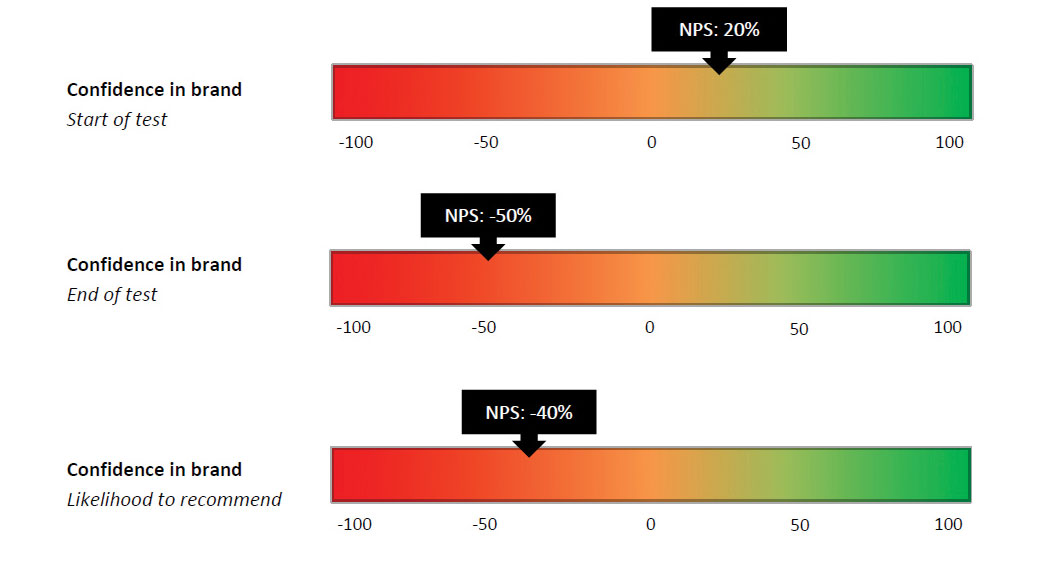

Questions with structured responses are different. A 'yes' or 'no' answer, a record of whether a participant completed a task successfully (also 'yes' or 'no') or even the much-debated net promoter score (NPS) each gives you a value that is measured the same way in every test. That lets you compare the initial benchmarked data against later results over time. It's important to remove as many variables for bias as possible.
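To make those structured values concrete, the sketch below scores one hypothetical round of ten participants. It uses the standard NPS definition (the percentage of respondents scoring 9 or 10 minus the percentage scoring 0 to 6); the function names and the sample responses are made up for illustration.

```python
def task_success_rate(results: list) -> float:
    """Fraction of participants who completed the task (True = completed)."""
    return sum(results) / len(results)


def net_promoter_score(scores: list) -> float:
    """NPS on a 0-10 scale: % promoters (9-10) minus % detractors (0-6)."""
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical responses from one test round.
completed = [True, True, False, True, True, False, True, True, True, True]
likelihood_to_recommend = [10, 9, 8, 7, 6, 9, 10, 3, 8, 9]

success = task_success_rate(completed)
nps = net_promoter_score(likelihood_to_recommend)
print(f"task success {success:.0%}, NPS {nps:+.0f}")
```

Because both numbers are calculated the same way every time, a later round can be compared directly against this one.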

08. Avoid leading questions

Behavioural psychology dictates that many people simply mimic others. It's human nature and often entirely subconscious. Next time you're sitting at a table with someone, take note of how often you both mimic posture. When it comes to the goal of extracting data from others we must be careful to not guide them toward an answer.

A question may be leading if you suggest someone take an action or respond a certain way. For example, 'Visit this website. What do you like about it?' You're leading them into providing very specific feedback. Unless you're testing for how much people like something it's better to reframe the question: 'Visit this website. What is your first reaction?' That's much more open-ended, enabling the participant to answer about anything that stands out first to them.

While this is generally qualitative, it can also provide direct quantitative insight into what someone notices first. 'X number of participants noticed logo first', for example. Or 'Y number of participants noticed promotional box first'. Then you could break each of those down by participant demographic or their sentiment toward it.
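One simple way to turn those open-ended answers into counts is to code each response by the element mentioned first and then tally. The sketch below assumes you have already done that coding by hand; the element labels and age groups are hypothetical.

```python
from collections import Counter

# Hypothetical coded responses: (element noticed first, participant age group).
observations = [
    ("logo", "18-34"),
    ("promotional box", "18-34"),
    ("logo", "35-54"),
    ("navigation", "35-54"),
    ("logo", "18-34"),
    ("promotional box", "35-54"),
]

# Overall tally: how many participants noticed each element first.
first_noticed = Counter(element for element, _ in observations)

# The same tally broken down by demographic.
by_age_group = Counter(observations)

for element, count in first_noticed.most_common():
    print(f"{count} participants noticed {element} first")
```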

Asking non-suggestive questions like: 'Visit website X. Where would you expect to find Y?' lets the participant explore a website or application in a way that speaks to their own learned behaviour. The goal is to get out of their way and remove any potential nudge into a certain response or behaviour. It takes practice.

09. Curate your collected data

The purpose of UX testing is to generate potential solutions that will have a very real, positive impact on an organisation. Collecting data can validate or invalidate the potential solutions. The first step is to curate this information in a document and basic presentation. You don't want to send a 40-page report without a few presentation slides or a one-page overview of bullet points. As impactful as this data may be, it's rare that someone will read through all the details. People's attention is fractured, and images and graphs can help by conveying a lot in very little space.

So graph the benchmarks, graph the scores and bullet point the key takeaways. Create an issue priority chart that separates quick and easy wins from longer-term solutions. If you're familiar with SWOT (strengths, weaknesses, opportunities, threats), that can be used here as well.

If you've used a solution that recorded video or audio, create a highlight reel three to five minutes long and play it at the start of your meeting or presentation. It's always humbling for a team to hear unfiltered criticism and it sets the stage for change and improvement.

10. Implement changes

And that finally brings us to change. As long as you have the data to support it, make recommendations based on what you've found. That's what the client is looking for in the end. They trust that you've done your job and followed these high-level steps to come out in the end with data-backed solutions. Just remember that once some of your suggestions have been implemented, always compare the results against the benchmarks you set for UX testing.

