Writing your own cross-browser polyfills

Addy Osmani recounts his experience of creating the cross-browser polyfill visibly.js and provides plenty of top tips on how you can create your own HTML5 polyfills, while avoiding the headaches developers often run into when coding them for the first time.

I believe it is our responsibility as designers and developers to both advocate for best practices and encourage others to make the leap to using modern features for a modern web. At the same time, we need to do our best to avoid leaving users with older browsers behind. Polyfills (a term coined by Remy Sharp to describe JavaScript shims that replicate the standard API of features found natively in new browsers, for browsers without such features) are one way of helping us achieve this.

In my opinion, we shouldn't have to give up the functionality a feature offers just because it isn't natively supported in some browsers. That call should rest on other factors instead, such as value and performance. Polyfills mean that, regardless of the browsers you target, the freedom to use a feature for the right reasons is in your hands, where it should be.

In this post, I'm going to recount my experience of creating a cross-browser polyfill along with the lessons learned along the way. I'll also give you some tips on how you can create your own polyfills and avoid some of the headaches developers often run into when coding them for the first time.

Why do developers write polyfills?

Writing a polyfill is a fantastic opportunity to learn more about the subtle differences between feature implementations in browsers, but the real reason most of us write them is that we have a genuine need, and a desire to know whether it can be done.

We want to be able to say 'Here's this really amazing new feature that's just become available, but we can already use it in production today without worrying about cross-browser compatibility'. Inclusivity on the web is important to us.

I can't speak for all developers, but if I had to guess I would imagine most polyfill authors prefer being able to use features in the present, rather than waiting a year or two for specifications to be finalised. It's this type of thinking that brought about html5shiv, CSS3Pie and other solutions that have paved the way for modern web technologies being possible on a grander scale.

Sometimes polyfill developers only want a subset of the behaviour that comes with truly native support, while at other times we may wish to polyfill the entire feature set. We can always do our best to achieve the latter, but getting it exactly 100 per cent right is often challenging, as many developers will attest.


Thanks to the likes of GitHub and Google Code, this is rarely a lasting issue. With a vibrant development community, if a polyfill we create becomes widely used, there's always the chance other contributors will help take it to the point where the differences between the polyfill and the native implementation are negligible.

Guidelines to getting started

As mentioned, the majority of developers that choose to write a polyfill do so because it fulfils a personal need. If, however, you find yourself with a surplus of free time and would like to contribute a polyfill back to the community, there are some useful guidelines you can follow to get started.

First, select a feature that's currently only supported in modern browsers but not legacy browsers and find out if a polyfill already exists for it.

Paul Irish maintains an active wiki of current polyfills and shims on the Modernizr repo, which I strongly recommend looking through. For many features in the current stable versions of Chrome, Opera, Safari and Firefox, there may well already be popular polyfills for older browsers. However, there are considerably fewer available for features in newer edge browsers such as Opera.next and the Chromium nightlies.

At the end of the day, even if a polyfill already exists for a particular feature, if you feel you could do a better job of implementing a solution than what's available, don't hesitate to go for it.

The next important item on your checklist should be the official specification for the feature you've chosen. There are a number of reasons to read it, including gaining a better understanding of how the feature should work and, more importantly, which methods and attributes the official 'native' API describes. Unless you feel it necessary to depart significantly from the spec, you'll want to follow this model as closely as possible.

There are many other concerns to bear in mind such as performance, value, loading mechanisms and so on but we'll be covering these items shortly.

Putting these ideas into practice

The feature I decided to write a polyfill for was the 'Page Visibility API'. The API is an experimental implementation of the W3C's Page Visibility draft, and the feature offers something particularly useful: if a developer would like their site to react or behave differently when a user switches from the current active tab (your site) to view another, the API makes the change in visibility very straightforward to detect.
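To give a feel for what the finalised, unprefixed form of the API looks like, here is a minimal sketch that reads `document.hidden` and listens for the `visibilitychange` event. The `watchVisibility` name is hypothetical, and the document is passed in as a parameter purely so the function can be exercised with a stub outside a browser; it deliberately ignores prefixed implementations.

```javascript
// Hypothetical helper: calls onChange(isHidden) whenever the page's
// visibility changes, using the unprefixed Page Visibility API only.
function watchVisibility(doc, onChange) {
  if (!('hidden' in doc)) {
    return false; // API not available; a fallback would be needed here
  }
  doc.addEventListener('visibilitychange', function () {
    onChange(doc.hidden);
  }, false);
  return true;
}

// Usage in a browser:
// watchVisibility(document, function (hidden) {
//   console.log(hidden ? 'Tab hidden: pause work' : 'Tab visible again');
// });
```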

We're all experienced in handling situations where we're required to create solutions for two distinct types of browsers:

- modern web browsers and

- legacy browsers.

With the Page Visibility API, only two browsers actually supported it natively at the time, and they didn't really fall into either of the cases above. The first (Chromium 13+) was a beta channel version of Google Chrome, whilst the second (IE10PP2) was still in the platform preview phase. This meant I also needed to cater for a third scenario: 3) bleeding-edge browsers.

This category goes beyond modern browsers as there are typically only a minority of developers actively using them. This, however, didn't put me off working on a polyfill for the Page Visibility API that could work in all browsers. Because 3) already had native support for the feature, it was relatively straightforward to cater for calls to it through the polyfill.
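For browsers without native support, visibility has to be approximated. One common heuristic, used here purely as an assumption for illustration and not necessarily what visibly.js does, is to treat window `blur` as "hidden" and `focus` as "visible". `createVisibilityFallback` is a hypothetical name; note that `blur` also fires when another window merely takes focus, so this only approximates true visibility.

```javascript
// Hypothetical fallback: approximates page visibility by treating window
// blur as "hidden" and focus as "visible". Not equivalent to the real API.
function createVisibilityFallback(win) {
  var state = { hidden: false },
      listeners = [];

  function update(hidden) {
    if (state.hidden === hidden) {
      return; // ignore repeated focus/blur events
    }
    state.hidden = hidden;
    for (var i = 0; i < listeners.length; i++) {
      listeners[i](hidden);
    }
  }

  // IE8 and earlier only offer attachEvent with 'on'-prefixed names.
  function listen(type, fn) {
    if (win.addEventListener) {
      win.addEventListener(type, fn, false);
    } else if (win.attachEvent) {
      win.attachEvent('on' + type, fn);
    }
  }

  listen('blur', function () { update(true); });
  listen('focus', function () { update(false); });

  return {
    isHidden: function () { return state.hidden; },
    onChange: function (fn) { listeners.push(fn); }
  };
}
```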

Back-filling support for the feature in the other browsers did, however, highlight some interesting caveats and lessons that you might find useful should you decide to write your own polyfill in the future. Some of these tips are fairly simple, whilst others are quite the opposite!

Feature detection

Prefixes

When browser vendors decide to implement features or standards that have yet to be finalised, they commonly release them using a prefix specific to their browser. Chrome and Safari, which rely on the WebKit rendering engine, use 'webkit', Firefox uses 'moz', Opera 'o' and Microsoft 'ms'. One should also consider the non-prefixed case (ie when a feature has been finalised by standards bodies and is implemented as such). Depending on what you're attempting to write a polyfill for, you may find a prefix tester such as the one I wrote below useful.

```javascript
/*
 * vendorPrefix.js - Copyright(c) Addy Osmani 2011.
 * http://github.com/addyosmani
 * Tests for native support of a browser property in a specific context.
 * If supported, a value will be returned: '' when the unprefixed property
 * exists, or a prefix such as '-webkit-'. Returns false otherwise.
 */
function getPrefix(prop, context) {
  var vendorPrefixes = ['moz', 'webkit', 'khtml', 'o', 'ms'],
      upper = prop.charAt(0).toUpperCase() + prop.slice(1),
      len = vendorPrefixes.length,
      i, q, capitalised;

  // Finalised features are exposed without a prefix.
  if (prop in context) {
    return '';
  }

  for (i = 0; i < len; i++) {
    q = vendorPrefixes[i];
    capitalised = q.charAt(0).toUpperCase() + q.slice(1);
    // DOM properties use a lower-case prefix (webkitHidden), whereas style
    // properties may use a capitalised one (MozTransform), so test both.
    if ((q + upper) in context || (capitalised + upper) in context) {
      return '-' + q + '-';
    }
  }
  return false;
}

// LocalStorage test
console.log(getPrefix('localStorage', window));
// Page Visibility API
console.log(getPrefix('hidden', document));
// CSS3 transforms
console.log(getPrefix('transform', document.createElement('div').style));
// CSS3 transitions
console.log(getPrefix('transition', document.createElement('div').style));
// File API test (very basic test, ideally check against 'File' too)
console.log(getPrefix('FileReader', window));
```

There are two different ways you can approach prefix testing against a particular feature.

If you wish to future-proof your polyfill in case other browser vendors also implement a feature early, you can test against all of the above prefixes. If, however, you intend to maintain your polyfill in the long term (and can update it as new implementations ship), you could test only against prefixes for the browsers currently known to support the feature you are polyfilling (in my case WebKit/Chrome and IE). It's effectively a micro-optimisation to avoid unnecessary testing; at the end of the day, which of these you opt for is entirely your choice.
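Applied to the Page Visibility API, the second, targeted approach might look roughly like this sketch, which checks only the unprefixed `hidden` property plus the `webkit` and `ms` prefixes. `getVisibilityPrefix` is a hypothetical name. The trade-off is that a later `mozHidden` implementation would go undetected until the list is updated.

```javascript
// Hypothetical targeted test: only checks the unprefixed property and the
// prefixes of browsers known (at the time) to ship the Page Visibility API.
function getVisibilityPrefix(doc) {
  var prefixes = ['webkit', 'ms'];
  if ('hidden' in doc) {
    return ''; // finalised, unprefixed implementation
  }
  for (var i = 0; i < prefixes.length; i++) {
    if ((prefixes[i] + 'Hidden') in doc) {
      return prefixes[i];
    }
  }
  return null; // not supported natively (or by an unlisted vendor)
}
```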

Support

Establishing the vendor prefix, however, is often just the first step in feature detecting unfinalised features. In this section I'm going to talk about feature detecting both finalised and unfinalised features, as this will probably be of greater benefit. There are a number of approaches to detecting whether a feature is supported, not all of which are applicable in all cases.

These include testing:

- If a property of the feature exists (ie is supported) in the current window

- If a property of the feature exists in the current document

- If a property of the feature or an instance of it can be created as a new element in the current document

- If a property or attribute of the feature exists within a particular element (eg the 'placeholder' attribute).
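The four kinds of test listed above might be sketched as follows. The feature choices (FileReader, `hidden`, canvas `getContext`, the `placeholder` attribute) are illustrative assumptions, `detectFeatures` is a hypothetical name, and `win`/`doc` are passed in so the checks can run against any context.

```javascript
// Hypothetical examples of the four detection styles listed above.
function detectFeatures(win, doc) {
  return {
    // 1. A property exists on the window
    fileReader: 'FileReader' in win,
    // 2. A property exists on the document
    pageVisibility: 'hidden' in doc,
    // 3. A newly created element exposes the feature's API
    canvas: !!(doc.createElement('canvas').getContext),
    // 4. An attribute is reflected as a property on a particular element
    placeholder: 'placeholder' in doc.createElement('input')
  };
}
```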

This is by no means a comprehensive list. However if you would like to learn more about feature testing, I'd recommend looking through both the Modernizr and has.js feature detection tests as they're full of interesting examples of how these tests can be correctly approached.

Browser features that have been finalised by standards organisations generally result in property, attribute and method names without any vendor prefix attached to them. This is why the prefix test above also checks whether the property exists, without a prefix, in the context being tested.

Unfinalised features, as we've seen, often do have prefixes appear before their properties. To test for edge features using prefixes, it's necessary in some cases to 'prefix' the name of a property or event with the corresponding browser prefix the vendor has implemented the feature with.

In most cases this just means prepending the prefix we detected earlier. However, it's very important that you check any vendor documentation available to find out whether there are any caveats that need to be kept in mind. For example, there may be cases where the naming for a finalised feature is elem.something, whilst this could be camel-cased for earlier implementations as elem.mozSomething etc.
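As an example of such naming caveats, early Page Visibility implementations camel-cased the prefixed property (`webkitHidden`) but simply concatenated the prefix onto the lower-case event name (`webkitvisibilitychange`). The hypothetical helpers below encode those two rules; whether they hold for any other feature is an assumption to verify against vendor documentation.

```javascript
// Hypothetical helpers: build vendor-specific names from a detected prefix
// ('' when the feature is unprefixed).
function prefixedProperty(prefix, name) {
  if (!prefix) {
    return name;
  }
  // Properties are camel-cased: webkit + hidden -> webkitHidden
  return prefix + name.charAt(0).toUpperCase() + name.slice(1);
}

function prefixedEvent(prefix, name) {
  // Event names are concatenated in lower case: webkitvisibilitychange
  return (prefix + name).toLowerCase();
}
```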

Beyond this, the only other thing to bear in mind is that your feature tests absolutely need to work cross-browser. This might seem like a trivial point to make given the examples of tests above. However, with some features these do increase in complexity and the last thing you want is a broken feature detection process.

JavaScript: The Quirky Parts

A polyfill developer's greatest challenges often involve normalising the differences in feature implementations between browsers. This also applies at the language level, specifically JavaScript. It's imperative to remember that there are often discrepancies in implementations of ECMAScript 3 and 5 not only between browsers but also between versions of those browsers (the worst offender here, of course, being Internet Explorer).

Fortunately, there are a number of resources you can use as a reference point should you be a little rusty on vendor-specific JavaScript issues. MDN, the official specifications, QuirksMode.org and Dottoro were particularly useful when I was working on my polyfill. Stack Overflow is of course another great resource, but remember not to take any advice for granted: test suggestions or assumptions made by the community to ensure they are in fact correct.

I'm not ashamed to say I ended up refactoring my polyfill eight or nine times. In many cases it came down to very minor things I had forgotten because I'd become so used to using Dojo, jQuery and other libraries over the years. Under the hood, these libraries and frameworks normalise most of IE's quirks, so developers are shielded from them. However, it's still essential to be aware of limitations that might affect the time taken to implement a solution.

Writing polyfills is sometimes relatively trivial, and at other times you're painfully reminded of why it's important we reach a point where all browsers share more or less the same level of standards compliance across the board.

For example, did you know that at the time of writing Chrome 13+ and Firefox 4+ are the only two major browsers that are fully ES5 compliant (a support table summarising compatibility is available for reference)? This means that if developers wish to use ES5 features in their applications, they'll need to include a shim such as the ES5 shim to provide the same capabilities in browsers that aren't feature complete. This is more of an issue for legacy browsers than for browsers that merely lack, say, strict mode.

For me, the biggest issue with this was that it directly affected the size of my implementation, so in the end I opted to stick predominantly to ES3 approaches to solving my problems. If this had been a full-blown application, I probably wouldn't have been as concerned, but a polyfill needs to do its best not to add unnecessary weight to anyone's pages.
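As a small illustration of that trade-off: `Array.prototype.indexOf` is an ES5 addition missing from IE8 and earlier, so rather than bundling an ES5 shim for one method, a polyfill can fall back on an older-style loop. `indexOf` here is a hypothetical standalone helper, not a patch to the built-in.

```javascript
// Hypothetical ES3-compatible alternative to Array.prototype.indexOf,
// avoiding a dependency on an ES5 shim for a single method.
function indexOf(list, item) {
  for (var i = 0, len = list.length; i < len; i++) {
    if (list[i] === item) {
      return i; // first strictly-equal match
    }
  }
  return -1; // not found, mirroring the native method
}
```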

Story from the trenches

Specific to my polyfill, there's one particular story that might be of interest. We all know that the addEventListener() method allows you to register event listeners on single targets in a browser, such as the document or window, and it's fairly easy to use. Whilst fully supported in Firefox, Chrome, Safari and Opera, it's only available in IE9 and above, which means an alternative method (Microsoft's attachEvent()) must be used instead for older versions of IE. This usually only means a few extra lines of code to test which option to use.
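Those few extra lines might look something like this sketch. `addEvent` is a hypothetical helper name; the wrapper also works around the fact that, inside an attachEvent handler, `this` refers to the window rather than the element.

```javascript
// Hypothetical cross-browser listener helper: standard addEventListener
// where available, attachEvent for IE8 and earlier.
function addEvent(target, type, handler) {
  if (target.addEventListener) {
    target.addEventListener(type, handler, false);
  } else if (target.attachEvent) {
    // attachEvent expects an 'on'-prefixed name and calls handlers with
    // `this` set to window, so bind the target explicitly. Older IE also
    // exposes the event as window.event.
    target.attachEvent('on' + type, function (e) {
      return handler.call(target, e || window.event);
    });
  }
}
```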

The real problems arise when you want to fire events that can be listened to cross-browser, particularly if there's a dynamic element to your solution. Most well-behaved browsers support the createEvent(), initEvent() and dispatchEvent() methods if you wish to fire a custom event such as 'onPageVisible'. Older versions of IE, however, require that you opt for createEventObject() and fireEvent() instead. This caused me all sorts of headaches because I wanted to allow developers to listen for visibility events easily in a cross-browser manner *without* needing to implement a layer of abstraction to normalise IE's quirks. That idea went out the window due to time constraints.
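The two event models can be sketched side by side as below. `triggerEvent` is a hypothetical name, and `doc` is the target's document. Newer browsers also offer the `Event` constructor, but this sticks to the createEvent/initEvent route described above. The comment about legacy IE rejecting unknown event names reflects commonly reported behaviour rather than anything stated in this article, and is exactly why custom types are awkward there.

```javascript
// Hypothetical helper: fire an event on `target` using whichever event
// model the browser offers.
function triggerEvent(doc, target, type) {
  var evt;
  if (doc.createEvent) {
    // Standards model (including IE9+): create, initialise, then dispatch.
    evt = doc.createEvent('HTMLEvents');
    evt.initEvent(type, true, true); // bubbles, cancelable
    return target.dispatchEvent(evt);
  }
  if (doc.createEventObject) {
    // Legacy IE model: fireEvent() takes an 'on'-prefixed name and is
    // generally reported to reject event names it doesn't already know,
    // so a custom type such as 'pagevisible' may need another mechanism.
    evt = doc.createEventObject();
    return target.fireEvent('on' + type, evt);
  }
  return false;
}
```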

If you're implementing behaviour that is relatively complex, it can be a challenge getting a succinct, consistent solution that works just as well with Microsoft's equivalents as with their counterparts.

For this reason, do try to spend some time early on planning out your solution, as this will save you countless hours of debugging in the long run.

Performance

As experienced advocates of the web, we're all aware of some basic rules for optimising site performance: use fewer HTTP requests, minify your scripts and stylesheets and so on. What developers and designers unfortunately don't have as much experience with is performance-testing their JavaScript code. As the majority of polyfills rely heavily on JavaScript, it's essential that we stress test our code to avoid introducing slow, inefficient routines into other people’s pages, as this could well negate the benefits offered by our polyfills.

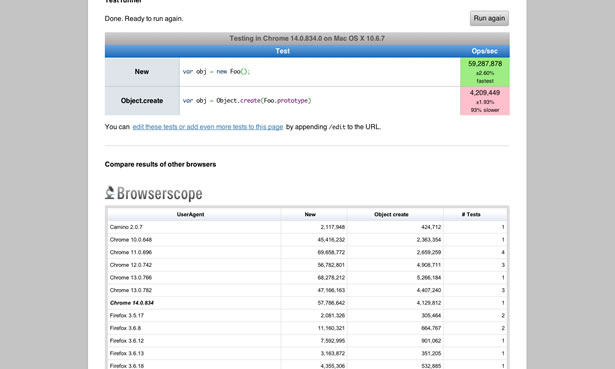

Gauging the performance of scripts is now significantly more straightforward than ever with the availability of online tools such as jsPerf.com (a creation of Mathias Bynens and JD Dalton), which is made possible by Benchmark.js and BrowserScope. jsPerf allows developers to create tests for their JavaScript snippets, which can then be shared and run by literally anyone with a web browser. The results of these tests are then aggregated to provide a comprehensive view of how well a snippet performed.

jsPerf runs each test repeatedly until it reaches the minimum time required to get a percentage uncertainty for the measurement of roughly one per cent. When you run a test, you'll see a results table with a column labelled 'ops/second': this refers to the number of times a test is projected to execute in a second. The number of iterations varies greatly, but tests are usually run for a minimum of five seconds or five runs, both of which can be configured. Higher numbers are better, and the fastest snippet will usually appear in green.
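To make the 'ops/second' figure concrete, here is a deliberately crude, hypothetical sketch: run a snippet in a loop until a minimum time has elapsed, then divide the iteration count by the elapsed seconds. Benchmark.js, which powers jsPerf, does far more (calibration, repeated samples and statistical error margins), so treat this only as an illustration of the metric.

```javascript
// Hypothetical, crude ops/second estimate. minMs must be greater than 0.
// Uses new Date().getTime() rather than ES5's Date.now() for older browsers.
function opsPerSecond(fn, minMs) {
  var count = 0,
      start = new Date().getTime(),
      elapsed = 0;
  while (elapsed < minMs) {
    fn();
    count++;
    elapsed = new Date().getTime() - start;
  }
  return count / (elapsed / 1000);
}

// Usage: compare two candidate implementations of the same routine.
// opsPerSecond(function () { /* snippet A */ }, 1000);
// opsPerSecond(function () { /* snippet B */ }, 1000);
```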

So, how do we go about performance testing a polyfill?

This really depends on how we've structured our code, but one can generally create a test for each major function or method that's been written and test to establish whether there are obvious issues with an implementation such as considerable differences in performance stats between browsers.

Remember that the figures for browsers with native support can and will likely be higher than those for browsers that have been polyfilled so it's a good idea to focus on benchmarking in modern and legacy browsers instead.

Should alternatives to your polyfill already exist on GitHub, you may also find it useful to compare the performance of both to find out whether there's an obvious area your implementation could improve on, or vice versa. At the end of the day, we want to create solutions that offer value and perform well, and jsPerf can help you with that.

Considering Value

One consideration that isn't always obvious to us when deciding to write a polyfill is whether the value it offers is significantly worth:

- Our time (and the time of others) implementing and testing a solution

- Other developers adding it to their sites

- Maintaining the solution until the majority of browsers used to access the web are both modern and support the feature you are polyfilling natively (should you choose to do so)

Is it worth our time?

With respect to the first of these points, if the reason you are implementing a polyfill is because you have a need for it at a production level, either at work or on a personal project, it's likely that you can justify the time spent working on it if it's going to offer capabilities which can improve the user experience.

Should there already exist a polyfill out there for the feature you wish to use, you may wish to consider whether the solution does everything you desire, offers too much (which may affect the size of the solution) or whether you would just prefer a slightly different approach.

Remember that you always have the option to fork an existing solution and trim it down if needed.

In my case, I was aware (via the Modernizr polyfill page) that there already existed a polyfill for the Page Visibility API. After having reviewed it, however, I concluded that I only required a subset of what it offered and could probably devise a solution for my needs (in my own preferred style) in about 50 per cent fewer lines of code. My justification for writing the polyfill was both for a desire to learn and possibly for use at a production level later if it proved sufficiently stable.

Is it good enough for other developers to use?

Establishing whether your polyfill is worth other developers using is a very interesting dilemma. As intrigued developers we regularly create random snippets or GitHub gists for the sake of experimentation, but a reliable polyfill should ideally:

- Offer developers a solution superior to what they might be able to code themselves in a short space of time (ie value)

- Perform optimally (see the section on performance testing)

- Not consume a considerable amount of space: few developers will use a 100KB polyfill if the overall value added is negligible

- Be reasonably documented

- Ideally, come with its own unit tests

I've seen quite a few widely-used polyfills that do not necessarily address the last two of these points. However, at minimum a developer should ensure their code is easily readable if they don't have the bandwidth to document their solution or write unit tests for it.

Can we really maintain it?

This factor is often downplayed when releasing open-source solutions. However, I would recommend bearing it in mind for any work you release on the web.

On a weekly basis, I (like many others) receive a number of emails from developers wishing to use plug-ins or scripts I've written in the past. In most cases they're also looking to do something specific, custom or completely new with them, and unfortunately I don't always have the free time to assist with these requests.

The mistake I made was not factoring in what might happen if something I wrote became 'popular'. The lesson I'd like to share here is that if you don't feel you have the bandwidth to address future issues or requests related to your code, be sure to state clearly what level of maintenance and support will be available, either on your project page or in your repo's readme.

This lets developers know that it would make more sense for them to fork your solution or submit pull requests for new features, rather than leaving comments that may never be followed up or expecting you to work on new features anytime soon. It's just a courtesy.

As much as I've stressed this last point, don't let it discourage you in any way from writing your own polyfills. It's well worth the learning experience, even if you aren't able to offer support for it in the long term.

Loading mechanisms

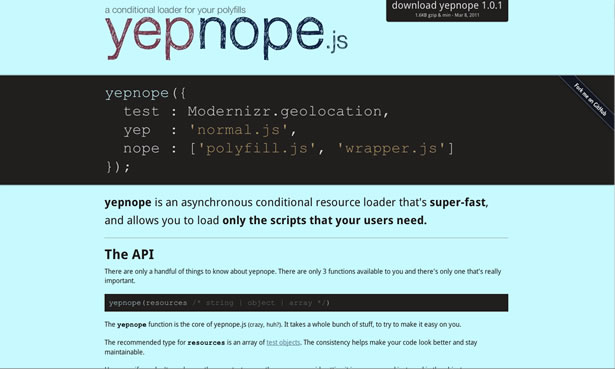

There are a number of valid mechanisms for loading polyfill scripts. However, it can be beneficial to consider how yours is likely to be loaded, as this may influence the implementation. I personally use yepnope.js (a conditional script loader) for loading mine, although there are many alternatives.

At its core, yepnope provides a simple means to define tests for conditions, which must be satisfied in order for a particular script to be loaded. If the result of the test is true (ie 'yep'), one might load 'natively-supported.js' whilst false ('nope') could load 'polyfill.js'.
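With yepnope itself, that condition is expressed as an object of the form `yepnope({ test: ..., yep: 'natively-supported.js', nope: 'polyfill.js' })`. To show the underlying idea without the library, here is a hypothetical, much simpler stand-in that picks one script based on a test and injects it; yepnope does considerably more, such as preloading and completion callbacks.

```javascript
// Hypothetical minimal conditional loader illustrating the yep/nope idea.
function loadScript(doc, src) {
  var script = doc.createElement('script');
  script.src = src;
  doc.getElementsByTagName('head')[0].appendChild(script);
  return script;
}

function conditionalLoad(doc, test, yep, nope) {
  var src = test ? yep : nope; // 'yep' when the test passes, else 'nope'
  if (src) {
    loadScript(doc, src);
  }
  return src;
}

// Usage in a browser:
// conditionalLoad(document, 'hidden' in document,
//                 'natively-supported.js', 'polyfill.js');
```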

Remember that in many cases, a self-encapsulated polyfill will usually have its own tests to determine whether a feature is natively supported or requires the polyfill routines to be used instead. If you've opted to intercept the native API calls for a feature and provide a generic layer of abstraction around the API, for example, to provide a cross-browser feature-set with slightly different method names, you've actually created a shim rather than a polyfill. I've seen developers interchange the terms quite frequently so try not to get bogged down with the naming conventions too much.

yepnope is intriguing from a performance perspective, as it provides an opportunity to perform feature detection higher up in the chain, before the polyfill or shim itself is even loaded. This leaves developers with a decision to make: a) should the solution include its own feature detection tests, b) should it rely on the user to define their own, or c) should it rely on something like Modernizr for the tests instead?

In my opinion, structuring your polyfill loosely enough to support all of the above is the best option. I haven't had a chance to do so with my solution just yet. However, Remy Sharp's storage polyfill is a great example of how to define feature tests in a loose fashion: https://gist.github.com/350433 (note that the feature detection in this gist can be improved as the specs state errors may be thrown simply accessing window.localStorage; however it is still an excellent point of reference). If you get an opportunity to review it, you'll notice that his tests surround the polyfill meaning they can easily be removed and replaced higher up as a yepnope conditional test instead.
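A sketch of both ideas together: a storage test that tolerates environments where merely touching `localStorage` throws, kept outside the polyfill body so it could later be replaced by a yepnope or Modernizr condition. `hasLocalStorage` and the test key are hypothetical, and this is not a copy of Remy's gist.

```javascript
// Feature test that tolerates browsers (or privacy settings) where accessing
// or writing to localStorage throws. `win` is passed in for testability.
function hasLocalStorage(win) {
  var key = '__storage_test__';
  try {
    var storage = win.localStorage;
    storage.setItem(key, key);
    storage.removeItem(key);
    return true;
  } catch (e) {
    return false; // missing, disabled, or access threw
  }
}

// The test wraps the polyfill, so it can later be removed and replaced
// by a conditional higher up without touching the polyfill itself.
if (typeof window !== 'undefined' && !hasLocalStorage(window)) {
  // ...storage polyfill would be defined here...
}
```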

Should feature detection be tightly coupled with your polyfill implementation (eg prefix detection also being required for event and attribute names), there's nothing wrong with having your own tests. Just be aware that if a developer opts for a solution like yepnope, they may end up performing the same tests twice (once as a conditional and again inside your polyfill). Good documentation with guidelines on how best to load your polyfill can usually help avoid many of the minor issues surrounding this.

The importance of documentation

Documentation is often one of the first things to fall out of project scope once we find ourselves constrained on time. This applies to polyfills as much as any open source project, but it's important to do your best to make documentation more than a second-class citizen in case other designers or developers begin using your implementation.

Many polyfills and shims are relatively short, so the first thing you can do is ensure your code is easily readable. Prioritise readability over terse code where possible, and comment your code so that, regardless of a developer’s level of skill, they'll be able to make minor adjustments without requiring much assistance.

What might you consider important to document? Developers wishing to use your polyfill will be interested in knowing how closely it follows the official specification. Does it offer a 1:1 representation of the API defined by the specification? If not, which methods or features does it actually expose? Does the solution have any caveats? Do you offer any additional features that might make using your solution preferable to someone writing their own?

Documentation should ideally attempt to answer the most frequently asked questions users may have about your implementation and, if done well, may limit the number of questions you receive about how X or Y works. There are polyfills which, if used extensively and applied to a large number of elements in older browsers, will actually result in blocking. Rather than having your users discover this first hand, test your solution adequately and be open about the type of performance users can expect to see with it. They'll appreciate being told upfront about your implementation’s limitations.

Whilst not directly linked to documentation, also include a minified version of your solution in either your repo or official releases. This will allow developers to instantly discover the file-size cost to including your implementation in their pages (something I regularly find myself checking when looking through the Modernizr polyfill list).

Again, you want to make it as easy as possible for a developer to decide whether your solution is worth using or not. They'll thank you for it in the long run!

The untestables

One useful thing to be aware of is that there are a number of browser features that cannot be detected (or are very difficult to detect). These include the HTML5 readyState and the webforms UI datepicker. Modernizr actually maintain a wiki page on untestables, which I'd recommend checking out.

These features are difficult to detect reliably, as testing for them relies on UA sniffing, browser inference and other less than accurate approaches. If you're up for a challenge, you can always try your hand at a cross-browser approach to solving an untestable (and please update the wiki with your findings if so), but it's useful to be aware of them in case you find yourself attempting to polyfill a feature that's been marked as such.

Polyfill testing

Unit testing

To wrap up, I'm going to briefly cover polyfill testing starting with the importance of unit tests. Unit testing is an approach to testing the smallest testable portion of a script or application and ensures that isolated methods or features function as expected. Unit tests for your polyfills (regardless of whether you opt to use Jasmine, QUnit or another testing framework for them) should reduce risks, be easy to run and be easy to maintain as the polyfill evolves or changes.

They can, however, be tricky to run reliably. For example, a developer might wonder whether they should create a test for an IE fallback that can run in, say, Chrome. In my opinion, the answer is no. If you're unable to accurately simulate the events that testing directly in IE would require, just split your tests into sets for edge, modern and legacy browsers and ensure that each set runs as expected in just those browsers. It's equally important that you have access to all of these browsers and test in them correctly.
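The split described above could be organised along the lines of this framework-free sketch; in practice QUnit or Jasmine would replace `runSuite`. The tier rules (any native or prefixed `hidden` means edge, `addEventListener` means modern, otherwise legacy) and all names here are assumptions for illustration.

```javascript
// Hypothetical sketch: tests grouped by browser tier, with only the group
// matching the current environment being run.
var testSuites = {
  edge: [],    // native Page Visibility API (prefixed or not)
  modern: [],  // polyfilled via addEventListener
  legacy: []   // polyfilled via attachEvent (IE8 and earlier)
};

function detectTier(doc, win) {
  if ('hidden' in doc || 'webkitHidden' in doc || 'msHidden' in doc) {
    return 'edge';
  }
  return win.addEventListener ? 'modern' : 'legacy';
}

// Runs every test in a tier and returns a list of failure messages.
function runSuite(tier) {
  var tests = testSuites[tier],
      failures = [];
  for (var i = 0; i < tests.length; i++) {
    try {
      tests[i].fn();
    } catch (e) {
      failures.push(tests[i].name + ': ' + e.message);
    }
  }
  return failures;
}

// Usage in a browser: runSuite(detectTier(document, window));
```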

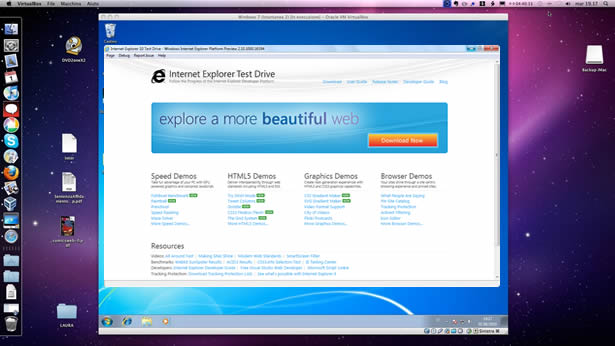

Cross-browser testing

We assume that all developers test their code cross-browser in the same reliable manner, however this is often not the case.

For example, I've recently come across a number of developers that believe IETester or IE's Document Mode provide the same 1:1 rendering and scripting experiences one may get when using dedicated versions of IE on Windows. This is unfortunately quite incorrect. I've conducted tests on both IETester and the IE Document/Browser Mode in the past, which resulted in layouts that appeared completely different in both when compared to the original browser.

For this reason, I don't recommend using either for testing whether your polyfill works or not. You need something more reliable to avoid the risk of false positives.

So, what do I consider an ideal testing setup? I personally use VirtualBox for Mac with a Windows 7 image for IE 6, 8, 9, 10 and all other modern browsers. IE9 and 10PP2 can currently be run independently without any issue, but for 6 and 8 I use these standalone IE executables. You'll notice that I omitted IE7 from the list above. For IE7 testing you'll need a Windows XP image and a copy of the original IE7 install (which you can find via Google relatively easily).

It may sound like a pain getting all of the above set up. However, once they are, you can easily leave VirtualBox running in the background ready for whenever you need it.

Conclusions

Whilst there are a plethora of other projects you could contribute your time to, if polyfills interest you I'd definitely encourage trying to write one around new features being introduced to browsers. The community is always looking for new and useful solutions that help break down the borders to feature accessibility and as I've stated, it's an excellent learning exercise.

As a closing note, I would like to thank Mathias Bynens and Remy Sharp for their technical review of this post as well as Paul Irish for his many resources that helped ease my own experience in writing a polyfill.
