Adobe has been one of the biggest creative brands to embrace the potential of generative AI with Adobe Firefly, and in doing so has divided the creator community.

But Adobe's approach to including AI features in Photoshop and other software has been nuanced, and it has gone hand in hand with clear initiatives designed to protect artists and copyright. The idea is to add creator-first AI tools to its existing software and develop new AI-first tools, like Adobe Project Concept AI, while also building tools that protect ownership.

What is clear is that generative AI is here to stay, and many artists are using it in their workflows, like digital artist Guillermo Flores Pacheco, who creates detailed, colourful collages by combining Firefly with his regular methods. But as detailed in our 2025 digital art trends article, there are new laws and legal cases on the horizon that could curtail how AI is used and add copyright protections for artists.

In fairness to Adobe, it's ahead of the pack when it comes to protecting artists, and while its approach isn't always perfect, the direction of travel is welcome. With initiatives like Adobe Content Credentials and Project Know How, which track ownership and make clear what is and isn't AI-generated, Adobe already has more safeguards in place than other creative app developers.

Below, Alexandru Costin, Vice President of Generative AI and Sensei at Adobe, explains in detail the challenges facing artists, how AI can be used safely, what its benefits can be, and how Adobe is putting in place the tools to protect copyright in the AI era.

CB: What does the future look like for AI, art and copyright?

AC: Generative AI is already transforming the creative process, giving creators tools to push boundaries, work more efficiently, and realise their visions faster than ever. At Adobe, we believe AI is here to support and enhance human creativity, not replace it, by enabling artists to create their best work while ensuring they get full credit for it.

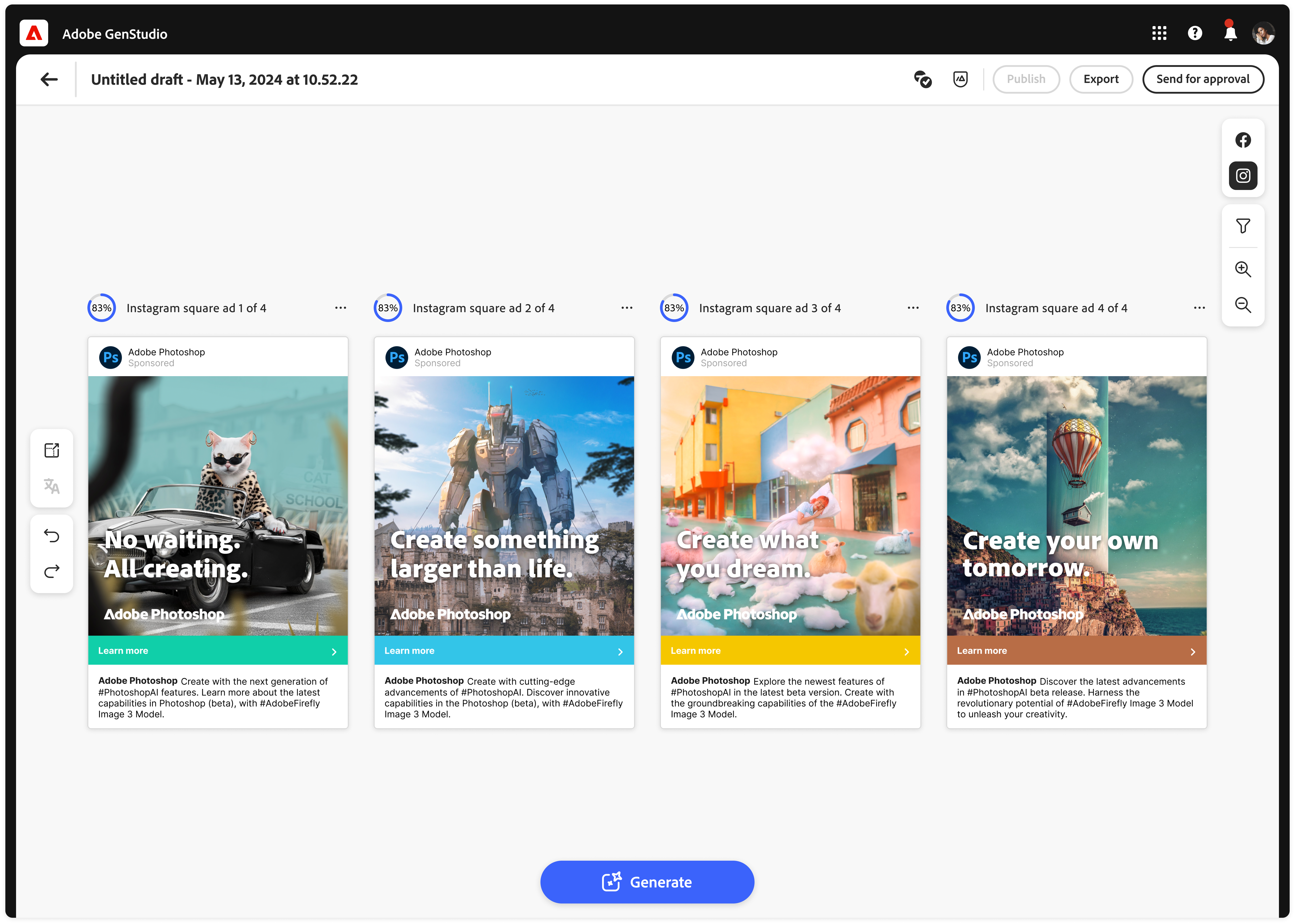

AI has the potential to democratise creativity, putting powerful tools in the hands of everyone, from professionals and students to small business owners and the world's largest enterprises. Take Adobe Express: its AI-powered features make it easy to adapt content for different channels, seamlessly apply branding, and even rewrite or translate copy in seconds.

However, as AI evolves, so do its challenges, especially around regulation, transparency, and intellectual property. Safeguards are critical to ensure that people trust AI and that creators feel their work is protected. Looking ahead, we’re committed to working with policymakers, creators, and the broader community to ensure AI accelerates creativity while safeguarding intellectual property and fostering trust.

CB: How does Adobe decide on the Gen AI Firefly features it integrates into its products? Does a new innovation need to deliver a real-world use case?

AC: Our products touch billions of people, which means the decisions about which features we integrate into our products, and how we do it, matter to us. Our core mission has always revolved around empowering individuals and businesses to unleash their creative potential. Key to this is focusing on generative AI features that help them be more creative and productive.

Our family of Adobe Firefly models have the power to augment and amplify human ingenuity by giving people new playgrounds for ideation and exploration. Firefly empowers people to realise their creative visions – even the most fantastical or surreal ones – with greater speed, agility, and precision.

At the same time, innovative features integrated into Adobe products are also reducing 'busy work', freeing creatives from tedious or time-consuming tasks like resizing images and localising outputs. One such example is IBM's recent Let's Create brand campaign, where Firefly was used to generate 200 assets and over 1,000 marketing variations in a matter of minutes.

It is equally important that we consider how we innovate. Responsible innovation is our top priority whenever we launch a new AI feature. Our AI Ethics principles, rooted in accountability, responsibility, and transparency, guide us in carefully evaluating every feature before it reaches our users.

For example, multi-layered impact assessments are key to spotting features that might unintentionally perpetuate harmful biases or stereotypes. That said, we know the job doesn’t end once a feature is live, so we’ve set up ways for users to share feedback, especially if they notice outputs that feel off or biased. This collaboration helps us fix things quickly and continue improving Firefly for our community.

CB: How important will things like tracing credentials and transparency become?

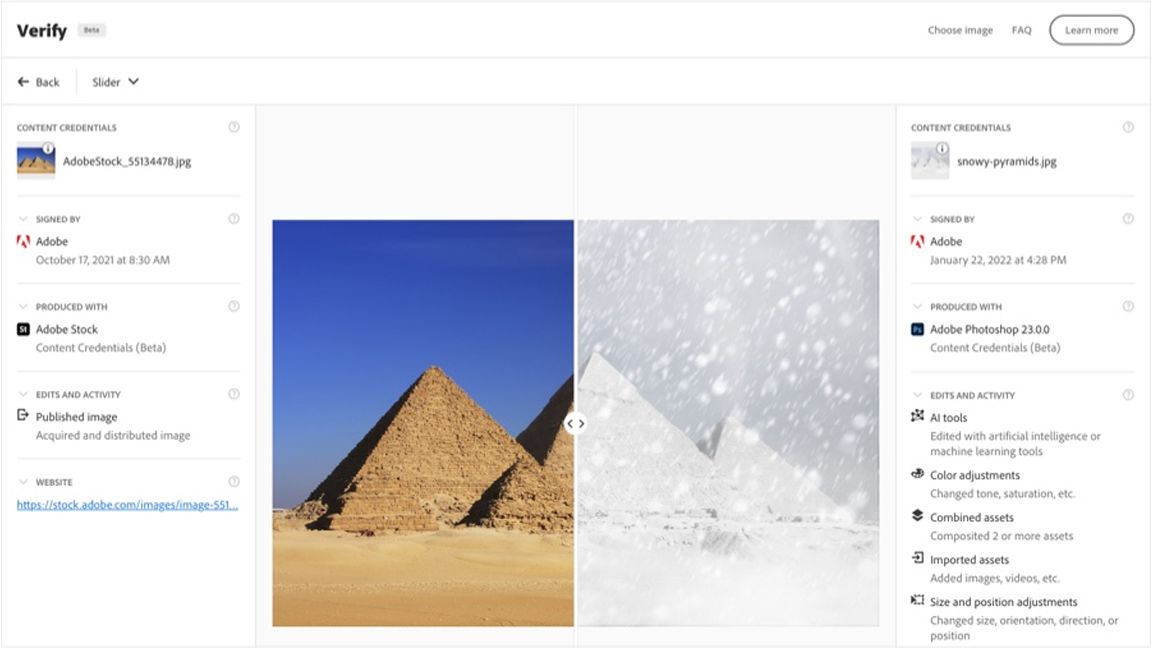

AC: Tracing credentials and transparency are becoming essential in today’s digital landscape. As the lines between real and synthetic or AI-generated content blur, provenance tools like Content Credentials play a critical role in helping to restore trust online.

Content Credentials are akin to a 'nutrition label' for digital content that anyone can attach to their work to share information about themselves and provide context about how it was created and edited. With this information at their fingertips, consumers can decide whether to trust the content they see online.

In a world where misinformation and deepfakes can cause real harm, the ability to trace a piece of content's provenance becomes essential. We imagine a world where checking for Content Credentials is as habitual as reading the nutrition label on food packaging. This shift represents more than technological progress: it's a reimagining of how we establish and sustain trust in the digital ecosystem.

CB: With many artists still concerned about Gen AI, what steps are you taking to ensure those using Adobe apps are confident and comfortable in their use?

AC: We understand that some creators are concerned about generative AI, and we're committed to addressing these concerns while fostering confidence in the tools we create.

We created Firefly for the creative community to give them more power, precision and control directly in the workflows they rely on every day. Adobe has always stood with the creative community, and we're continuing that tradition by ensuring our family of Firefly models is developed with accountability, responsibility, and transparency in mind. To date, Firefly has been used to generate over 16 billion images worldwide and, importantly, it's trained exclusively on licensed content, such as Adobe Stock, and public domain content where copyright has expired, so creators can trust the foundation of the technology.

We also understand that some people prefer not to use AI in their workflows. Adobe also offers non-AI-powered applications to give creators a choice and respect their preferences.

Our latest offering for our creative community is the Adobe Content Authenticity web app, which has a 'Gen AI Training and Usage Preference' setting, allowing artists to apply Content Credentials to their work to signal that they do not want it used to train other generative AI models on the market. The web app also allows creators to gain attribution for their work. We believe all models should respect creators' rights and preferences, and our approach reflects a deep respect for the creative community.

CB: What measures are in place to prevent Adobe's AI tools from unintentionally infringing on copyrighted works or mimicking the styles of specific artists without permission?

AC: Successfully resolving the copyright question needs careful consideration and collaboration from the public and private sector to ensure that ultimately it is the creator who wins. It’s also important to consider two distinct issues which include input (what model developers are using to train AI) and output (what types of images are being produced by an AI model).

AI is only as good as the data on which it is trained. Training on more data helps ensure the model is more accurate and less biased. Some model developers scrape the web to source their training data, including copyrighted materials. From a legal perspective, this is a question that is currently being considered in countries like the UK and by Congress in the US. At Adobe, we chose to train only on licensed content and public domain content where copyright has expired. Our model is designed to be commercially safe even in an evolving legal landscape.

When it comes to addressing creator concerns, we think it is more effective to focus on output because this is what consumers ultimately see in the marketplace. That's because some celebrities are worried about AI from a name, image and likeness perspective, and we've heard concerns from a vast array of creators (musicians, graphic artists, photographers, designers) about someone misusing an AI that has been trained on their work to impersonate their style and then using that output to compete with them in the marketplace. That doesn't seem fair. That's why we've advocated for a federal anti-impersonation right in the US that can give creators protection against these types of bad actors in the age of AI.

CB: How does Adobe engage with artists and the community to gain feedback and react to AI developments and concerns?

AC: Creators are at the core of who we are as a company and building a legacy of trust by supporting them through every evolution in creativity is a responsibility we take seriously. It’s why we constantly engage with our community to understand their concerns. These conversations don’t just guide our innovations; they ensure we’re creating tools that protect, empower, and enhance the creative journey.

An example of this is the Adobe Content Authenticity web app, which was designed for creators, by creators. We worked closely with our creative community at every stage of development, holding one-on-one and group listening sessions to understand their pain points, gathering feedback on key features, and refining the user experience to ensure the web app enhances their creative endeavours.

CB: How is Adobe protecting its users, and do you need other companies to adopt the same processes, and maybe Content Credentials as an industry standard, to ensure it works?

AC: We co-founded the Content Authenticity Initiative (CAI) in 2019, an initiative aimed at increasing trust and transparency online. Today, the CAI boasts more than 4,000 members, including major industry players such as global social media platforms, generative AI providers, media organisations, tech companies, camera manufacturers, civil society organisations and more. Adobe is also a founding member of the Coalition for Content Provenance and Authenticity (C2PA), a global standards group that evolves the technical specifications for Content Credentials.

We’ve seen increased adoption and mainstream implementation of Content Credentials from both the public and private sectors, including by Google, TikTok, OpenAI, Meta, LinkedIn, and BBC News. We believe the more places that Content Credentials technology shows up, the more valuable they become as a tool for fostering a trustworthy digital ecosystem.

Content Credentials are also supported in a range of Adobe products and properties, including Photoshop, Lightroom, Express, Stock, and Premiere Pro. Importantly, we automatically apply Content Credentials to assets generated with Adobe Firefly as part of our commitment to supporting transparency around the use of generative AI.

As we navigate a new digital frontier where generative AI offers new possibilities, but also brings uncertainty and challenges with deepfakes and deceptive content, we'll see an unprecedented push for transparency in digital content as the private and public sectors recognise the critical need for an industry-wide content provenance standard. Content Credentials will play a pivotal role in this shift.

To truly protect creators and address their concerns, collaboration between industry and government in working towards an industry-wide content provenance standard will become even more imperative. While Content Credentials may not be a 'silver bullet', they are a critical tool in restoring trust online. Coalescing around this tool can ensure that Content Credentials become the gold standard for transparency, authenticity, and trust in the digital age.

CB: Do you see added value in art with attached Content Credentials (in a similar way to a certificate of authenticity for traditional art)?

AC: Content Credentials redefine how value is created and shared in the digital art ecosystem, offering profound benefits to both artists and audiences. For creators, the value lies in enhanced protection, recognition, and visibility. For consumers, Content Credentials serve as a window into the art’s origins and story. Audiences can gain deeper insights into the creator’s identity, the creative process, and the tools or techniques used to bring the piece to life.

By connecting creators and viewers through an authenticated digital chain of custody, Content Credentials offer a dual value proposition. They protect and empower artists while educating and engaging audiences, setting a new standard for integrity and trust in the digital age.

CB: Can Content Credentials be edited or tampered with?

AC: Content Credentials are designed to be a robust and secure way to label digital content by combining three key technologies: secure metadata, watermarking, and fingerprinting. While each of these techniques alone might not be completely durable, together they create a reliable and tamper-proof system.

Secure metadata digitally signs information like when and where the content was created, who made it, and any edits made along the way. Watermarking adds an imperceptible stamp to the content that the human eye or ear can't detect. Fingerprinting assigns a unique identifier to the content based on its characteristics, allowing it to be matched to the original provenance information if needed.
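To make the secure-metadata and fingerprinting ideas a little more concrete, here is a minimal Python sketch. It is not Adobe's or the C2PA's implementation: real Content Credentials use certificate-based digital signatures defined by the C2PA specification, whereas this sketch substitutes a shared-secret HMAC for simplicity, and the fingerprint is a toy "average hash" over a small grid of grayscale values. Watermarking is not modelled. The key, function names and sample data are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret. Real Content Credentials use certificate-based
# signatures, not an HMAC key; this is a simplification for illustration.
SIGNING_KEY = b"example-secret-key"


def sign_metadata(metadata: dict, key: bytes = SIGNING_KEY) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the metadata."""
    payload = json.dumps(metadata, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_metadata(metadata: dict, tag: str, key: bytes = SIGNING_KEY) -> bool:
    """True only if the metadata is unchanged since it was signed."""
    return hmac.compare_digest(sign_metadata(metadata, key), tag)


def average_hash(pixels: list[list[int]]) -> int:
    """Toy perceptual fingerprint: one bit per pixel, set if it is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints (0 means a match)."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    manifest = {"creator": "Example Artist", "tool": "Photoshop", "edits": ["crop"]}
    tag = sign_metadata(manifest)
    print(verify_metadata(manifest, tag))  # True
    tampered = dict(manifest, creator="Someone Else")
    print(verify_metadata(tampered, tag))  # False

    # Two slightly different 4x4 grayscale grids, e.g. before and after recompression.
    original = [[10, 200, 30, 220], [15, 210, 25, 230], [12, 205, 28, 225], [11, 215, 35, 240]]
    recompressed = [[12, 198, 33, 218], [14, 212, 27, 228], [10, 207, 30, 223], [13, 213, 33, 238]]
    print(hamming(average_hash(original), average_hash(recompressed)))  # 0
```

Changing any signed field breaks verification, while the fingerprint tolerates small pixel changes such as recompression. That difference is why, in principle, a fingerprint can help re-link content to its provenance record even after the metadata has been stripped.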

The unique combination of these three techniques under a single solution, Content Credentials, presents the most comprehensive solution today for digital content provenance for images, audio and video.

Ian Dean is Editor, Digital Arts & 3D at Creative Bloq, and the former editor of many leading magazines. These titles included ImagineFX, 3D World and video game titles Play and Official PlayStation Magazine. Ian launched Xbox magazine X360 and edited PlayStation World. For Creative Bloq, Ian combines his experiences to bring the latest news on digital art, VFX and video games and tech, and in his spare time he doodles in Procreate, ArtRage, and Rebelle while finding time to play Xbox and PS5.