Generative AI is changing how video games are made, and ahead of the release of Unity 6 later this year, the platform's developer is setting out its approach.

While all eyes have been on Unreal Engine 5 in recent years, big things are expected from Unity 6. We covered some of the planned content in our explainer Unity: everything you need to know, but recently I interviewed Unity’s director of AI research Nico Perony to discover more about its approach to AI.

A clear focus is on enhancing workflows and enabling game developers to do more in the same length of time, while encouraging newcomers to Unity with the powerful Muse AI, an assistant designed to get artists and developers 'co-creating' with AI. Follow the instructions on Unity's dev site to see how Muse Chat can be downloaded to help you with a project.

As well as my interview below, you can find more information in the demo Unity gave at the Unity Developer Summit at GDC a couple of months ago, or in the more recent short demo (27 minutes in) shown at the latest Microsoft Build conference.

01. How have you approached integrating AI tools into Unity? Is there a theme to the kind of tools you're including and why?

Nico Perony: Unity is uniquely positioned to help creators succeed by injecting generative AI in their existing workflows. Unity AI (Unity Muse and Unity Sentis) is designed to work seamlessly with the ecosystem of Unity Editor, Runtime, data, and the Unity Network.

Going forward with Unity 6 (formerly LTS 2023), AI will be a major part of the techstream. Additionally, for Unity Muse, our AI models are built to allow game developers to create assets without worrying about challenges with IP and data ownership.

02. Could you provide an overview of the AI tools available within Unity for game development?

NP: Our goal with Unity Muse (AI tooling options) is to meet developers where they are, help them, and keep them in control. We want them to be able to iterate and experiment faster so they can find the fun in their games and experiences.

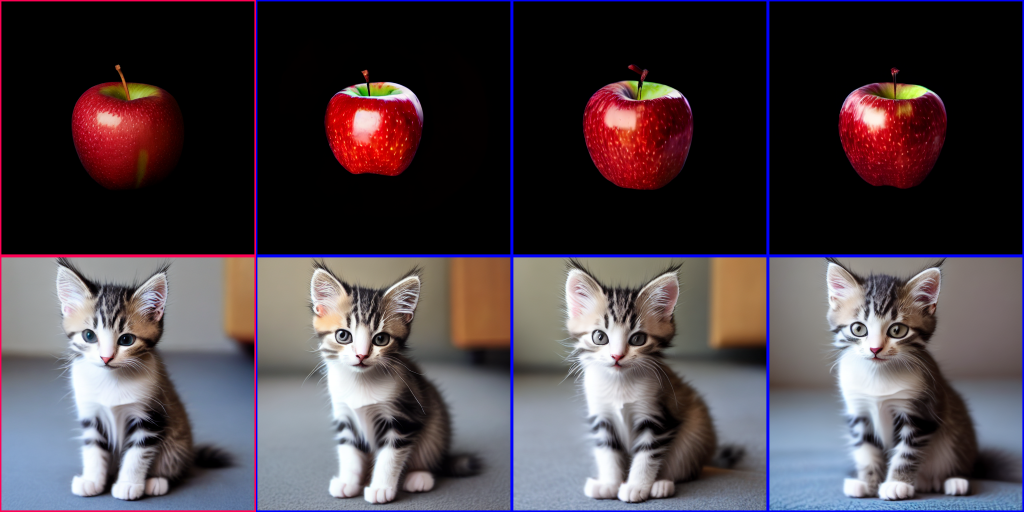

Muse Chat in the Editor lets you use natural language prompts to find exactly what you’re looking for (see our recent blog post). In seconds, you’ll have well-structured answers and instructions, and even project-ready code. Using the Muse Sprite capability, you can produce and modify 2D art directly in the Editor.

With the Muse Texture tool, you can also generate production-quality textures for 2D and 3D projects, in any style, all inside your project in the Editor. Just like our other tools, we expect to embed our AI tools – like Unity Muse – in the Unity workflow so it's there when you need it if a developer chooses to leverage AI in their workflow.

Unity Sentis enables developers to bring complex AI data models into the Unity Runtime to help solve complicated tasks and create new functionality in a game using AI models. Just like a physics engine is one of the tools to bring games alive, we also believe that you’ll need a neural engine to solve some complicated tasks and/or create new functionality in your game with AI.

Sentis is a cross-platform solution for embedding AI models into a real-time 3D application, so you can build once and deploy AI capabilities in your Unity app from mobile to PC and web to popular game consoles. Since you’re running your program on the user’s device, there’s no complexity, latency, or cost associated with model hosting in the cloud, and the data from your users stays safely within the app.

03. How does Unity ensure that its AI tools are accessible to developers with varying levels of experience?

NP: We have built the suite of AI tools to fully integrate with existing capabilities of the Unity Editor. Our creators do not have to use AI, but they will feel empowered and noticeably faster when they create with the help of AI. For beginners, it can be as simple as asking questions to Muse Chat while building a project in the Editor.

For more advanced users, they can train their own models in their framework of choice (for example PyTorch) and embed them as ONNX files in their build with Unity Sentis. We cater to all creators, whether they are just starting on their AI journey or well-versed in machine learning development.

04. How do Unity's AI tools cater to the specific needs of game developers?

NP: With Unity Muse and Unity Sentis, we are meeting game developers where they work, and that’s in the Unity Editor. We are aware of the multiplicity of AI tools and platforms existing out there, and we are bringing a select and ethical curation to Unity developers.

For example, with the bridge we have built between Unity Sentis and the popular AI platform Hugging Face (blog post), Unity creators can easily embed existing models in their application without having to worry about model discovery, optimization, and deployment.

05. How do Unity's AI tools adapt to different project scales, from indie games to large-scale AAA productions?

NP: Unity’s AI tools, like the rest of the Unity ecosystem, are made to be used at all stages of the game development life cycle, and by teams of all sizes. Tools like Muse Sprite, Muse Texture, or Muse Animate will make prototyping easier, especially for smaller teams.

Tools like Muse Chat or Unity Sentis will enable production teams to efficiently write and audit functional and performant code, and embed AI in the runtime of their application.

06. Can you discuss any ethical considerations that Unity takes into account when developing AI tools for creative industries?

NP: We are focused on managing our development of AI capabilities in a transparent and responsible manner. You can see that in our blog post on responsible AI. We also released a blog post showing how we curate and create ethical datasets to train the models powering Muse Texture and Muse Sprite.

Our approach is to think through how we can deliver tools that are easy for creators to use, responsibly sourced, and with output that creators can feel confident in using in their projects.

07. How does Unity address concerns about job displacement or changes in workflows due to the integration of AI tools?

NP: We are building AI tools to help creators when they are stuck or want more iterations, and to give them more tools they can use – if they choose. Our goal is to keep the creator in control and at the centre of creativity.

Just like our other tools, such as SpeedTree, which uses procedural generation to build plants and vegetation, we expect Unity Muse to be another tool in an artist's or developer's toolbelt.

You can preview Unity 6 now at the Unity Blog. If you want to get into game development, read our guide to the best laptops for programming as well as pick up some inspiration from the best indie games.

Ian Dean is Editor, Digital Arts & 3D at Creative Bloq, and the former editor of many leading magazines. These titles included ImagineFX, 3D World and video game titles Play and Official PlayStation Magazine. Ian launched Xbox magazine X360 and edited PlayStation World. For Creative Bloq, Ian combines his experiences to bring the latest news on digital art, VFX and video games and tech, and in his spare time he doodles in Procreate, ArtRage, and Rebelle while finding time to play Xbox and PS5.