The real versus the reel: the Persistence of Vision phenomenon

We take a look at the research of Douglas Trumbull and other pioneers into the Persistence of Vision.

Persistence of Vision (PoV, not to be confused with Point of View) is a phenomenon whose cause is still being determined, but it is one of the building blocks of the frame-by-frame technology used in film and games today.

It is currently thought to be caused by the phi phenomenon, the perception of continuous motion when viewing separate images in rapid succession, aided by the optical illusion of an afterimage. We've all seen the afterimage illusion – it's the glow that temporarily blinds us after looking at a bright light, or the colour shift that remains when we stare at an image like the one shown below.

Early animation devices such as the phenakistoscope, the zoetrope and the praxinoscope took advantage of PoV, but although moving-image technology has advanced from film to digital, acceptance of higher frame rates has limped far behind.

Technology is innovation, but advancements in entertainment tend to be uneven, moving here and not there, fearing the earthquake that topples above and realigns below. Yet as films with higher frame rates like The Hobbit and Avatar become the norm, the production of digital effects needs to be redefined to achieve the desired results.

Understanding how PoV affects this means understanding how we got here, from the projection methods of silent film to Showscan – the brainchild of filmmaker and VFX pioneer Doug Trumbull – and beyond.

Showscan's history

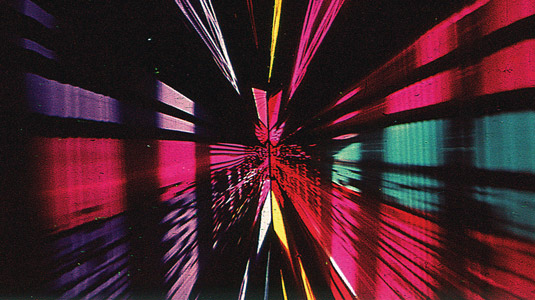

Now a writer, producer and director, Trumbull traces his quest to harness PoV back to his work on Stanley Kubrick's 1968 release 2001: A Space Odyssey, presented in 70mm Cinerama.

"That film stuck with me as a young budding filmmaker because it's so spectacular," he says. He knew he had to find a way to explore this kind of cinematic spectacle. But first he had to establish his career, and he started high with his directorial debut, Silent Running. The film was well received and earned him development deals at almost all the major studios, but over the next few years the projects derailed for a variety of business reasons, leaving Trumbull frustrated and broke.


Trumbull, however, is not so easily defeated. The right discussions with the right people led to Future General Corporation, a subsidiary of Paramount, where Trumbull did R&D on the future of entertainment.

He began experimenting with all kinds of film formats: Cinerama, Techniscope, VistaVision and so on. Doug and his partner Richard Yuricich tried almost every camera they could find, on every type of screen.

"It was only after we felt there was nothing all that special about any of them that I realised the one thing we had not yet tried was frame rates," says Trumbull.

Laboratory experiments began, shooting at 24, 36, 48, 60, 66 and 72 frames per second (fps). Students hooked up to electrocardiogram (ECG), electroencephalogram (EEG), electromyogram (EMG) and galvanic skin response devices watched the films in a double-blind test.

Through this, Trumbull was able to evaluate human physiological stimulation as a function of frame rate, with no other variables. "The results generated a perfect bell-shaped curve, validating that at or above 60fps, human physiological stimulation becomes very high, leaving 24 frames in the dust."

The impact of Showscan

The Showscan film process was born. Trumbull set up a screening of his ten-minute demo film, Night of the Dreams, for Paramount executives including Frank Yablans and Charlie Bluhdorn, chairman of the board of Gulf+Western, which owned Paramount.

The 60fps 70mm film had stereo sound and was shown with a special projector on a gigantic screen. "Bluhdorn jumped up out of his seat, turned to Frank Yablans and said 'Gentlemen, if we don't make a movie in this process we're fools!'"

Work began on Brainstorm, the first feature film to be produced for this process, but by the time the screenplay was completed the management at Paramount had changed. Production in Showscan never began.

"Studios would not finance a movie unless there were at least 2,000 theatres equipped to show it. The exhibitors would not install the projectors and screens unless there was a supply of movies. I fought for a couple of years until I finally had to abandon ship and move on," says Trumbull. Brainstorm was eventually made at MGM, using conventional 70mm technology.

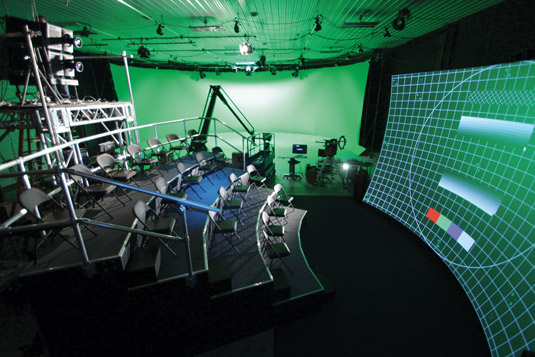

However, as the movie industry transitioned to digital projection, there was a new hope for Showscan. "I found out that digital projectors are effortlessly running at 144fps," says Trumbull. "Now we don't have to ask the exhibitors to put in new projectors because they are already built into the system."

The reel reason

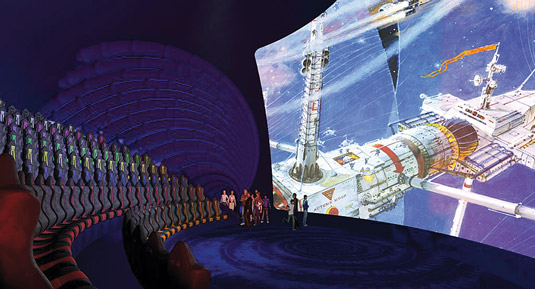

Trumbull's MAGI process, with its higher frame rates and brightness, better bit depth, larger screens and improved 3D, leads to a more immersive cinema experience. It's about the audience being in the movie rather than looking at the movie.

The large-format, high-resolution 70mm film stock was an improvement on the original Cinerama process too, but the multiplex concept took over: large standalone theatres with giant screens were subdivided into small-screen 35mm theatres, scuttling 70mm and Cinerama.

Conventional 35mm film is shot at 24fps, and the shutter is closed half the time while the film is advanced to the next frame. To reduce flicker, each frame is projected twice, giving 48 flashes of light per second. By contrast, a 24fps digital movie flashes each frame five times before moving on to the next frame. A stereo movie alternately flashes each frame three times per eye: each eye sees 72 flashes, totalling 144 flashes a second.
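The flash arithmetic above can be checked in a few lines. This is a sketch only: `flashes_per_second` is a hypothetical helper, and it assumes every frame is repeated the same number of times for each eye.

```python
def flashes_per_second(fps: int, flashes_per_frame: int, eyes: int = 1) -> int:
    """Total flashes of light per second reaching the screen.

    fps: unique frames per second for each eye
    flashes_per_frame: how many times each frame is repeated
    eyes: 1 for a flat (2D) movie, 2 for alternating-eye stereo
    """
    return fps * flashes_per_frame * eyes


# 35mm projection: 24fps, each frame shown twice
film_35mm = flashes_per_second(24, 2)

# Stereo: 24fps per eye, each frame flashed three times
per_eye = flashes_per_second(24, 3)
stereo_total = flashes_per_second(24, 3, eyes=2)

print(film_35mm, per_eye, stereo_total)  # 48 72 144
```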

2K, at 2,048 horizontal by 1,080 vertical pixels, is the current industry standard. It's called 2K whatever aspect ratio the filmmaker wants to use, whether 2.35:1, 1.7:1 or 16:9. 4K is twice as wide and twice as high, so the image contains four times as many pixels.
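A short sketch of the pixel arithmetic, assuming the picture is simply letterboxed or pillarboxed inside a 2,048 by 1,080 container. The helper names are hypothetical, and real digital cinema deliveries use specific container sizes, so treat the dimensions as illustrative.

```python
def pixel_count(width: int, height: int) -> int:
    return width * height


def fit_in_container(width: int, height: int, aspect: float) -> tuple[int, int]:
    """Largest picture of the given aspect ratio that fits inside the container."""
    if aspect >= width / height:
        # Wider than the container: use the full width, letterbox top and bottom
        return width, round(width / aspect)
    # Narrower than the container: use the full height, pillarbox the sides
    return round(height * aspect), height


two_k = pixel_count(2048, 1080)
four_k = pixel_count(2 * 2048, 2 * 1080)  # twice as wide, twice as high
print(four_k / two_k)  # 4.0

for aspect in (2.35, 1.7, 16 / 9):
    print(aspect, fit_in_container(2048, 1080, aspect))
```

Whatever the aspect ratio, the picture still lives in the same 2K container, which is why the name doesn't change.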

4K is now attracting interest across the industry; the jump is like the difference between 35mm and 70mm. It's much sharper, clearer and more vivid. "I realised," says Trumbull, "that we could do even better than Showscan, and it would be easy to try this process I'm experimenting with right now: 4K at 120fps in 3D where each frame is flashed only once, alternating left and right at 60fps per eye, on giant screens with very high brightness, and without a shutter", so 100 per cent of what happens before the camera is captured. "This creates an illusion where there is no more blurring, no more strobing, and no more flickering. It's perfect, like a window on to reality."
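The single-flash, alternating-eye scheme Trumbull describes can be sketched as a simple timetable. This assumes evenly spaced flashes and that the left eye goes first; `alternating_schedule` is a hypothetical helper, not part of any projector's software.

```python
from fractions import Fraction


def alternating_schedule(total_fps: int, n_frames: int) -> list[tuple[Fraction, str]]:
    """(time in seconds, eye) for each frame when every frame is flashed once
    and frames alternate between the left and right eye."""
    period = Fraction(1, total_fps)
    return [(i * period, "L" if i % 2 == 0 else "R") for i in range(n_frames)]


one_second = alternating_schedule(120, 120)
left = [t for t, eye in one_second if eye == "L"]
right = [t for t, eye in one_second if eye == "R"]

print(len(left), len(right))  # 60 60
print(left[1] - left[0])      # 1/60: each eye gets a new image every 1/60 s
```

Exact fractions are used so the timings don't drift through floating-point rounding.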

Trumbull's process uses off-the-shelf equipment: digital cameras, solid state drives and computers. "But I don't want to say if you raise your frame rate all your problems are solved, because that is not the case." One daunting consideration is data. Shooting 120 frames instead of the standard 24 gives five times the frame rate, and 4K carries four times the information of 2K, so together that is 20 times the data, roughly eight gigabytes a second. "We are on the outer edges of how much data can pass through a wire in a second."
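The 20-times figure follows from multiplying the two factors. The absolute data rate depends on bit depth, chroma sampling and compression, which aren't specified here. The sketch below assumes uncompressed RGB at 16 bits per channel (6 bytes per pixel) for a single 4K stream. That assumption gives about 6.4 GB/s; a slightly heavier format would approach the eight gigabytes quoted.

```python
def data_rate(width: int, height: int, fps: int, bytes_per_pixel: float) -> float:
    """Uncompressed bytes per second for a single stream of frames."""
    return width * height * fps * bytes_per_pixel


frame_rate_factor = 120 / 24                  # 5x the frames
pixel_factor = (4096 * 2160) / (2048 * 1080)  # 4x the pixels (4K vs 2K)
print(frame_rate_factor * pixel_factor)       # 20.0

# Assumption: RGB at 16 bits per channel, i.e. 6 bytes per pixel, no compression
rate = data_rate(4096, 2160, 120, 6)
print(f"{rate / 1e9:.1f} GB/s")               # 6.4 GB/s
```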

Now Doug is putting together all the pieces of the puzzle, which include hyperrealism, giant screens, powerful sound and the emerging technologies of lasers and 3D. "One of the 3D aspects I've been developing goes back to the old Cinerama process, where there were three cameras and three projectors. A close-up couldn't be accomplished by changing the lens, so the only option was to move the camera closer to the subject.

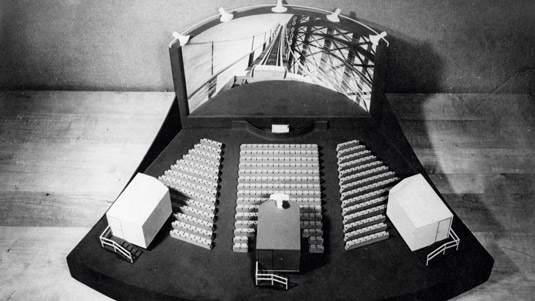

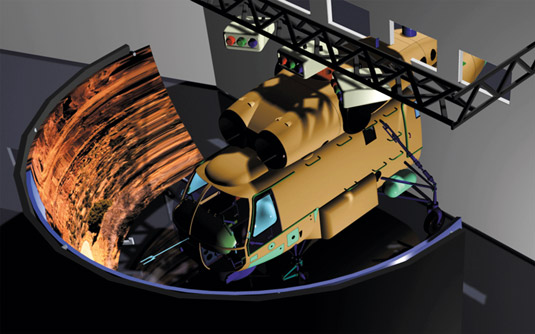

"The camera was recording the same angle of view that the projectors were delivering to the audience. That was critical to the sense of natural depth, perspective and space. I've adopted that in this process while designing my theatre with a curved screen, projector and seating area."

Shaping up: The Tørus

Though more light on the screen means better colour, image and stereo, the screen materials used today are little different from those used in 1960. In the beginning, screens were simply white, like a wall painted with flat white paint, and light was dispersed evenly but not brightly enough for the higher frame rate required for talkies.

Screens then advanced from the 'silver screen' (aluminium paint on woven, seamless sailcloth, an approach that ended with the addition of perforations for sound in 1930) to CinemaScope's curved screen, dubbed the Miracle Mirror, which had a 2:1 gain. This screen became the standard and remained so through the 1950s.

Now, flat or slightly curved 'gain screens' are the most common. Gain is an economical way to meet the need for higher brightness. In the first half of the 20th century, gain was achieved by coating the screen surface with retro-reflective, glass-like beads.

However, reflected light is directional and can create a 'hotspot', a small area of concentrated light aimed back at the viewer. The solution was to add a slight curve to the screen. The glass-like beads were later replaced with angular, reflective pearlescent pigments, reducing the hotspot even further.

The success of the curved design inspired the Tørus screen, developed by Glenn Berggren and Gerald Nash of the Sigma Design Group, with some help from Stewart Filmscreen, the premier projection screen manufacturer.

The Stewart Filmscreen Tørus screen that Doug is using has a gain of 3.0, meaning its peak brightness is three times that of a matte white surface. The seating pattern and the projector location are mapped in a CAD file, and the ideal incident and complementary angles of light distribution are then ray traced. If you map out enough data points, you can connect the dots and derive the curves of a Tørus, which normalise the light angles for most viewers so that brightness is uniform.
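To make the ray-tracing idea concrete, here is a heavily simplified sketch. It treats each point of the screen as a tiny mirror and asks which way that point must face to bounce the projector's light toward the average seat. The projector position, seat grid and mirror simplification are all hypothetical; a real gain screen scatters light into a lobe, and an actual Tørus design fits its curves to many seats rather than one average direction.

```python
import math

Vec = tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def unit(v: Vec) -> Vec:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def ideal_normal(point: Vec, projector: Vec, seats: list[Vec]) -> Vec:
    """Surface normal at `point` that reflects the projector's light toward the
    average seat direction. By the law of reflection, the normal bisects the
    direction to the light source and the direction to the viewer."""
    to_projector = unit(sub(projector, point))
    seat_dirs = [unit(sub(seat, point)) for seat in seats]
    avg = tuple(sum(d[i] for d in seat_dirs) / len(seat_dirs) for i in range(3))
    to_seats = unit(avg)
    return unit(tuple(p + s for p, s in zip(to_projector, to_seats)))


def tilt_from_flat(normal: Vec) -> float:
    """Degrees between the ideal normal and a flat screen's normal (0, 0, 1)."""
    return math.degrees(math.acos(max(-1.0, min(1.0, normal[2]))))


# Hypothetical venue in metres: screen in the plane z = 0, audience at z > 0
PROJECTOR = (0.0, 3.0, 30.0)
SEATS = [(x, 1.2, z) for x in (-6.0, 0.0, 6.0) for z in (10.0, 15.0, 20.0)]

for x in (0.0, 5.0, 10.0):
    n = ideal_normal((x, 4.0, 0.0), PROJECTOR, SEATS)
    print(f"x = {x:4.1f} m  tilt = {tilt_from_flat(n):4.1f} deg  normal x = {n[0]:+.2f}")
```

The required tilt grows toward the edge, and the edge normal turns inward to face the audience: the kind of compound curvature a Tørus approximates.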

A typical flat screen produces a wider spread (a larger standard deviation) of incident angles across the venue, and therefore of the complementary angles at which light is distributed. Screens do not amplify light; they can only redirect the distribution of reflected light. Higher-gain screens reflect light more directionally and less diffusely, whereas a matte white screen distributes light hemispherically. The Tørus works by bending the four edges slightly towards the viewer, dispersing the light evenly across the surface.

"The more you bend, the more the light aims at the viewer," Glenn Berggren explains. "The result is the entire screen is a hot spot, because the normal fall-off of a gain screen is ameliorated via strategic angular positioning of the viewing surface, via the compound curved shape."
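The fall-off Berggren mentions, and the point that screens only redirect light, can be illustrated with a textbook approximation. Model the screen's reflection as a cos^n lobe centred on the peak viewing direction, normalised so it reflects the same total light as a matte white (Lambertian) screen. This is an assumed model with hypothetical helper names, not Stewart's measured data.

```python
import math


def lobe_exponent(peak_gain: float) -> float:
    """Exponent n of a cos^n lobe with the given peak gain, chosen so the screen
    reflects exactly as much total light as a matte white (Lambertian) screen."""
    return 2.0 * peak_gain - 2.0


def gain(angle_deg: float, n: float) -> float:
    """Brightness relative to matte white, viewed angle_deg off the peak direction."""
    return (n + 2.0) / 2.0 * math.cos(math.radians(angle_deg)) ** n


def total_reflected(n: float, steps: int = 100_000) -> float:
    """Reflected light relative to matte white, integrated over the hemisphere
    (midpoint rule). Stays at 1.0 for any n: the lobe redistributes, not amplifies."""
    d_theta = (math.pi / 2) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        total += gain(math.degrees(theta), n) * math.cos(theta) * math.sin(theta) * d_theta
    # Matte white integrates to 1/2 over theta, so multiply by 2 to normalise
    return 2.0 * total


matte = lobe_exponent(1.0)  # n = 0: equally bright from every angle
high = lobe_exponent(3.0)   # n = 4: a 3.0 gain screen

for angle in (0, 20, 40, 60):
    print(angle, round(gain(angle, matte), 2), round(gain(angle, high), 2))

print(round(total_reflected(matte), 6), round(total_reflected(high), 6))  # 1.0 1.0
```

In this model the 3.0 gain screen is three times brighter than matte white on axis but falls well below it 60 degrees off axis, while the total reflected light is unchanged. Curving the surface so that more seats sit near the peak direction is how the fall-off is countered.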

According to Grant Stewart: "The 3.0 gain screen is designed for the preservation of states of polarisation for the light hitting the surface. This preservation of polarisation enables the content to be separated into two channels of information, for right eye and left eye, and this is one of the ways 3D images are provided. The eyewear furnished to the viewer contains complementary polarisers which 'blank' the light of one channel from each eye, identical to the way folks use rotated polarising filters to observe a solar eclipse."
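The 'blanking' Stewart describes follows from Malus's law: a linear polariser passes cos² of the angle between the light's polarisation and the filter's axis. The sketch below assumes linear polarisation on hypothetical 45 and 135 degree axes. Many cinema 3D systems use circular polarisation instead, which avoids the head-tilt crosstalk shown here.

```python
import math


def malus(intensity: float, light_axis_deg: float, filter_axis_deg: float) -> float:
    """Malus's law: intensity of linearly polarised light after a linear polariser."""
    delta = math.radians(light_axis_deg - filter_axis_deg)
    return intensity * math.cos(delta) ** 2


# Hypothetical polarisation axes for the two channels, 90 degrees apart
LEFT_AXIS, RIGHT_AXIS = 45.0, 135.0


def what_eye_sees(eye_filter_deg: float, head_tilt_deg: float = 0.0) -> dict[str, float]:
    """Fraction of each channel that reaches one eye through its filter."""
    axis = eye_filter_deg + head_tilt_deg
    return {
        "left channel": malus(1.0, LEFT_AXIS, axis),
        "right channel": malus(1.0, RIGHT_AXIS, axis),
    }


print(what_eye_sees(LEFT_AXIS))        # left passes, right is (almost exactly) blanked
print(what_eye_sees(LEFT_AXIS, 10.0))  # a 10-degree head tilt leaks about 3% crosstalk
```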

Motion clarity

Motion blur is an artist's representation based on archaic rules that are at least a century old. "Cinema, at 24 Hertz, uses motion blur to compensate for jittering due to the currently used lower frame rate, limiting the sharpness and fidelity of an object from a human perception perspective," explains Larry Paul.

"Back in the 1920s, 24 Hertz was considered adequate, but mostly for sound reasons." If your visual effects aren't done properly, they will look fake or be difficult to watch. The higher the frame rate, the better the potential result, but the more challenging it is to create an environment that looks real. When objects move quickly at 24Hz they are blurred while the shutter is open; however, this is not how we see the real world.
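The trade-off can be put in numbers with the standard shutter-angle convention. A 180-degree shutter, the usual setting at 24fps, is open half the time; running without a shutter corresponds to 360 degrees. The object speed below is hypothetical.

```python
def exposure_time(fps: float, shutter_angle_deg: float) -> float:
    """Seconds the shutter stays open per frame; 360 degrees means it never closes."""
    return (shutter_angle_deg / 360.0) / fps


def blur_length_px(speed_px_per_s: float, fps: float, shutter_angle_deg: float) -> float:
    """How far a moving object smears across the frame during one exposure."""
    return speed_px_per_s * exposure_time(fps, shutter_angle_deg)


def jump_px(speed_px_per_s: float, fps: float) -> float:
    """How far the object moves between consecutive frames (seen as strobing)."""
    return speed_px_per_s / fps


SPEED = 1200.0  # hypothetical object crossing 1,200 pixels per second

for fps, shutter in ((24, 180), (120, 360)):
    print(f"{fps}fps, {shutter} deg shutter: "
          f"blur {blur_length_px(SPEED, fps, shutter):.1f}px, "
          f"jump {jump_px(SPEED, fps):.1f}px")
```

At 24fps the object smears 25 pixels and jumps 50 between frames; at 120fps both shrink to 10, so far less of the image is hidden behind blur.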

High frame rate (HFR) provides the temporal advantage of sharper imagery. For example, when you are on a train and turn your head to track an object outside, you can see the detail in that object. At a low frame rate, an object that was blurred when it was captured stays blurry however the viewer's eyes track it.

Sight and sound

In cinema, surrounding the viewer with sound helps to convey emotion, connect the audience to the characters and the story, and subliminally transport them to another location. Typically this has been achieved with a channel-based approach, where the predominant standards have been 5.1 and 7.1.

Trumbull is experimenting with the Dolby Atmos sound system, which places sonic elements precisely by addressing every loudspeaker in the theatre individually. Atmos can isolate the reproduction of a sound to a specific location, or pan it around the room or overhead from the ceiling. Subtle sounds can come from specific locations: the sound of dripping in the deep recesses of a cave, for example, or a single cricket that ominously falls silent.
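Dolby's own Atmos renderer is not described here and is not reproduced. As a stand-in, this sketch uses a generic object-panning technique, distance-based amplitude panning, to show how a sound placed at a point in the room can be spread across individually addressed speakers. The speaker layout, the `amplitude_pan` helper and its parameters are all hypothetical.

```python
import math


def amplitude_pan(source: tuple[float, float, float],
                  speakers: list[tuple[float, float, float]],
                  exponent: float = 1.0,
                  spread: float = 0.1) -> list[float]:
    """Distance-based amplitude panning: nearer speakers get more of the signal.

    Gains are scaled so their squares sum to 1, keeping total power constant
    wherever the object is placed. `spread` avoids dividing by zero when the
    object sits exactly on a speaker.
    """
    weights = []
    for speaker in speakers:
        dist = math.sqrt(sum((s - p) ** 2 for s, p in zip(source, speaker)) + spread ** 2)
        weights.append(1.0 / dist ** exponent)
    norm = math.sqrt(sum(w * w for w in weights))
    return [w / norm for w in weights]


# Hypothetical speaker layout in metres (x across, y front to back, z height)
SPEAKERS = {
    "front left": (-8.0, 0.0, 3.0),
    "front right": (8.0, 0.0, 3.0),
    "rear left": (-8.0, 20.0, 3.0),
    "rear right": (8.0, 20.0, 3.0),
    "ceiling": (0.0, 10.0, 9.0),
}

drip = (-6.0, 18.0, 2.0)  # a sound object near the back left of the room
gains = amplitude_pan(drip, list(SPEAKERS.values()))
for name, g in zip(SPEAKERS, gains):
    print(f"{name:12s} {g:.2f}")
```

The nearest speaker, rear left, receives the largest gain, and moving `drip` changes the mix without changing overall loudness.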

Controlling perception

It is important to consider how our senses interact. For example, a ventriloquist with a dummy creates the illusion that the ventriloquist's voice is coming from the dummy. Here the auditory and visual systems connect the sound source to the location of the visual input.

Particularly in daylight, our physiological system tends to weight visual information more heavily because, from an evolutionary perspective, the visual input is probably accurate. The result is an illusion.
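The weighting described above is often formalised in perception research as inverse-variance (maximum-likelihood) cue combination: each sense's location estimate counts in proportion to its reliability. The sketch below is that generic model with hypothetical numbers, not a model taken from Crum or Dolby. 'Daylight' is represented simply as a smaller visual standard deviation.

```python
def combine_cues(visual_pos: float, visual_sd: float,
                 audio_pos: float, audio_sd: float) -> tuple[float, float]:
    """Inverse-variance weighted average of two location estimates (degrees).

    The less noisy cue (smaller standard deviation) gets more weight.
    Returns (perceived position, weight given to vision).
    """
    w_visual = 1.0 / visual_sd ** 2
    w_audio = 1.0 / audio_sd ** 2
    visual_share = w_visual / (w_visual + w_audio)
    return visual_share * visual_pos + (1.0 - visual_share) * audio_pos, visual_share


# Hypothetical setup: dummy's mouth at 0 degrees, ventriloquist's mouth at 10 degrees
daylight = combine_cues(0.0, 1.0, 10.0, 8.0)   # sharp vision, vague hearing
dim_light = combine_cues(0.0, 8.0, 10.0, 8.0)  # vision no better than hearing

print(daylight)   # voice heard almost exactly at the dummy
print(dim_light)  # (5.0, 0.5): the voice lands halfway between
```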

According to Poppy Crum: "In reality, the information our brain receives regarding the sonic and visual location of an environmental object is often incongruent. In this way, one might say we live in a constant state of ventriloquism, and the situation with the dummy doll is much more than a parlour trick. Rather, it is a fundamental adaptation that allows us to efficiently survive in a complex sensory world."

The human ability to match an audio signal with the related visual input can be applied to the stereo 3D illusion in cinema: as an image pops off the screen, the corresponding sound appears to move forward with it. Sound can fool viewers into thinking they saw something they didn't. This means you can create experiences that take advantage of how the brain identifies relevant information, engage the physiological system more strongly and heighten physiological experiences.

"We are finding all kinds of fascinating phenomena, an equally interesting aspect of perception of how sound and picture fit together, convincing your nervous system that what you are seeing is real," explains Trumbull. "It's quite different from simply telling a story; it's a completely different art form."

All this means it's only a matter of time before digital effects, too, must be of the highest quality.

Words: Renee Dunlop

Renee Dunlop has over 20 years' experience working as a script analyst, creative and technical writer, 2D and 3D artist. She is editor of Production Pipeline Fundamentals for Film and Games. This article originally appeared in 3D World issue 181.

