Behind the scenes of 'Lights': the latest WebGL sensation!

Interactive studio HelloEnjoy has built a mind-blowing 3D music video for Ellie Goulding's song 'Lights'. Here, creative director Carlos Ulloa explains how the team chose WebGL and created various immersive graphic effects.

HelloEnjoy was asked by Interscope Records to create an interactive music experience using WebGL for the British artist Ellie Goulding.

At HelloEnjoy, we believe in creating interactive 3D experiences that are both intuitive and visually engaging. Our goal is to demand very little from the user to reach broader audiences, and at the same time reward them with high-end graphics and aesthetics.

We love music and have always seen a lot of potential in making it visual and interactive, so when Tool of North America, our agents in the US, approached us with this project we jumped in immediately.

The client gave us free creative rein and was very understanding of the experimental nature of the technology, which allowed us to play with different visualisation techniques. In the same way that live music visuals make for a heightened experience, we wanted to achieve the feeling of perceiving music in an enhanced way through light, colour and interactivity.

Technology

Being technology-agnostic allows us to focus on the solution that best meets our project's needs. In this case, we believed the best option was WebGL, a new web technology that brings hardware-accelerated 3D graphics to modern browsers without installing additional software.

WebGL is a JavaScript API based on OpenGL ES 2.0, the mobile-oriented version of OpenGL. OpenGL is a widely used standard graphics library for rendering 2D and 3D graphics on desktop computers, and its ES variant does the same job on mobile devices such as tablets and smartphones.

To unleash the power of WebGL we chose the three.js 3D engine, a powerful and easy to use library created by Mr.doob and maintained by a very talented team of developers around the world.

And although you can get started in JavaScript development using a text editor, for a project as ambitious as ours, containing over 12,000 lines of code, you need a more powerful environment. We use WebStorm, a JavaScript IDE with autocompletion, refactoring, on-the-fly code analysis and other advanced features.

Music

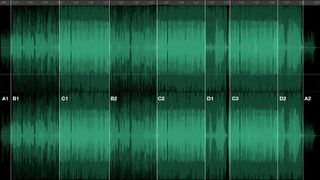

As a foundation for the visuals to build up along with the music, we analysed the different stems of the song to capture the structure and energy levels for each part of the experience.

The stems were provided by the record label and gave us a new perspective and a deeper understanding of the music. Some of them were used to sync graphic elements and others were combined to obtain high quality volume and audio spectrum data.

Environment

Our creative approach was based around the concept of flying over an infinite landscape which reacts to the sound and evolves along with the music. This allows user interaction but also delivers a great experience if the user chooses not to interact.

We used a variety of tools and techniques to create the different elements of the site. Some come from traditional game development, while others are common in graphics programming. We have selected a few of them to share with you.

Terrain

The terrain is made of identical tiles which are placed in the field of view of the camera. We save considerable processing time by reusing them in different places, instead of updating each one individually. The trade-off is that the tiles have to be seamless, so that they tile without visible joins.
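A minimal sketch of the tile-reuse idea, assuming square tiles laid out on a regular grid around the camera. The function name, the `radius` parameter and the square layout are illustrative assumptions, not the project's actual code, which places tiles according to the camera's field of view.

```javascript
// Hypothetical helper: returns the world-space origins of the tiles that
// should surround the camera. Because every tile is identical, the same
// meshes can simply be moved to these positions instead of being rebuilt.
function visibleTileOrigins(cameraX, cameraZ, tileSize, radius) {
  // Grid index of the tile the camera is currently flying over.
  const centreX = Math.floor(cameraX / tileSize);
  const centreZ = Math.floor(cameraZ / tileSize);

  const origins = [];
  for (let dz = -radius; dz <= radius; dz++) {
    for (let dx = -radius; dx <= radius; dx++) {
      origins.push({
        x: (centreX + dx) * tileSize,
        z: (centreZ + dz) * tileSize,
      });
    }
  }
  return origins;
}

// A 3x3 block of 100-unit tiles around a camera at (250, -10).
const exampleTiles = visibleTileOrigins(250, -10, 100, 1);
```

When the camera crosses a tile boundary the whole block shifts by one tile, which gives the impression of an infinite landscape while the number of meshes stays constant.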

The terrain itself is generated from a 66x66-pixel height map, a greyscale image in which the brightness of each pixel represents the height of the land. We started by creating it in Photoshop using the Clouds filter, but the resulting terrain was too steep. We obtained a more rounded landscape by applying Gaussian blur, but we knew there was still room for improvement.

We finally realised that the standard colour resolution of 256 levels of grey wasn't high enough for our needs: the terrain required more precision. Our solution was to soften the height map values further in JavaScript, producing gentle hills and rounded valleys.
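One way such softening could be done is a small blur over floating-point copies of the height values, so the result is no longer limited to 256 steps. The sketch below is an assumption about the technique (a 3x3 box blur that wraps at the edges to keep the map tileable), not the filter actually used in the project.

```javascript
// Soften a square height map. `heights` holds size * size values in row
// order. Working in Float32Array keeps the fractional heights that an
// 8-bit greyscale image cannot store.
function softenHeightMap(heights, size, passes = 1) {
  let src = Float32Array.from(heights);
  for (let p = 0; p < passes; p++) {
    const dst = new Float32Array(src.length);
    for (let y = 0; y < size; y++) {
      for (let x = 0; x < size; x++) {
        let sum = 0;
        // Average the 3x3 neighbourhood, wrapping around the borders
        // (an assumption made here so opposite edges still match).
        for (let dy = -1; dy <= 1; dy++) {
          for (let dx = -1; dx <= 1; dx++) {
            const sx = (x + dx + size) % size;
            const sy = (y + dy + size) % size;
            sum += src[sy * size + sx];
          }
        }
        dst[y * size + x] = sum / 9;
      }
    }
    src = dst;
  }
  return src;
}
```

Each extra pass rounds the landscape a little more, at the cost of flattening its overall relief.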

To make it look more interesting, we wanted the ground to display different colours and shapes. To do this, we needed to render a dynamic texture in real time and apply it to the landscape. We started doing it in a 2D canvas at 512x512 resolution, but performance suffered when redrawing it on every frame. In the end, we opted for a separate WebGL scene that is rendered into the texture used for the terrain.

This terrain scene works essentially in 2D with its own orthographic camera (which doesn't have any perspective). Here we use standard planes to create different types of lines and circles at various parts of the song. At this point, we also render the lights emitted from the other objects in the landscape, and finally we use post-processing to make the texture seamless along its sides.

Glowing spheres

A range of different light-emitting objects populate our environment. They are all generated by code and have various shapes, shaders and behaviours.

The spheres are interactive and synced to the beats. For their shading we use a technique called vertex colour, where a colour is assigned to each vertex and the graphics hardware smoothly interpolates between them, creating lovely gradients.
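To illustrate what the hardware does with vertex colours, here is a small sketch that blends the three colours of a triangle by hand. The function name is hypothetical, and perspective correction is ignored for simplicity.

```javascript
// Blend three vertex colours using barycentric weights (which sum to 1).
// The GPU performs an equivalent blend for every pixel inside a triangle.
function interpolateVertexColours(colours, weights) {
  const result = [0, 0, 0];
  for (let v = 0; v < 3; v++) {
    for (let channel = 0; channel < 3; channel++) {
      result[channel] += colours[v][channel] * weights[v];
    }
  }
  return result;
}

const red = [1, 0, 0];
const green = [0, 1, 0];
const blue = [0, 0, 1];

// Exactly on the red vertex the pixel is pure red.
const atRedVertex = interpolateVertexColours([red, green, blue], [1, 0, 0]);
// Halfway along the red-green edge the two colours mix equally.
const midRedGreen = interpolateVertexColours([red, green, blue], [0.5, 0.5, 0]);
```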

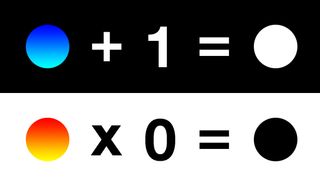

To fade the spheres to black or white we use a custom shader with multiply and add parameters. On graphics cards, RGB colour values range from 0 to 1 rather than 0 to 255. So if we multiply a colour by 1 we get the same colour, but if we multiply it by 0 we obtain black (0,0,0). Adding works as you would expect: adding 0 leaves the colour unchanged, while adding 1 gives white (1,1,1), because the result is clamped.
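The arithmetic can be checked outside a shader. The sketch below mirrors it in plain JavaScript; the function names and the order of operations (multiply first, then add) are assumptions.

```javascript
// Clamp a value to the 0..1 range used for colours on the GPU.
function clamp01(value) {
  return Math.min(1, Math.max(0, value));
}

// Apply the multiply and add parameters to an [r, g, b] colour.
// In GLSL the same idea would read: clamp(colour * multiply + add, 0.0, 1.0)
function applyMultiplyAdd(rgb, multiply, add) {
  return rgb.map((channel) => clamp01(channel * multiply + add));
}

const orange = [1.0, 0.5, 0.0];
const unchanged = applyMultiplyAdd(orange, 1, 0); // same colour
const fadedToBlack = applyMultiplyAdd(orange, 0, 0); // (0,0,0)
const fadedToWhite = applyMultiplyAdd(orange, 1, 1); // clamped to (1,1,1)
```

Animating `multiply` from 1 to 0, or `add` from 0 to 1, over a few frames produces the fade.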

Light beams

The last part of the song is very epic. Starting with a silence, it unleashes a lot of energy at once. Here's where we introduce the light beams.

To create this effect we build a mesh out of three planes rotated 120 degrees apart. Their texture is a simple gradient rendered with additive blending, so where the planes overlap their brightness adds up. The result is a bright stream of light which gives a nice illusion of volume.
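A minimal sketch of the two ingredients, with hypothetical function names: the rotation of each plane around the beam's axis, and the way additive blending accumulates brightness where planes overlap. In three.js the blending itself would normally be left to the material (for example by setting its `blending` to `THREE.AdditiveBlending`); the function here only reproduces the arithmetic.

```javascript
// Rotation of each plane around the beam's vertical axis, in radians.
// With three planes the angles are 0, 120 and 240 degrees.
function beamPlaneAngles(count = 3) {
  return Array.from({ length: count }, (_, i) => (i * 2 * Math.PI) / count);
}

// Additive blending: the incoming colour is added to what is already in
// the frame buffer, and the result is clamped to 1.
function blendAdditive(destination, source) {
  return destination.map((channel, i) => Math.min(1, channel + source[i]));
}

// Three overlapping layers of a dim blue saturate towards a bright core.
const layer = [0.1, 0.2, 0.4];
let pixel = [0, 0, 0];
for (let i = 0; i < 3; i++) {
  pixel = blendAdditive(pixel, layer);
}
```

Because the layers only ever add light, the beam looks brightest along its centre, where all three planes cross.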

Twitter integration

The names and avatar images of users who tweet about the site can be seen during the experience. The real-time tweets are delivered by Echo's StreamServer platform, which allows us to quickly collect, store and serve the live data with little engineering effort.

A grid of particles is used to display them, with each particle representing a pixel of the original image. To create the text in the Twitter names, we use a bitmap font converted in Glyph Designer, a Mac-only app normally used to create colour bitmap fonts for iOS games. It exports an image with all the characters and a font description file in Cocos2D .fnt format which, being just a text file, is easy to parse in JavaScript.
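As an illustration of how little code the parsing needs, here is a sketch that reads the `char` lines of a text-format .fnt file into a lookup table. The function name and the sample data are made up; the field names (`id`, `x`, `y`, `width`, `height`, `xoffset`, `yoffset`, `xadvance`) follow the usual text layout of this format.

```javascript
// Parse the glyph records of a text .fnt file into a Map keyed by
// character code. Lines other than "char ..." (info, common, page,
// chars, kerning) are ignored in this sketch.
function parseFntGlyphs(text) {
  const glyphs = new Map();
  for (const line of text.split(/\r?\n/)) {
    if (!line.startsWith("char ")) continue;
    const fields = {};
    // Every field on a char line is a key=integer pair.
    for (const match of line.matchAll(/(\w+)=(-?\d+)/g)) {
      fields[match[1]] = Number(match[2]);
    }
    glyphs.set(fields.id, fields);
  }
  return glyphs;
}

// Made-up sample with two glyphs, 'A' (65) and 'B' (66).
const sampleFnt = [
  "common lineHeight=32 base=26 scaleW=256 scaleH=256 pages=1",
  'page id=0 file="font.png"',
  "chars count=2",
  "char id=65 x=0 y=0 width=20 height=22 xoffset=0 yoffset=4 xadvance=21 page=0 chnl=0",
  "char id=66 x=22 y=0 width=18 height=22 xoffset=-1 yoffset=4 xadvance=19 page=0 chnl=0",
].join("\n");

const sampleGlyphs = parseFntGlyphs(sampleFnt);
```

Each record gives the rectangle of the glyph inside the exported image, which is all that is needed to copy its pixels into the particle grid.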

Voice

Ellie Goulding's voice is visualised as a sparkling light with trails that move and change shape according to the volume and spectrum frequency of the combined vox stems.

This type of data can produce very large files, so it needs to be compressed to avoid increasing loading times considerably. Fortunately, storing raw data as PNG images works very well in JavaScript: the browser decompresses them automatically, and the pixel data can easily be read back with HTML5 canvas.
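A sketch of the read-back step, assuming one sample is stored per colour channel and the alpha channel is left fully opaque (data in a partly transparent pixel can be altered when the canvas premultiplies alpha). The packing scheme and function name are assumptions, not the project's actual file layout.

```javascript
// In a browser the bytes would come from a canvas, along the lines of:
//   context.drawImage(image, 0, 0);
//   const rgba = context.getImageData(0, 0, image.width, image.height).data;
// `rgba` is then a flat array of bytes: r, g, b, a, r, g, b, a, ...

// Turn the red, green and blue bytes into samples in the 0..1 range,
// skipping every fourth (alpha) byte.
function decodeSamples(rgba) {
  const samples = [];
  for (let i = 0; i < rgba.length; i += 4) {
    samples.push(rgba[i] / 255, rgba[i + 1] / 255, rgba[i + 2] / 255);
  }
  return samples;
}

// Two pixels' worth of made-up data, giving six samples.
const examplePixels = new Uint8ClampedArray([0, 255, 51, 255, 102, 0, 0, 255]);
const exampleSamples = decodeSamples(examplePixels);
```

PNG compression is lossless, so the bytes read back are exactly the ones that were saved.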

Our first approach was to create the sparkling light with a 3D particle system, but we needed too many particles to achieve the effect we wanted. With so many effects going on, we had little performance left for this task and we realised we needed a different solution.

In order to obtain a realistic effect with good performance, the particle system was rendered in TimelineFX, a powerful particle effects editor used in games, and exported as a sprite sheet.

The trails, on the other hand, are created in 3D using alpha and additive blending modes. They are animated in real time using a combination of sound spectrum data and trigonometric functions that smooth the motion.

Post-processing

And finally, a couple of effects are applied to the final render. Bloom adds a nice glow around the objects in the scene, while vignette reduces the brightness at the borders of the image. The combination of both effects produces a warmer, more realistic image and reinforces the illusion of light and shadow.
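The vignette is the simpler of the two effects to express as a formula. The sketch below computes a darkening factor from a pixel's distance to the centre of the image; the quadratic falloff and the `strength` parameter are assumptions, and in practice the calculation would run in a fragment shader rather than in JavaScript.

```javascript
// Brightness factor for a pixel at texture coordinates (u, v), both in
// the 0..1 range. The factor is 1 at the centre of the image and
// 1 - strength at the corners.
function vignetteFactor(u, v, strength) {
  const dx = u - 0.5;
  const dy = v - 0.5;
  // Squared distance from the centre, scaled so that a corner gives 1.
  const distanceSquared = 2 * (dx * dx + dy * dy);
  return Math.max(0, 1 - strength * distanceSquared);
}

const centreBrightness = vignetteFactor(0.5, 0.5, 0.4); // no darkening
const cornerBrightness = vignetteFactor(0, 0, 0.4); // darkest point
```

Multiplying each pixel's colour by this factor darkens the borders while leaving the centre of the frame untouched.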

Conclusion

We believe real-time 3D graphics are particularly suited to enhancing music, allowing users to enjoy it in new and exciting ways by creating a richer experience with immersive visuals and interaction. It's important to remember that graphics are meant to serve the experience and that no amount of technical wizardry can replace well-defined aesthetics. Even so, there is little doubt that the advent of hardware-accelerated 3D on the web and on mobile brings new levels of expressiveness. We have exciting times ahead.
